#FactCheck

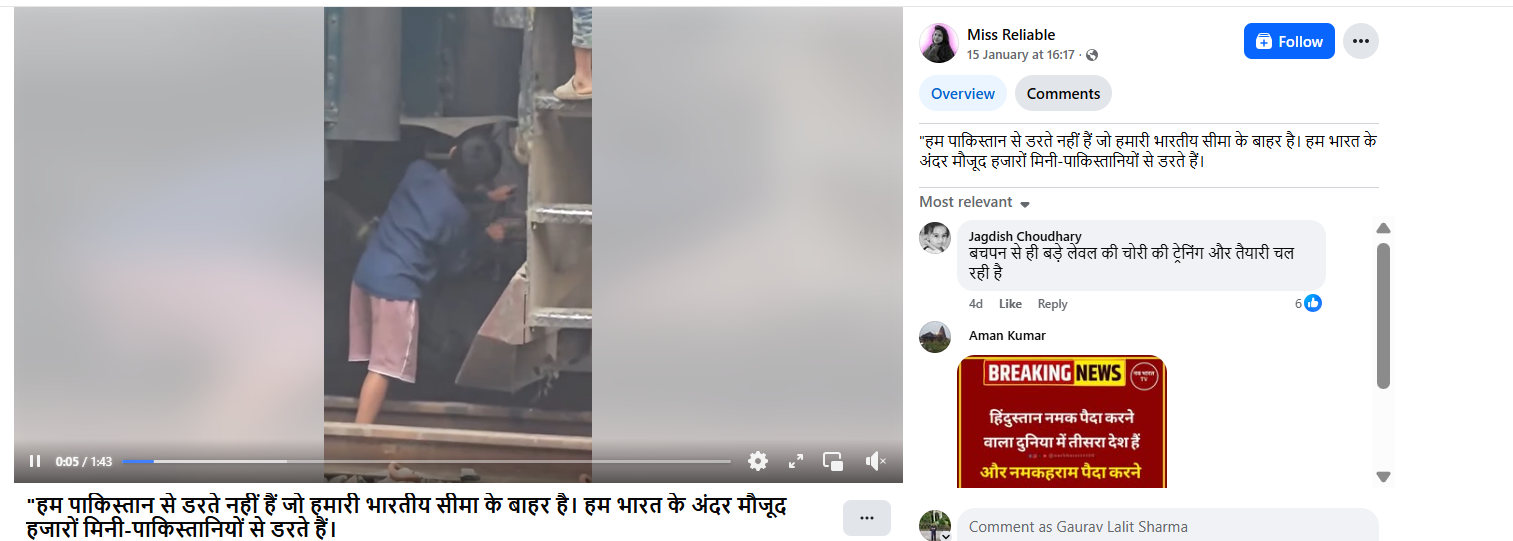

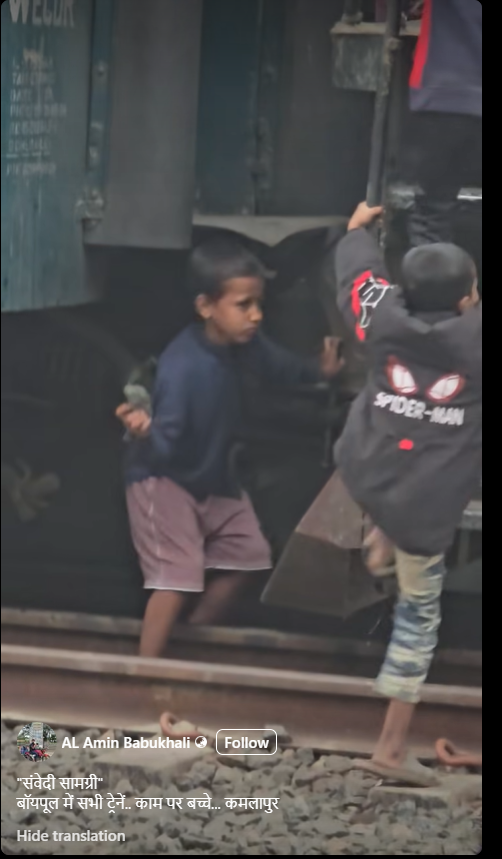

A video circulating widely on social media shows a child throwing stones at a moving train, while a few other children can also be seen climbing onto the engine. The video is being shared with a communal narrative, with claims that the incident took place in India.

CyberPeace Foundation’s research found the viral claim to be misleading. Our research revealed that the video is not from India but from Bangladesh, and that it is being falsely linked to India on social media.

Claim:

On January 15, 2026, a Facebook user shared the viral video claiming it depicted an incident from India. The post carried a provocative caption stating, “We are not afraid of Pakistan outside our borders. We are afraid of the thousands of mini-Pakistans within India.” The post has been widely circulated, amplifying communal sentiments.

Fact Check:

To verify the authenticity of the video, we extracted keyframes from the viral clip and ran a reverse image search on them using Google Lens. This led us to the same video, uploaded on December 28, 2025, by a Bangladeshi Facebook account named AL Amin Babukhali. The caption of the original post mentions Kamalapur, a well-known railway station in Bangladesh. This strongly indicates that the incident did not occur in India.

Further analysis of the video shows that the train engine carries the marking “BR” along with text in Bengali script. “BR” stands for Bangladesh Railway, which confirms the origin of the train. To corroborate this further, we searched for images of Bangladesh Railway engines through a Google search. We found multiple images on Getty Images showing train engines with the same design and markings as those seen in the viral video. The visual match clearly establishes that the train belongs to Bangladesh Railway.

Conclusion

Our research confirms that the viral video is from Bangladesh, not India. It is being shared on social media with a false and misleading claim to give it a communal angle and link it to India.

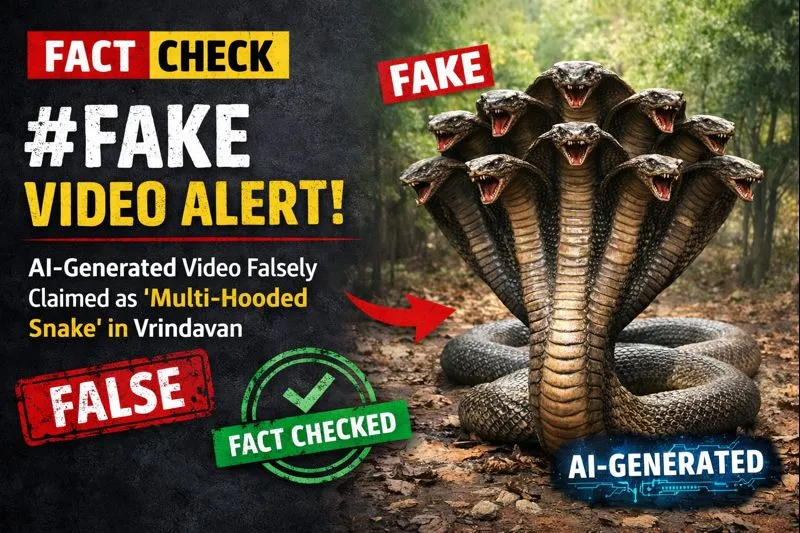

A video is being widely shared on social media showing devotees seated in a boat appearing stunned as a massive, multi-hooded snake—resembling the mythical Sheshnag—suddenly emerges from the middle of a water body.

The video captures visible panic and astonishment among the devotees. Social media users are sharing the clip claiming that it is from Vrindavan, with some portraying the sight as a divine or supernatural event. However, research by the CyberPeace Foundation found the viral claim to be false. Our research revealed that the video is not authentic and has been generated using artificial intelligence (AI).

Claim

On January 17, 2026, a user shared the viral video on Instagram with a caption suggesting that God had appeared again in the age of Kaliyug. The post claims that a terrifying video from Vrindavan has surfaced in which devotees sitting in a boat were shocked to see a massive multi-hooded snake emerge from the water. The caption further states that devotees are hailing the creature as an incarnation of Sheshnag or Vasuki Nag, raising religious slogans and asking whether the sight is a divine sign. (The link to the post, its archive link, and screenshots are available.)

- https://www.instagram.com/reel/DTngN9FkoX0/?igsh=MTZvdTN1enI2NnFydA%3D%3D

- https://archive.ph/UuAqB

Fact Check:

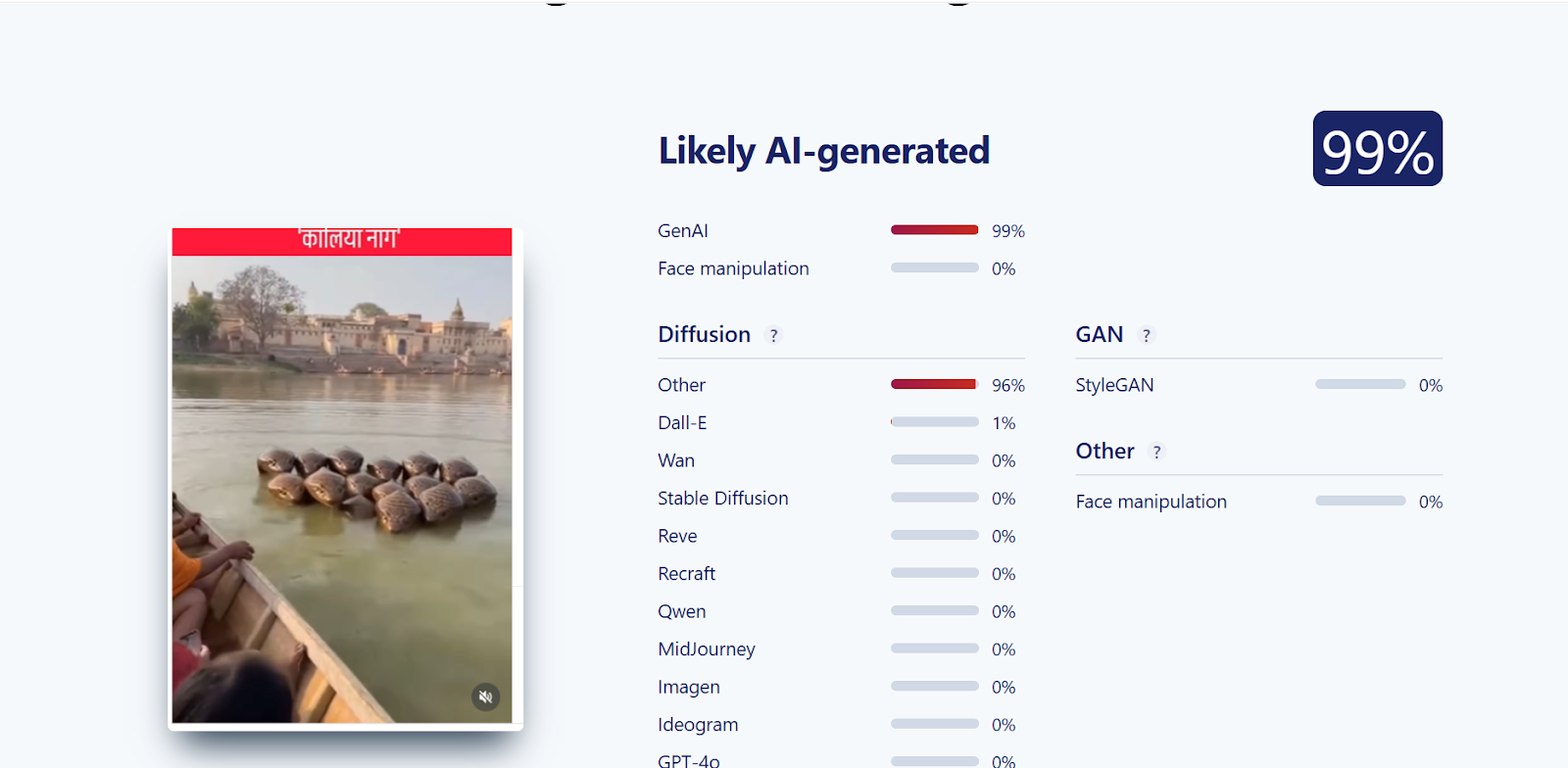

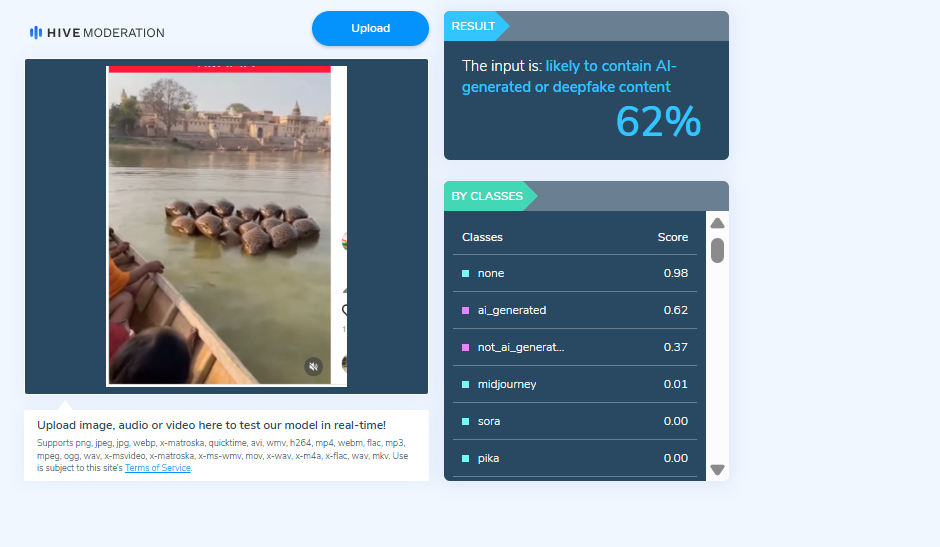

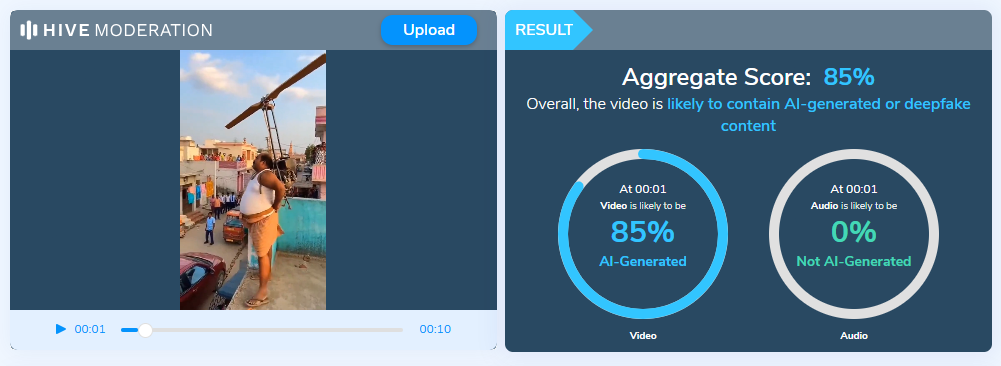

Upon closely examining the viral video, we suspected that it might be AI-generated. To verify this, we scanned the video using the AI detection tool Sightengine, which rated it 99 per cent likely to be AI-generated.

Next, we analysed the video using another AI detection tool, Hive Moderation, which rated it 62 per cent likely to be AI-generated.

Conclusion

Our research clearly establishes that the viral video claiming to show a multi-hooded snake in Vrindavan is not real. The clip has been created using artificial intelligence and is being falsely shared on social media with religious and sensational claims.

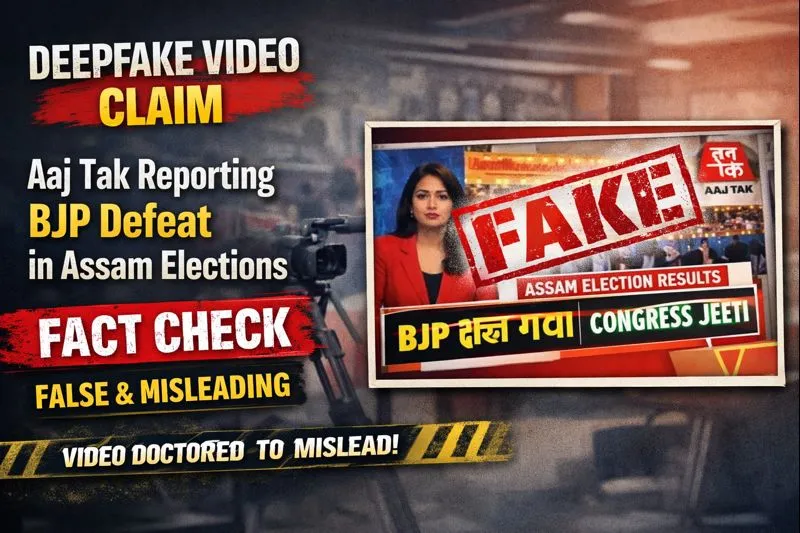

Assembly elections are due to be held in Assam later this year, with polling likely in April or May. Ahead of the elections, a video claiming to be an Aaj Tak news bulletin is being widely circulated on social media.

In the viral video, Aaj Tak anchor Rajiv Dhoundiyal is allegedly seen stating that a leaked intelligence report has issued a warning for the ruling Bharatiya Janata Party (BJP) in Assam. The clip claims that according to this purported report, the BJP may suffer significant losses in the upcoming Assembly elections. Several social media users sharing the video have also claimed that the alleged intelligence report signals the possible removal of Assam Chief Minister Himanta Biswa Sarma from office.

However, an investigation by the CyberPeace Foundation found the viral claim to be false. Our probe clearly established that no leaked intelligence report related to the Assam Assembly elections exists.

Further, Aaj Tak has neither published nor broadcast any such report on its official television channel, website, or social media platforms. The investigation also revealed that the viral video itself is not authentic and has been created using deepfake technology.

Claim

On Facebook, a user shared the viral video claiming that the BJP has been pushed onto the back foot following organisational changes in the Congress, namely the appointment of Priyanka Gandhi Vadra as chairperson of the election screening committee and of Gaurav Gogoi as president of the Assam Pradesh Congress Committee. The post further claims that an Intelligence Bureau report predicts that the current Assam government will not return to power.

(Link to the post, archive link, and screenshots are available.)

Fact Check:

To verify the claim, we first searched for reports related to any alleged leaked intelligence assessment concerning the Assam Assembly elections using relevant keywords. However, no credible or reliable reports supporting the claim were found. We then reviewed Aaj Tak’s official website, social media pages, and YouTube channel. Our examination confirmed that no such news bulletin has been published or broadcast by the network on any of its official platforms.

- https://www.facebook.com/aajtak/?locale=hi_IN

- https://www.instagram.com/aajtak/

- https://x.com/aajtak

- https://www.youtube.com/channel/UCt4t-jeY85JegMlZ-E5UWtA

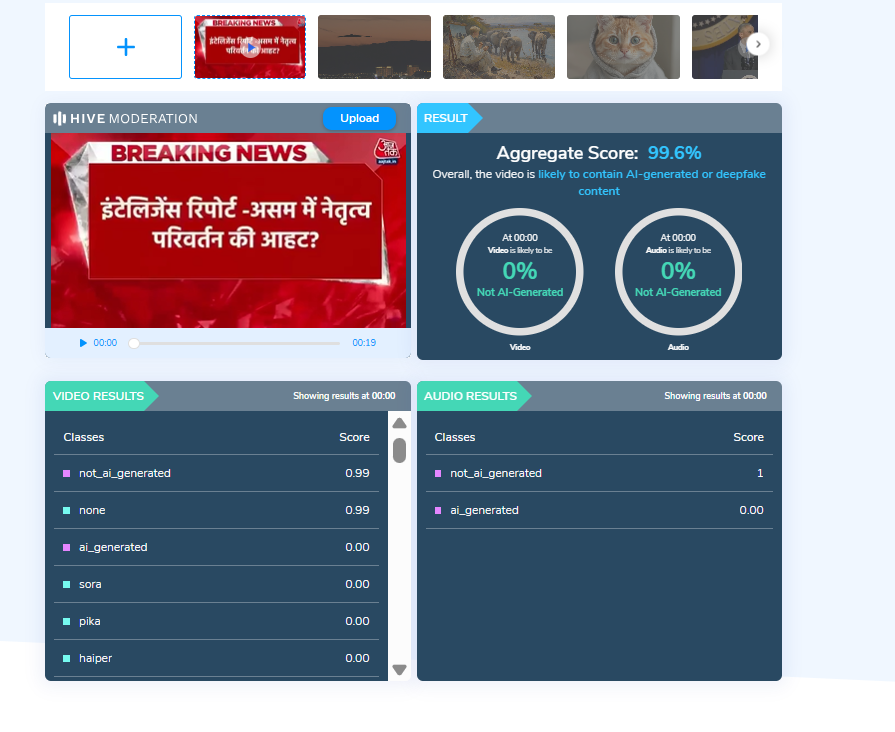

To further verify the authenticity of the video, we scanned its audio using Hive Moderation’s deepfake voice detection tool.

The analysis rated the voice heard in the video as 99 per cent likely to be AI-generated, a clear indication that the audio is not genuine.

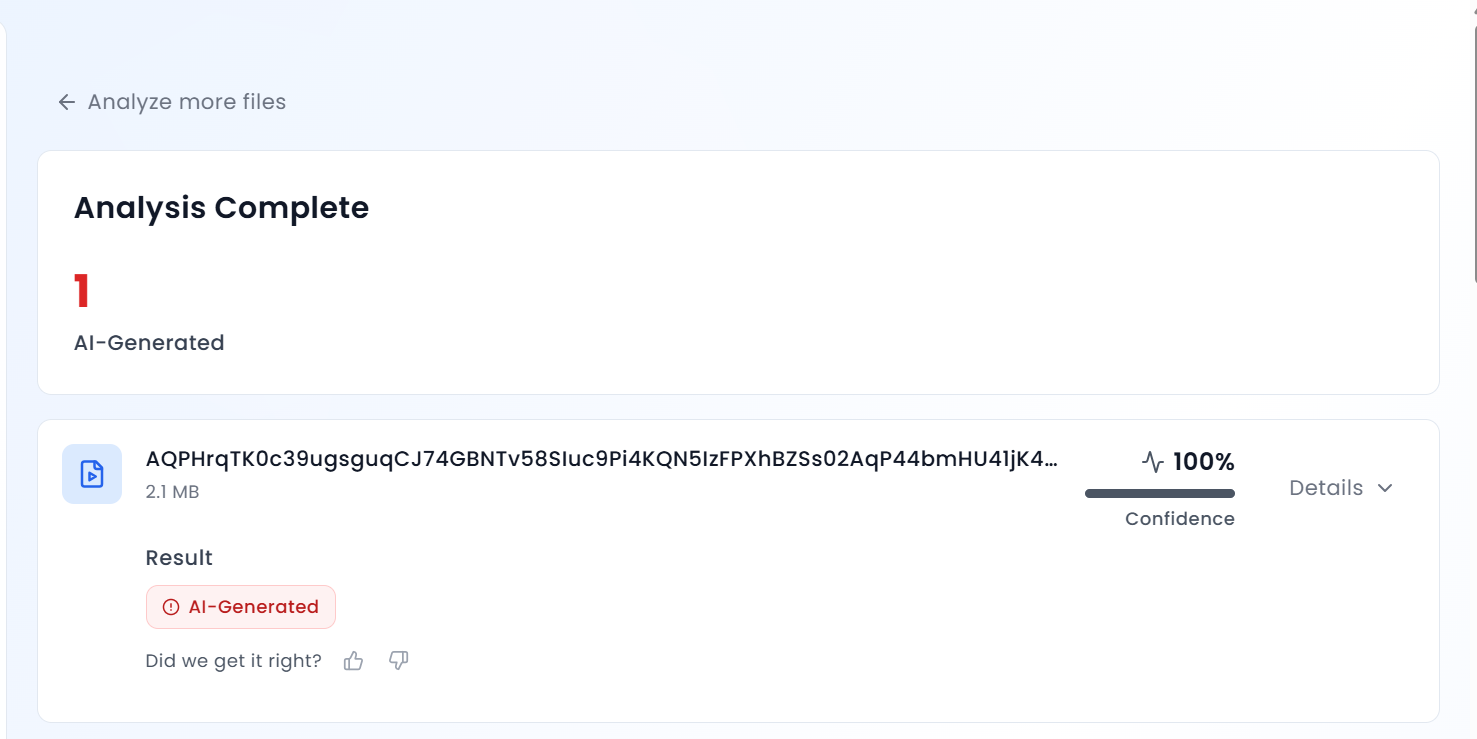

Additionally, the video was analysed using another AI detection tool, Aurigin AI, which also identified the viral clip as AI-generated.

Conclusion:

The investigation clearly establishes that there is no leaked intelligence report predicting the BJP’s defeat in the Assam Assembly elections. Aaj Tak has not published or broadcast any such content on its official platforms. The video circulating on social media is not authentic and has been created using deepfake technology to mislead viewers.

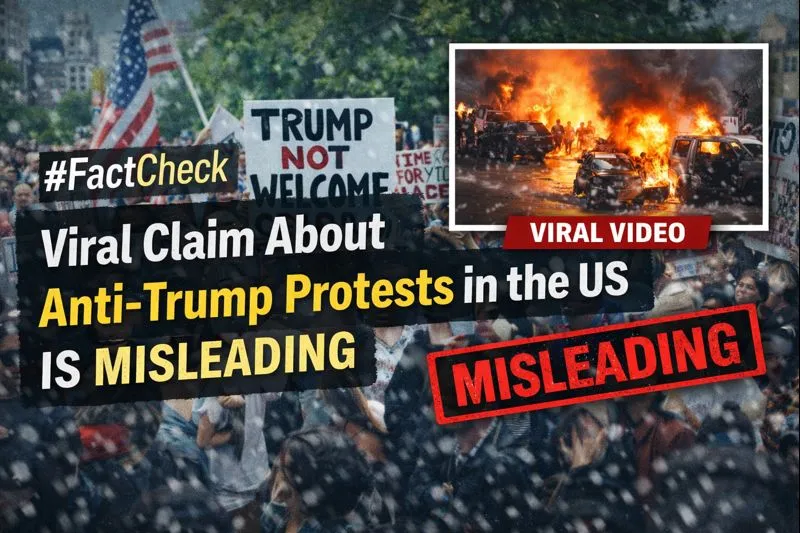

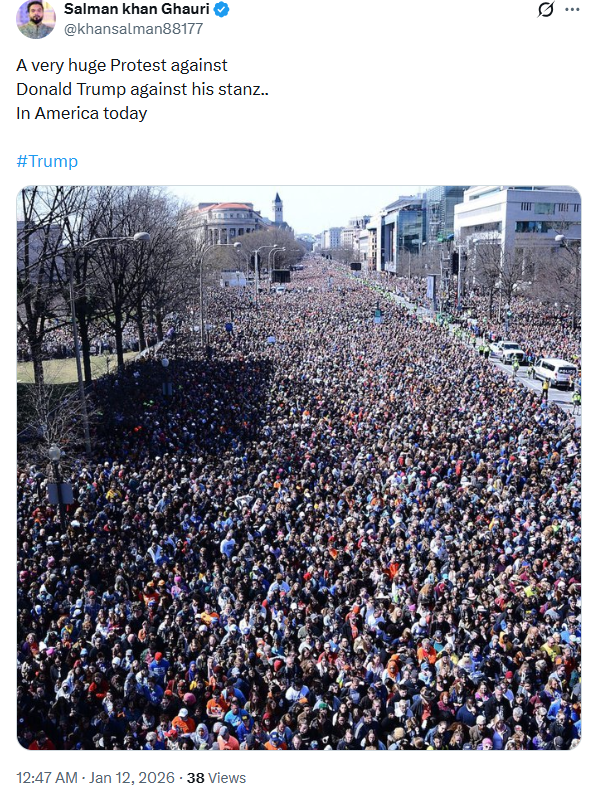

A photograph showing a massive crowd on a road is being widely shared on social media. The image is being circulated with the claim that people in the United States are staging large-scale protests against President Donald Trump.

However, CyberPeace Foundation’s research has found this claim to be misleading. Our fact-check reveals that the viral photograph is nearly eight years old and has been falsely linked to recent political developments.

Claim:

Social media users are sharing a photograph and claiming that it shows people protesting against US President Donald Trump. An X (formerly Twitter) user, Salman Khan Gauri (@khansalman88177), shared the image with the caption: “Today, a massive protest is taking place in America against Donald Trump.”

The post can be viewed here, and its archived version is available here.

Fact Check:

To verify the claim, we conducted a reverse image search of the viral photograph using Google. This led us to a report published by The Mercury News on April 6, 2018.

The report features the same image and states that the photograph was taken on March 24, 2018, during the ‘March for Our Lives’ rally in Washington, DC. The rally was organized to demand stricter gun control laws in the United States. The image shows a large crowd gathered on Pennsylvania Avenue in support of gun reform.

The report further notes that the Associated Press, on March 30, 2018, debunked false claims circulating online which alleged that liberal billionaire George Soros and his organizations had paid protesters $300 each to participate in the rally.

Further research led us to a report published by The Hindu on March 25, 2018, which also carries the same photograph. According to the report, thousands of Americans across the country participated in ‘March for Our Lives’ rallies following a mass shooting at a school in Florida. The protests were led by survivors and victims, demanding stronger gun laws.

The objective of these demonstrations was to break the legislative deadlock that has long hindered efforts to tighten firearm regulations in a country frequently rocked by mass shootings in schools and colleges.

Conclusion

The viral photograph is nearly eight years old and is unrelated to any recent protests against President Donald Trump. The image actually depicts a gun control protest held in 2018 and is being falsely shared with a misleading political claim. By circulating this outdated image in an incorrect context, social media users are spreading misinformation.

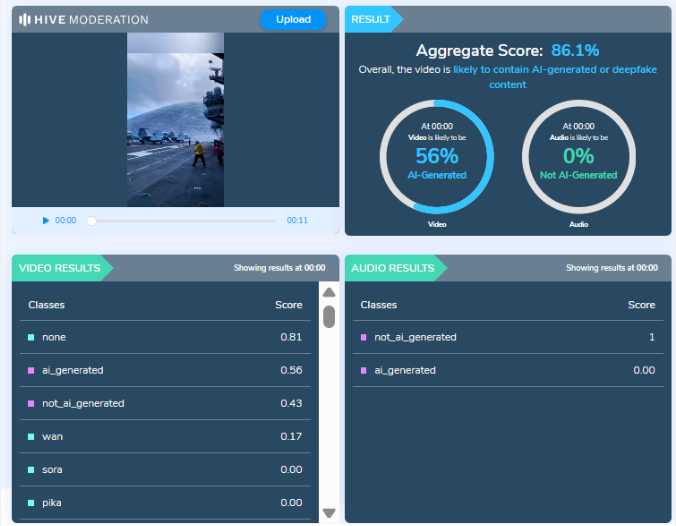

Social media users are widely sharing a video claiming to show an aircraft carrier being destroyed after getting trapped in a massive sea storm. In the viral clip, the aircraft carrier can be seen breaking apart amid violent waves, with users describing the visuals as a “wrath of nature.”

However, CyberPeace Foundation’s research has found this claim to be false. Our fact-check confirms that the viral video does not depict a real incident and has instead been created using Artificial Intelligence (AI).

Claim:

An X (formerly Twitter) user shared the viral video with the caption, “Nature’s wrath captured on camera.” The video shows an aircraft carrier appearing to be devastated by a powerful ocean storm. The post can be viewed here, and its archived version is available here.

https://x.com/Maailah1712/status/2011672435255624090

Fact Check:

At first glance, the visuals shown in the viral video appear highly unrealistic and cinematic, raising suspicion about their authenticity. The exaggerated motion of waves, structural damage to the vessel, and overall animation-like quality suggest that the video may have been digitally generated. To verify this, we analyzed the video using AI detection tools.

The analysis conducted by Hive Moderation, a widely used AI content detection platform, indicates that the video is highly likely to be AI-generated. According to Hive’s assessment, there is nearly a 90 percent probability that the visual content in the video was created using AI.

Conclusion

The viral video claiming to show an aircraft carrier being destroyed in a sea storm is not related to any real incident. It is an AI-generated video that is being falsely shared online as footage of a real natural disaster. By circulating such fabricated visuals without verification, social media users are contributing to the spread of misinformation.

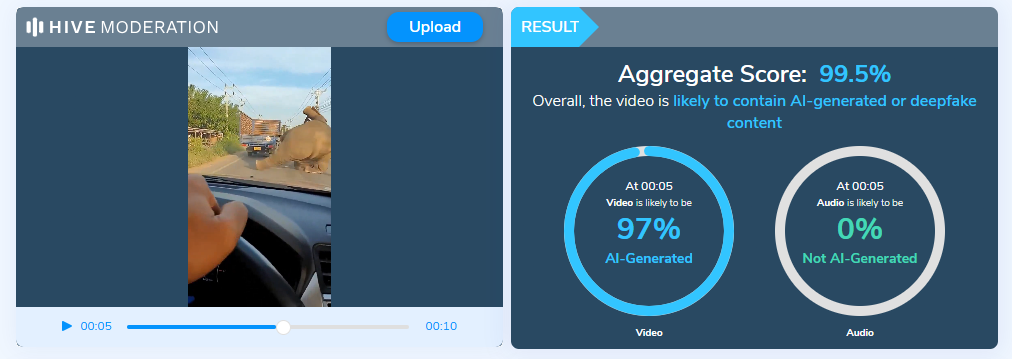

Executive Summary:

A video circulating on social media claims to show a live elephant falling from a moving truck due to improper transportation, after which the animal quickly stands up and reacts on a public road. The content may raise concerns about animal cruelty, public safety, and improper transport practices. A detailed examination using AI content detection tools and visual anomaly analysis indicates that the video is not authentic and is likely AI-generated or digitally manipulated.

Claim:

The viral video (archive link) shows a disturbing scene where a large elephant is allegedly being transported in an open blue truck with barriers for support. As the truck moves along the road, the elephant shifts its weight and the weak side barrier breaks. This causes the elephant to fall onto the road, where it lands heavily on its side. Shortly after, the animal is seen getting back on its feet and reacting in distress, facing the vehicle that is recording the incident. The footage may raise serious concerns about safety, as elephants are normally transported in reinforced containers, and such an incident on a public road could endanger both the animal and people nearby.

Fact Check:

After receiving the video, we closely examined the visuals and noticed some inconsistencies that raised doubts about its authenticity. In particular, the elephant is seen recovering and standing up unnaturally quickly after a severe fall, which does not align with realistic animal behavior or physical response to such impact.

To further verify our observations, the video was analyzed using the Hive Moderation AI Detection tool, which indicated that the content is likely AI generated or digitally manipulated.

Additionally, no credible news reports or official sources were found to corroborate the incident, reinforcing the conclusion that the video is misleading.

Conclusion:

The claim that the video shows a real elephant transport accident is false and misleading. Based on AI detection results, observable visual anomalies, and the absence of credible reporting, the video is highly likely to be AI generated or digitally manipulated. Viewers are advised to exercise caution and verify such sensational content through trusted and authoritative sources before sharing.

- Claim: The viral video shows an elephant allegedly being transported, where a barrier breaks as it moves, causing the animal to fall onto the road before quickly getting back on its feet.

- Claimed On: X (formerly Twitter)

- Fact Check: False and Misleading

Executive Summary:

A viral video circulating on social media shows a man attempting to fly using a helicopter-like fan attached to his body, followed by a crash onto a parked car. The clip was widely shared with humorous captions suggesting that it depicts a real-life incident. Given the unusual nature of the visuals, the video was subjected to technical verification using AI content detection tools. Analysis using the Hive Moderation AI detection platform indicates that the video is AI-generated and not a genuine real-world event.

Claim:

A viral video (archive link) claims to show a person attempting to fly using a self-made helicopter fan mechanism, briefly lifting off before crashing onto a car in a public setting. In the video, a man straps a helicopter-like rotating fan to himself, essentially trying to imitate a human helicopter with a DIY mechanism. For a brief moment it appears as if the device might work, but the attempt quickly fails due to a lack of control, engineering support, and safety measures. Within seconds, the man loses balance and crashes down, landing on top of a parked car. The scene portrays a mix of overconfidence, unregulated experimentation, and risk-taking in a public space, with bystanders watching rather than intervening. The clip is shared humorously with the caption “India is not for beginners”.

Fact Check:

To verify the authenticity of the video, we analyzed it using the Hive Moderation AI detection tool, a widely used platform for identifying synthetic and AI-generated media. The tool flagged the video with a high probability of AI generation, indicating that the visuals were not captured from a real physical event. Additional indicators, such as unrealistic motion physics and inconsistent interaction between the man and the objects around him, further support the conclusion that the clip was artificially generated or heavily manipulated using generative AI techniques. No credible news reports or independent eyewitness sources corroborate the occurrence of such an incident.

Conclusion:

The claim that the video shows an individual attempting and failing to fly using a helicopter-like device is false. Technical analysis confirms that the video is AI-generated, and it should be treated as synthetic or fictional content rather than a real-life incident. This case highlights how AI-generated videos, when shared without context, can mislead audiences and be mistaken for real events, reinforcing the need for verification tools and critical evaluation of viral content.

- Claim: A viral video claims to show a person attempting to fly using a self-made helicopter fan mechanism, briefly lifting off before crashing onto a car in a public setting.

- Claimed On: X (formerly Twitter)

- Fact Check: False and Misleading


Executive Summary:

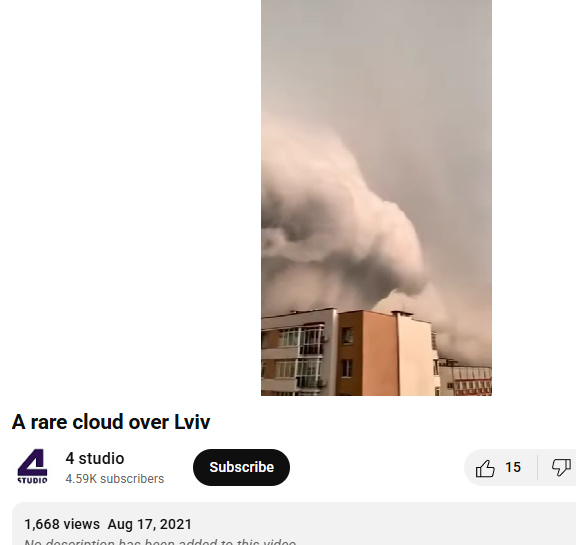

A viral video claims to show a massive cumulonimbus cloud over Gurugram, Haryana, and Delhi NCR on 3rd September 2025. However, our research reveals the claim is misleading. A reverse image search traced the visuals to Lviv, Ukraine, dating back to August 2021. The footage matches earlier reports and was even covered by the Ukrainian news outlet 24 Kanal, which published the story under the headline “Lviv Covered by Unique Thundercloud: Amazing Video”. Thus, the viral claim linking the phenomenon to a recent event in India is false.

Claim:

A viral video circulating on social media claims to show a massive cloud formation over Gurugram, Haryana, and the Delhi NCR region on 3rd September 2025. The cloud appears to be a cumulonimbus formation, which is typically associated with heavy rainfall, thunderstorms, and severe weather conditions.

Fact Check:

After conducting a reverse image search on key frames of the viral video, we found matching visuals from videos that attribute the phenomenon to Lviv, a city in Ukraine. These videos date back to August 2021, thereby debunking the claim that the footage depicts a recent weather event over Gurugram, Haryana, or the Delhi NCR region.

Further research revealed that the Ukrainian news channel 24 Kanal had reported on the Lviv thundercloud phenomenon in August 2021. The report was published under the headline “Lviv Covered by Unique Thundercloud: Amazing Video” (original in Russian, translated into English).

Conclusion:

The viral video does not depict a recent weather event in Gurugram or Delhi NCR, but rather an old incident from Lviv, Ukraine, recorded in August 2021. Verified sources, including Ukrainian media coverage, confirm this. Hence, the circulating claim is misleading and false.

- Claim: Old thundercloud video from Lviv, Ukraine (2021) falsely linked to Delhi NCR, Gurugram, and Haryana.

- Claimed On: Social Media

- Fact Check: False and Misleading.

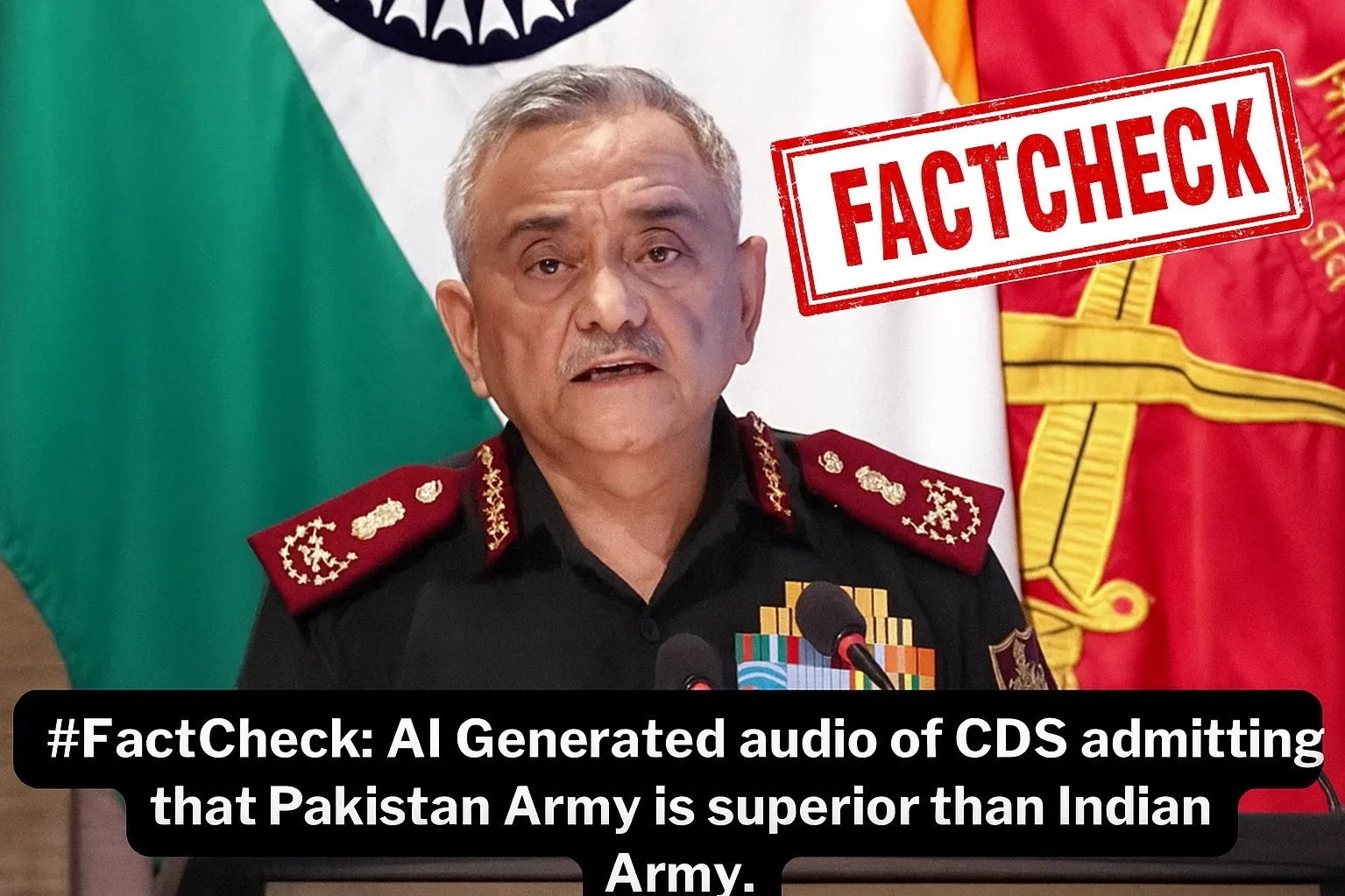

Executive Summary:

A viral social media claim alleges that India’s Chief of Defence Staff (CDS), General Anil Chauhan, praised Pakistan’s Army as superior during “Operation Sindoor.” Fact-checking confirms the claim is false. The original video, available on The Hindu’s official channel, shows General Chauhan inaugurating Ran-Samwad 2025 in Mhow, Madhya Pradesh. At the 1:22:12 mark, the genuine segment appears, proving the viral clip was altered. Additionally, analysis using Hiya AI Audio identified voice manipulation, flagging the segment as a deepfake with an authenticity score of 1/100. The fabricated statement was: “never mess with Pakistan because their army appears to be far more superior.” Thus, the viral video is doctored and misleading.

Claim:

A viral post on social media (archived link) falsely claims that India’s Chief of Defence Staff (CDS), General Anil Chauhan, described Pakistan’s Army as superior and more advanced during Operation Sindoor.

Fact Check:

After performing a reverse image search, we found the full video on the official channel of The Hindu, in which Chief of Defence Staff Anil Chauhan inaugurates ‘Ran-Samwad’ 2025 in Mhow, Madhya Pradesh.

At the 1:22:12 timestamp, the full video shows the original, unaltered version of the segment that was manipulated in the viral clip.

In addition, the Hiya AI Audio tool showed that the voice had been manipulated in this specific segment of the video. The tool flagged the audio as a deepfake with an authenticity score of 1/100 and identified the deepfake statement as: “was to never mess with Pakistan because their army appears to be far more superior”.

Conclusion:

The viral video attributing remarks to CDS General Anil Chauhan about Pakistan’s Army being “superior” is fabricated. The original footage from The Hindu confirms no such statement was made, while forensic analysis using Hiya AI Audio detected clear voice manipulation, identifying the clip as a deepfake with minimal authenticity. Hence, the claim is baseless, misleading, and an attempt to spread disinformation.

- Claim: AI-generated audio of the CDS admitting that the Pakistan Army is superior to the Indian Army.

- Claimed On: Social Media

- Fact Check: False and Misleading

Executive Summary:

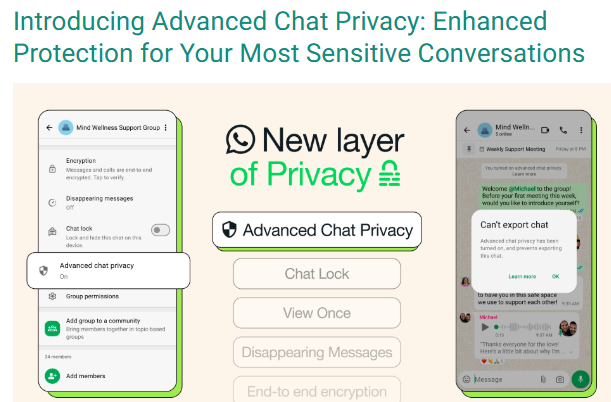

A viral social media video falsely claims that Meta AI reads all WhatsApp group and individual chats by default, and that enabling “Advanced Chat Privacy” can stop this. A reverse image search led us to a WhatsApp blog post from April 2025, which states that all personal and group chats remain protected with end-to-end (E2E) encryption and are accessible only to the sender and recipient. Meta AI can interact only with messages explicitly sent to it or tagged with @Meta AI. The “Advanced Chat Privacy” feature is designed to prevent external sharing of chats, not to restrict Meta AI access. The viral claim is therefore misleading and factually incorrect, and it creates unnecessary fear among users.

Claim:

A viral social media video [archived link] alleges that Meta AI is actively accessing private conversations on WhatsApp, including both group and individual chats, due to the current default settings. The video further claims that users can safeguard their privacy by enabling the “Advanced Chat Privacy” feature, which purportedly prevents such access.

Fact Check:

A reverse image search on a keyframe from the viral video led us to a WhatsApp blog post from April 2025 that explains new privacy features to help users control their chats and data. It states that Meta AI can see only messages sent directly to it or tagged with @Meta AI. All personal and group chats are secured with end-to-end encryption, so only the sender and receiver can read them. The "Advanced Chat Privacy" setting helps stop chats from being shared outside WhatsApp, for example by blocking exports or auto-downloads, but it does not change Meta AI’s access, since Meta AI is already unable to read chats. This shows that the viral claim is false and meant to confuse people.

Conclusion:

The claim that Meta AI is reading WhatsApp group chats and that enabling the "Advanced Chat Privacy" setting can prevent this is false and misleading. WhatsApp has officially confirmed that Meta AI accesses only messages explicitly shared with it, and all chats remain protected by end-to-end encryption. The "Advanced Chat Privacy" setting does not relate to Meta AI access, which is already restricted by default.

- Claim: Viral social media video claims that WhatsApp group chats are being read by Meta AI due to current settings, and that enabling the "Advanced Chat Privacy" setting can prevent this.

- Claimed On: Social Media

- Fact Check: False and Misleading

Executive Summary:

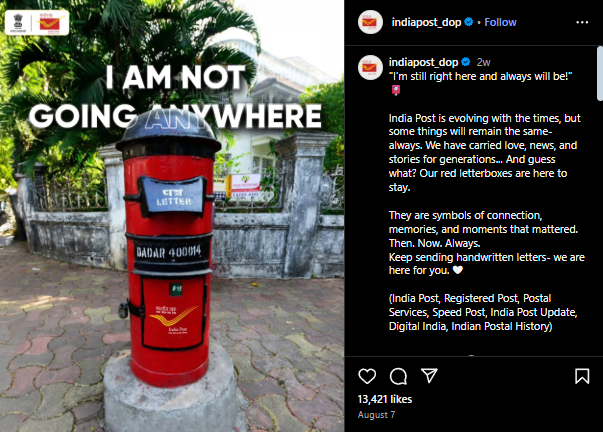

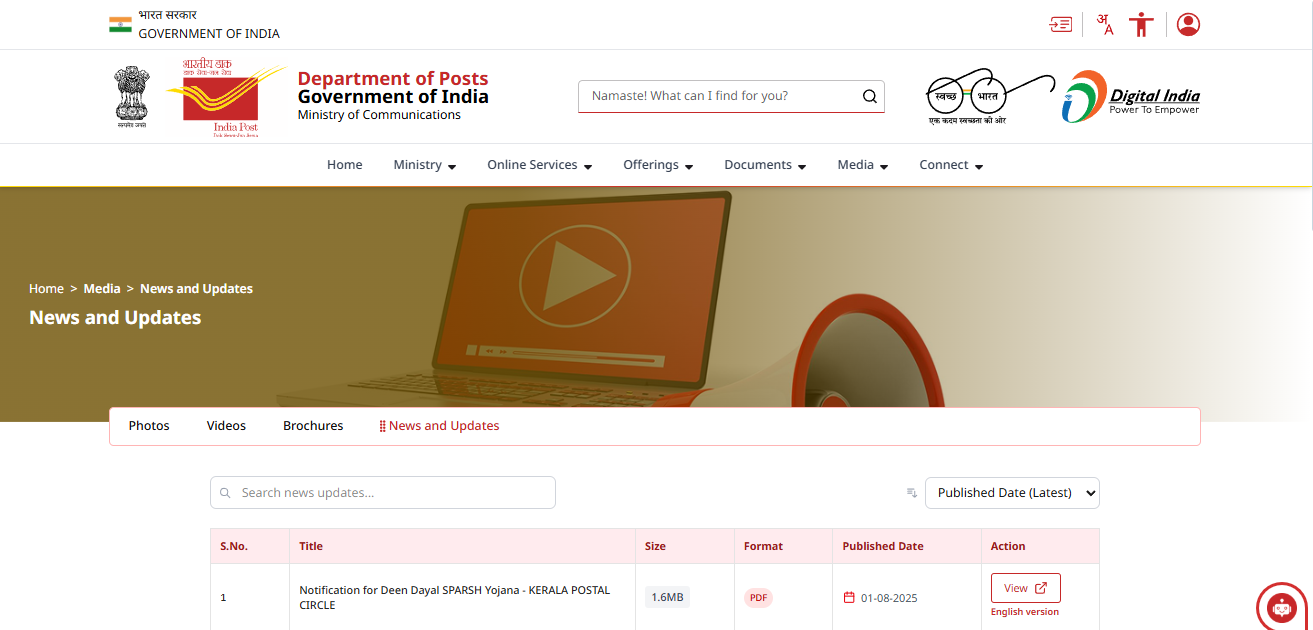

A viral social media claim suggested that India Post would discontinue all red post boxes across the country from 1 September 2025, attributing the move to the government’s Digital India initiative. However, fact-checking revealed this claim to be false. India Post’s official X (formerly Twitter) and Instagram handles clarified on 7 August 2025 that red letterboxes remain operational, calling them timeless symbols of connection and memories. No official notice or notification regarding their discontinuation exists on the Department of Posts’ website. This indicates the viral posts were misleading and aimed at creating confusion among the public.

Claim:

A claim is circulating on social media stating that India Post will discontinue all red post boxes across the country effective 1 September 2025. According to the viral posts [archived link], the move is being linked to the government’s push towards Digital India, suggesting that traditional post boxes have lost their relevance in the digital era.

Fact Check:

After conducting a reverse image search, we found that India Post’s official X handle had clarified, in a post dated 7 August 2025, that the viral claim was incorrect and misleading. The post was shared with the caption:

“I’m still right here and always will be!”

India Post is evolving with the times, but some things will remain the same- always. We have carried love, news, and stories for generations... And guess what? Our red letterboxes are here to stay.

They are symbols of connection, memories, and moments that mattered. Then. Now. Always.

Keep sending handwritten letters- we are here for you.

This directly refutes the viral claim about the discontinuation of the red post box from 1 September 2025. A similar clarification was also posted on the official Instagram handle @indiapost_dop on the same date.

Furthermore, a thorough review of the official website of the Department of Posts, Government of India, found no notice or mention of any plan to discontinue the red post boxes. This absence of any official communication further indicates that the viral claim is a baseless and misleading rumour.

Conclusion:

The claim about the discontinuation of red post boxes from 1 September 2025 is false and misleading. India Post has officially confirmed that the iconic red letterboxes will continue to function as before and remain an integral part of India’s postal services.

- Claim: A viral claim suggests that India Post will remove all red letter boxes across the country beginning 1 September 2025.

- Claimed On: Social Media

- Fact Check: False and Misleading

Executive Summary:

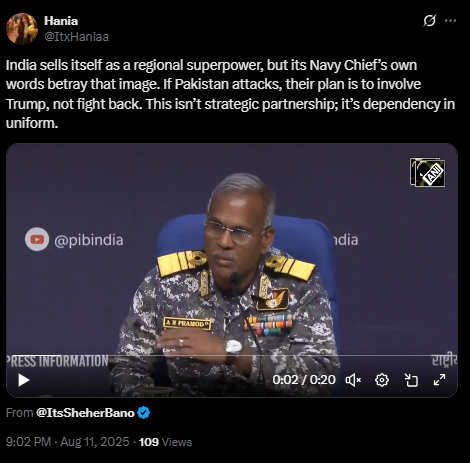

A viral video (archived link) circulating on social media claims that Vice Admiral AN Pramod stated India would seek assistance from the United States and President Trump if Pakistan launched an attack, portraying India as dependent rather than self-reliant. Our research traced the extended footage to the Press Information Bureau’s official YouTube channel, where it was published on 11 May 2025. In the authentic video, the Vice Admiral makes no such remark and instead concludes his statement with, “That’s all.” Further analysis using the Hive Moderation AI detection tool indicated that the viral clip was digitally manipulated with AI-generated audio that misrepresents his actual words.

Claim:

An X user posted the viral video with the caption:

“India sells itself as a regional superpower, but its Navy Chief’s own words betray that image. If Pakistan attacks, their plan is to involve Trump, not fight back. This isn’t strategic partnership; it’s dependency in uniform”.

In the video, the Vice Admiral is heard saying:

“We have worked out among three services, this time if Pakistan dares take any action, and Pakistan knows it, what we are going to do. We will complain against Pakistan to the United States of America and President Trump, like we did earlier in Operation Sindoor.”

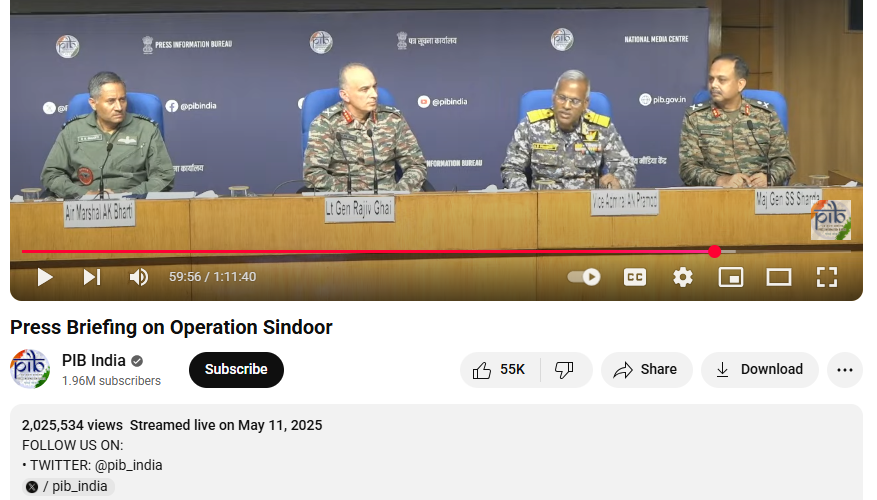

Fact Check:

Upon conducting a reverse image search on key frames from the video, we located the full version of the video on the official YouTube channel of the Press Information Bureau (PIB), published on 11 May 2025. In this video, at the 59:57 mark, the Vice Admiral can be heard saying:

“This time if Pakistan dares take any action, and Pakistan knows it, what we are going to do. That’s all.”

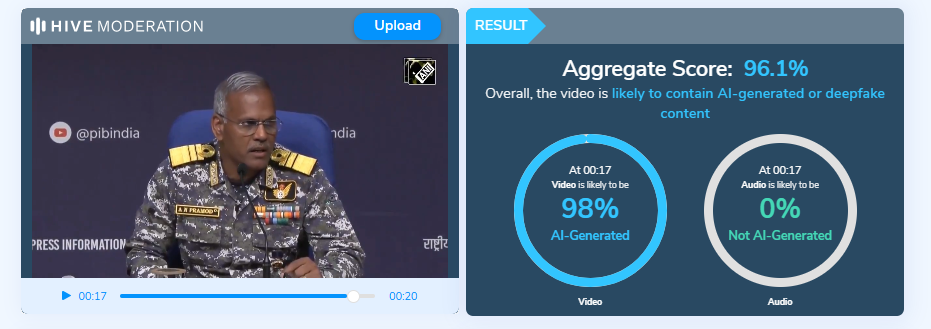

Further analysis was conducted using the Hive Moderation tool to examine the authenticity of the circulating clip. The results indicated that the video had been artificially generated, with clear signs of AI manipulation. This suggests that the content was not genuine but rather created with the intent to mislead viewers and spread misinformation.

Conclusion:

The viral video attributing remarks to Vice Admiral AN Pramod about India seeking U.S. and President Trump’s intervention against Pakistan is misleading. The extended speech, available on the Press Information Bureau’s official YouTube channel, contained no such statement. Instead of the alleged claim, the Vice Admiral concluded his comments by saying, “That’s all.” AI analysis using Hive Moderation further indicated that the viral clip had been artificially manipulated, with fabricated audio inserted to misrepresent his words. These findings confirm that the video is altered and does not reflect the Vice Admiral’s actual remarks.

- Claim: Fake viral video claiming that Vice Admiral AN Pramod said India would complain to the US and President Trump if Pakistan attacked again.

- Claimed On: Social Media

- Fact Check: False and Misleading