#FactCheck: Viral image shows the Maldives mocking India with a "SURRENDER" sign on photo of Prime Minister Narendra Modi

Executive Summary:

A photo of a Maldivian building, allegedly showing an oversized portrait of Indian Prime Minister Narendra Modi with the word "SURRENDER", went viral on social media. People responded with fear, indignation, and anxiety. Our research, however, showed that the image was manipulated and not authentic.

Claim:

A viral image claims that the Maldives displayed a huge portrait of PM Narendra Modi on a building front, along with the phrase “SURRENDER,” implying an act of national humiliation or submission.

Fact Check:

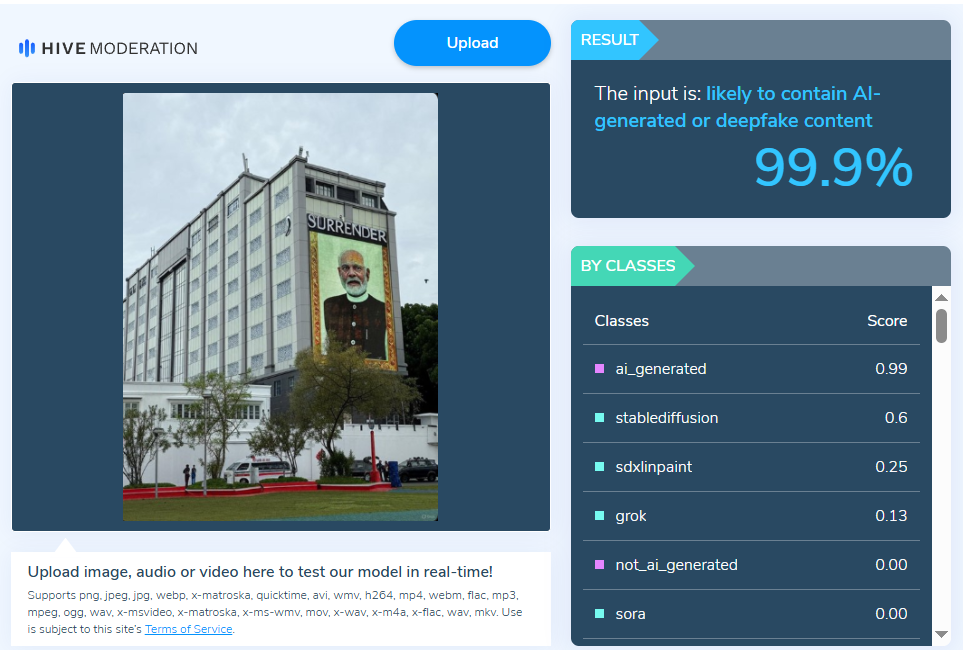

After a thorough examination of the viral post, we found that it had been altered. While the image shows the same building, the claim that it displayed Prime Minister Modi’s portrait together with the word “SURRENDER”, as in the viral version, is wrong. We also checked the image with the Hive AI Detector, which marked it as 99.9% fake. This further confirmed that the viral image had been digitally altered.

During our research, we also found several images from Prime Minister Modi’s visit, including one of the same building displaying his portrait, shared by the official X handle of the Maldives National Defence Force (MNDF). The post read: “His Excellency Prime Minister Shri @narendramodi was warmly welcomed by His Excellency President Dr.@MMuizzu at Republic Square, where he was honored with a Guard of Honor by #MNDF on his state visit to Maldives.” This image, captured from a different angle, also does not feature the word “surrender.”

Conclusion:

The claim that the Maldives showed a picture of PM Modi with a surrender message is incorrect and misleading. The image is altered and is being spread to mislead people and stir up controversy. Users should check the authenticity of photos before sharing.

- Claim: Viral image shows the Maldives mocking India with a surrender sign

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs


Introduction

The Artificial Intelligence Consumer Opt-in, Notification Standards, and Ethical Norms for Training (AI Consent) bill, introduced in the United States Senate on 19 March 2024, would require online platforms to obtain consumer consent before using their data for Artificial Intelligence (AI) model training. If a company fails to obtain this consent, it would be considered a deceptive or unfair practice and result in enforcement action from the Federal Trade Commission (FTC). The legislation aims to strengthen consumer protection and give Americans the power to determine how their data is used by online platforms.

The proposed bill also seeks to create standards for disclosures, including requiring platforms to provide instructions to consumers on how they can affirm or rescind their consent. The option to grant or revoke consent should be made available at any time through an accessible and easily navigable mechanism, and the selection to withhold or reverse consent must be at least as prominent as the option to accept while taking the same number of steps or fewer as the option to accept.
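The parity requirement described above (withholding consent must be at least as prominent as accepting, and take the same number of steps or fewer) can be expressed as a simple check. The following is a minimal sketch, not anything prescribed by the bill: the `ConsentOption` class, the `prominence` score, and the step counts are hypothetical names and values chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsentOption:
    """One choice in a consent dialog (hypothetical model, not from the bill)."""
    label: str
    steps: int        # number of user actions needed to complete this choice
    prominence: int   # illustrative visual-weight score; higher is more prominent


def decline_is_fair(accept: ConsentOption, decline: ConsentOption) -> bool:
    """Return True when declining is at least as prominent as accepting
    and takes the same number of steps or fewer."""
    return decline.prominence >= accept.prominence and decline.steps <= accept.steps


accept = ConsentOption("Allow AI training", steps=1, prominence=3)
buried_decline = ConsentOption("Manage settings", steps=4, prominence=1)
equal_decline = ConsentOption("Do not allow", steps=1, prominence=3)

print(decline_is_fair(accept, buried_decline))  # False
print(decline_is_fair(accept, equal_decline))   # True
```

How prominence would actually be measured is left open here; a real assessment would depend on whatever standards the FTC eventually sets.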

The AI Consent bill directs the FTC to implement regulations to improve transparency by requiring companies to disclose when the data of individuals will be used to train AI and to obtain consumer opt-in to this use. The bill also commissions an FTC report on the technical feasibility of de-identifying data, given the rapid advancements in AI technologies, evaluating potential measures companies could take to effectively de-identify user data.

The definition of ‘Artificial Intelligence System’ under the proposed bill

ARTIFICIAL INTELLIGENCE SYSTEM: The term “artificial intelligence system” means a machine-based system that:

1. Is capable of influencing the environment by producing an output, including predictions, recommendations or decisions, for a given set of objectives; and

2. Uses machine or human-based data and inputs to:

(i) Perceive real or virtual environments;

(ii) Abstract these perceptions into models through analysis in an automated manner (such as by using machine learning) or manually; and

(iii) Use model inference to formulate options for outcomes.

Importance of the proposed AI Consent Bill USA

1. Consumer Data Protection: The AI Consent bill primarily upholds the privacy rights of an individual. By requiring consent from the consumer before data is used for AI training, the bill aims to give individuals unfettered autonomy over the use of their personal information. The scope of the bill aligns with the greater objective of data protection laws globally, stressing the criticality of privacy rights and autonomy.

2. Prohibition Measures: The proposed bill intends to prohibit covered entities from exploiting the data of consumers for training purposes without their consent. This prohibition extends to the sale of data, transfer to third parties and usage. Such measures aim to prevent data misuse and exploitation of personal information. The bill aims to ensure that companies do not leverage consumer information for the development of AI without a transparent process of consent.

3. Transparent Consent Procedures: The bill calls for clear and conspicuous disclosures to be provided by the companies for the intended use of consumer data for AI training. The entities must provide a comprehensive explanation of data processing and its implications for consumers. The transparency fostered by the proposed bill allows consumers to make sound decisions about their data and its management, hence nurturing a sense of accountability and trust in data-driven practices.

4. Regulatory Compliance: The bill's guidelines set strict requirements for procuring the consent of an individual. The entities must follow a prescribed mechanism for consent solicitation, making the process streamlined and accessible for consumers. Moreover, the acquisition of consent must be independent, i.e. separate from terms of service and other contractual obligations. These provisions underscore the importance of active and informed consent in data processing activities, reinforcing the principles of data protection and privacy.

5. Enforcement and Oversight: To enforce compliance with the provisions of the bill, robust mechanisms for oversight and enforcement are established. Violations of the prescribed regulations are treated as unfair or deceptive acts under its provisions, empowering regulatory bodies like the FTC to ensure adherence to data privacy standards. By holding covered entities accountable for compliance, the bill fosters a culture of accountability and responsibility in data handling practices, thereby enhancing consumer trust and confidence in the digital ecosystem.

Importance of Data Anonymization

Data anonymisation is the process of concealing or removing personal or private information from a data set to safeguard the privacy of the individual associated with it. It is a form of information sanitisation in which anonymisation techniques encrypt or delete personally identifying information from datasets to protect the data privacy of the subject. This reduces the danger of unintentional exposure during information transfer across borders and allows for easier assessment and analytics after anonymisation. When personal information is compromised, the organisation suffers not just a security breach but also a breach of confidence from the client or consumer. Such breaches can result in a wide range of privacy infractions, including breach of contract, discrimination, and identity theft.

The AI consent bill asks the FTC to study data de-identification methods. Data anonymisation is critical to improving privacy protection since it reduces the danger of re-identification and unauthorised access to personal information. Regulatory bodies can increase privacy safeguards and reduce privacy risks connected with data processing operations by investigating and perhaps implementing anonymisation procedures.
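To make the idea of removing or concealing identifying information concrete, here is a minimal sketch of one possible de-identification step. It is an illustration under stated assumptions, not a method named in the bill: the field names in `DIRECT_IDENTIFIERS`, the `deidentify` function, and the sample record are all hypothetical. Note that replacing an identifier with a keyed hash is, strictly speaking, pseudonymisation; the remaining fields may still allow re-identification when combined with other data.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def deidentify(record: dict, secret_key: bytes, id_field: str = "user_id") -> dict:
    """Return a copy of the record with direct identifiers deleted and the
    ID field replaced by a keyed SHA-256 hash (HMAC)."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if id_field in cleaned:
        digest = hmac.new(secret_key, str(cleaned[id_field]).encode("utf-8"), hashlib.sha256)
        cleaned[id_field] = digest.hexdigest()
    return cleaned


record = {
    "user_id": 1042,
    "name": "A. Sharma",
    "email": "a.sharma@example.com",
    "city": "Pune",
    "plan": "premium",
}
safe = deidentify(record, secret_key=b"demo-key-not-for-production")

print(sorted(safe))  # ['city', 'plan', 'user_id']
```

The keyed hash keeps records for the same person linkable for analytics while hiding the raw identifier; whoever holds the key can still re-link them, so the key needs the same protection as the original data.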

The AI consent bill emphasises de-identification methods. India's DPDP Act 2023, while not specifically addressing data de-identification, emphasises data minimisation principles, which points to a potential future focus on data anonymisation processes or techniques in India.

Conclusion

The proposed AI Consent bill in the US represents a significant step towards enhancing consumer privacy rights and data protection in the context of AI development. Through its stringent prohibitions, transparent consent procedures, regulatory compliance measures, and robust enforcement mechanisms, the bill strives to strike a balance between fostering innovation in AI technologies and safeguarding the privacy and autonomy of individuals.

References:

- https://fedscoop.com/consumer-data-consent-training-ai-models-senate-bill/#:~:text=%E2%80%9CThe%20AI%20CONSENT%20Act%20gives,Welch%20said%20in%20a%20statement

- https://www.dataguidance.com/news/usa-bill-ai-consent-act-introduced-house#:~:text=USA%3A%20Bill%20for%20the%20AI%20Consent%20Act%20introduced%20to%20House%20of%20Representatives,-ConsentPrivacy%20Law&text=On%20March%2019%2C%202024%2C%20US,the%20U.S.%20House%20of%20Representatives

- https://datenrecht.ch/en/usa-ai-consent-act-vorgeschlagen/

- https://www.lujan.senate.gov/newsroom/press-releases/lujan-welch-introduce-billto-require-online-platforms-receive-consumers-consent-before-using-their-personal-data-to-train-ai-models/

Introduction

Under the new version of the Digital Personal Data Protection (DPDP) Bill, appeals are to be heard by the Telecom Disputes Settlement and Appellate Tribunal (TDSAT). The key changes concern the removal of deemed consent, the appellate mechanism, delegated legislation, and data breaches. Among the other changes, the Digital Personal Data Protection Bill 2023 will now provide a negative list of countries to which data cannot be transferred.

New Version of the DPDP Bill

The Digital Personal Data Protection Bill has a new version, which makes three major changes to the 2022 draft. First, the new version proposes that there shall be no deemed consent under the bill and that personal data should be processed for limited uses only. Deemed consent would amount to consent for the processing of data for any purpose, which is why it is to be removed.

- In the interest of the sovereignty and integrity of India and national security

- For the issue of subsidies, benefits, services, certificates, licenses, permits, etc

- To comply with any judgment or order under the law

- To protect, assist, or provide service in a medical or health emergency, a disaster situation, or to maintain public order

- In relation to an employee and his/her rights

The 2023 version now includes an appeals mechanism

The new version states that the Board will have the authority to issue directives for data breach remediation or mitigation, investigate data breaches and complaints, and levy financial penalties. It would be authorised to refer complaints to alternative dispute resolution, accept voluntary undertakings from data fiduciaries, and advise the government to block a data fiduciary’s website, app, or other online presence if the terms of the law were repeatedly violated. The Telecom Disputes Settlement and Appellate Tribunal will hear any appeals.

The other change concerns delegated legislation, as one of the criticisms of the 2022 version of the bill was that it gave the government extensive rule-making powers. The committee also raised the same concern with the ministry. The committee wants the provisions that cannot be fully defined within the scope of the bill to be addressed.

The other major change in the new version of the bill concerns data breaches: there will be no compensation for a data breach. This raises a significant concern for victims. If a victim suffers a data breach and approaches the relevant court or authority, they will not be awarded compensation for the loss suffered due to the breach.

Need for changes under DPDP

Digital personal data protection needs these changes, starting with deemed consent. Simply put, by ‘deeming’ consent for subsequent uses, your data may be used for purposes other than those for which it was provided, and since there is no provision requiring that you be informed of this through mandatory notice, you may never even come to know about it.

Conclusion

The 2022 draft of the Digital Personal Data Protection Bill requires changes to meet the needs of the evolving digital landscape. The removal of deemed consent will ultimately protect the data of the data principal, whose data will be used or processed only for the purpose for which consent is given. The change in the appellate mechanism is also crucial, as it meets the requirement of addressing appeals. However, the absence of compensation for a data breach is detrimental to the interests of a victim who has suffered one.

Introduction

India's Computer Emergency Response Team (CERT-In) has unfurled its banner of digital hygiene, heralding the initiative 'Cyber Swachhta Pakhwada,' a clarion call to the nation's citizens to fortify their devices against the insidious botnet scourge. The government's Cyber Swachhta Kendra (CSK)—a Botnet Cleaning and Malware Analysis Centre—stands as a bulwark in this ongoing struggle. It is a digital fortress, conceived under the aegis of the National Cyber Security Policy, with a singular vision: to engender a secure cyber ecosystem within India's borders. The CSK's mandate is clear and compelling—to detect botnet infections within the subcontinent and to notify, enable cleaning, and secure systems of end users to stymie further infections.

What are Bots?

Bots are automated rogue software programs crafted with malevolent intent, lurking in the shadows of the internet. They are the harbingers of harm, capable of data theft, disseminating malware, and orchestrating cyberattacks, among other digital depredations.

A botnet infection is like a parasitic infestation within the electronic sinews of our devices—smartphones, computers, tablets—transforming them into unwitting soldiers in a hacker's malevolent legion. Once ensnared within the botnet's web, these devices become conduits for a plethora of malicious activities: the dissemination of spam, the obstruction of communications, and the pilfering of sensitive information such as banking details and personal credentials.

How, then, does one's device fall prey to such a fate? The vectors are manifold: an infected email attachment opened in a moment of incaution, a malicious link clicked in haste, a file downloaded from the murky depths of an untrusted source, or the use of an unsecured public Wi-Fi network. Each action can be the key that unlocks the door to digital perdition.

In an era where malware attacks and scams proliferate like a plague, the security of our personal devices has ascended to a paramount concern. To address this exigency and to aid individuals in the fortification of their smartphones, the Department of Telecommunications (DoT) has unfurled a suite of free bot removal tools. The government's outreach extends into the ether, dispatching SMS notifications to the populace and disseminating awareness of these digital prophylactics.

Stay Cyber Safe

The SMS reads: "To protect your device from botnet infections and malware, the Government of India, through CERT-In, recommends downloading the 'Free Bot Removal Tool' at csk.gov.in." This SMS is not merely a reminder but a beacon guiding users to a safe harbor in the tumultuous seas of cyberspace.

Cyber Swachhta Kendra

The Cyber Swachhta Kendra portal emerges as an oasis in the desert of digital threats, offering free malware detection tools to the vigilant netizen. This portal, also known as the Botnet Cleaning and Malware Analysis Centre, operates in concert with Internet Service Providers (ISPs) and antivirus companies, under the stewardship of CERT-In. It is a repository of knowledge and tools, a digital armoury where users can arm themselves against the specters of botnet infection.

To extricate your device from the clutches of a botnet or to purge the bots and malware that may lurk within, one must embark on a journey to the CSK website. There, under the 'Security Tools' tab, lies the arsenal of antivirus companies, each offering their own bot removal tool. For Windows users, the choice includes stalwarts such as eScan Antivirus, K7 Security, and Quick Heal. Android users, meanwhile, can venture to the Google Play Store and seek out the 'eScan CERT-IN Bot Removal' tool or 'M-Kavach2,' a digital shield forged by C-DAC Hyderabad.

Once the chosen app is ensconced within your device, it will commence its silent vigil, scanning the digital sinews for any trace of malware, excising any infections with surgical precision. But the CSK portal's offerings extend beyond mere bot removal tools; it also proffers other security applications such as 'USB Pratirodh' and 'AppSamvid.' These tools are not mere utilities but sentinels standing guard over the sanctity of our digital lives.

USB Pratirodh and AppSamvid

'USB Pratirodh' is a desktop guardian, regulating the ingress and egress of removable storage media. It demands authentication with each new connection, scanning for malware, encrypting data, and allowing changes to read/write permissions. 'AppSamvid,' on the other hand, is a gatekeeper for Windows users, permitting only trusted executables and Java files to run, safeguarding the system from the myriad threats that lurk in the digital shadows.
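The gatekeeping idea behind permitting only trusted executables to run is generally known as application allowlisting. The following is a minimal sketch of that general idea, assuming trusted files are recognised by their SHA-256 hash. How AppSamvid itself identifies trusted files is not described here, so the hash-based matching, the function names `sha256_of` and `may_run`, and the sample files are all assumptions made for illustration.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def may_run(path: Path, trusted_hashes: set[str]) -> bool:
    """Allow a file only if its hash appears in the trusted set."""
    return sha256_of(path) in trusted_hashes


# Demonstration with two throwaway files standing in for executables.
with tempfile.TemporaryDirectory() as tmp:
    trusted = Path(tmp) / "trusted_tool.bin"
    unknown = Path(tmp) / "unknown_tool.bin"
    trusted.write_bytes(b"known good program")
    unknown.write_bytes(b"something else")

    allowlist = {sha256_of(trusted)}
    result_trusted = may_run(trusted, allowlist)
    result_unknown = may_run(unknown, allowlist)

print(result_trusted)   # True
print(result_unknown)   # False
```

An allowlist of this kind blocks anything not explicitly approved, including a trusted file that has been modified, since any change to its contents changes its hash.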

Conclusion

In this odyssey through the digital safety frontier, the Cyber Swachhta Kendra stands as a testament to the power of collective vigilance. It is a reminder that in the vast, interconnected web of the internet, the security of one is the security of all. As we navigate the dark corners of the internet, let us equip ourselves with knowledge and tools, and may our devices remain steadfast sentinels in the ceaseless battle against the unseen adversaries of the digital age.

References

- https://timesofindia.indiatimes.com/gadgets-news/five-government-provided-botnet-and-malware-cleaning-tools/articleshow/107951686.cms

- https://indianexpress.com/article/technology/tech-news-technology/cyber-swachhta-kendra-free-botnet-detection-removal-tools-digital-india-8650425/