#FactCheck: Fake viral AI video captures a real-time bridge failure incident in Bihar

Executive Summary:

A video went viral on social media claiming to show a bridge collapsing in Bihar. The video prompted panic and discussion across various social media platforms. However, a detailed inquiry determined that it was not real footage but AI-generated content engineered to look like a real bridge collapse. This is a clear case of misinformation being used to create panic and confusion.

Claim:

The viral video shows a real bridge collapse in Bihar, indicating possible infrastructure failure or a recent incident in the state.

Fact Check:

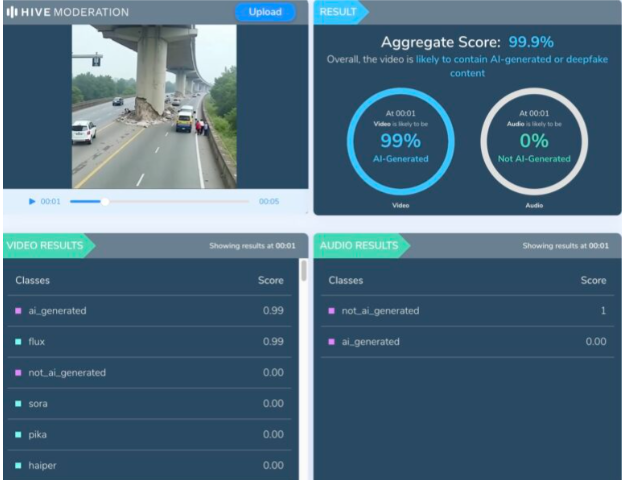

Examination of the viral video revealed several visual anomalies, such as unnatural movements, disappearing people, and unusual debris behavior, which suggested the footage was generated artificially. We ran the video through the Hive AI Detector, which supported this assessment, labelling the content as 99.9% AI. The environment also lacks realism, and the video contains abrupt, animation-like effects that would not typically occur in actual footage.

No credible news outlet or government agency reported a recent bridge collapse in Bihar. Taken together, these factors confirm that the video is fabricated: it was created with artificial intelligence and designed to mislead viewers into thinking it showed a real-life disaster.

Conclusion:

The viral video is fake and has been confirmed to be AI-generated. It falsely claims to show a bridge collapsing in Bihar. Videos of this kind spread misinformation and illustrate the growing concern about AI-generated content being used to mislead viewers.

Claim: A recent viral video captures a real-time bridge failure incident in Bihar.

Claimed On: Social Media

Fact Check: False and Misleading

Related Blogs

Introduction: The Internet’s Foundational Ideal of Openness

The Internet was built as a decentralised network to foster open communication and global collaboration. Unlike traditional media or state infrastructure, no single government, company, or institution controls the Internet. Instead, it has historically been governed by consensus among the multiple communities, such as universities, independent researchers, and engineers, that were involved in building it. This bottom-up, cooperative approach was the foundation of Internet governance and ensured that the Internet remained open, interoperable, and accessible to all. As the Internet began to influence every aspect of life, including commerce, culture, education, and politics, it required a more organised governance model. This prompted the rise of the multi-stakeholder internet governance model in the early 2000s.

The Rise of Multistakeholder Internet Governance

Representatives from governments, civil society, technical experts, and the private sector congregated at the United Nations World Summit on the Information Society (WSIS) and adopted the Tunis Agenda for the Information Society. Per this Agenda, internet governance was defined as “… the development and application by governments, the private sector, and civil society in their respective roles of shared principles, norms, rules, decision-making procedures, and programmes that shape the evolution and use of the Internet.” Internet issues cut across technical, political, economic, and social domains, and no one actor can manage them alone. Thus, stakeholders with varying interests are meant to come together to give direction to issues in the digital environment, like data privacy, child safety, cybersecurity, freedom of expression, and more, while upholding human rights.

Internet Governance in Practice: A History of Power Shifts

While the idea of democratizing Internet governance is a noble one, the Tunis Agenda has been criticised for reflecting geopolitical asymmetries and relegating the roles of technical communities and civil society to the sidelines. Throughout the history of the internet, certain players have wielded more power in shaping how it is managed. Accordingly, internet governance can be said to have undergone three broad phases.

In the first phase, the Internet was managed primarily by technical experts in universities and private companies, which contributed to building and scaling it up. The standards and protocols set during this phase are in use today and make the Internet function the way it does. This was the time when the Internet was a transformative invention and optimistically hailed as the harbinger of a utopian society, especially in the USA, where it was invented.

In the second phase, the ideal of multistakeholderism was promoted, in which all those who benefit from the Internet work together to create processes that will govern it democratically. This model also aims to reduce the Internet’s vulnerability to unilateral decision-making, an ideal that has been under threat because this phase has seen the growth of Big Tech. What started as platforms enabling access to information, free speech, and creativity has turned into a breeding ground for misinformation, hate speech, cybercrime, Child Sexual Abuse Material (CSAM), and privacy concerns. The rise of generative AI only compounds these challenges. Tech giants like Google, Meta, X (formerly Twitter), OpenAI, Microsoft, Apple, etc. have amassed vast financial capital, technological monopoly, and user datasets. This gives them unprecedented influence not only over communications but also culture, society, and technology governance.

The anxieties surrounding Big Tech have fed into the third phase, with increasing calls for government regulation and digital nationalism. Governments worldwide are scrambling to regulate AI, data privacy, and cybersecurity, often through processes that lack transparency. An example is India’s Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, which were notified without parliamentary debate. Governments are also pressuring platforms to remove content through opaque takedown orders. Laws like the UK’s Investigatory Powers Act, 2016, are criticised for giving the government the power to indirectly mandate encryption backdoors, compromising the strength of end-to-end encryption systems. Further, the internet itself is fragmenting into the “splinternet” amid rising geopolitical tensions, in the form of Russia’s “sovereign internet” or China’s Great Firewall.

Conclusion

While multistakeholderism is an ideal, Internet governance is a playground of contesting power relations in practice. As governments assert digital sovereignty and Big Tech consolidates influence, the space for meaningful participation of other stakeholders has been negligible. Consultation processes have often been symbolic. The principles of openness, inclusivity, and networked decision-making are once again at risk of being sidelined in favour of nationalism or profit. The promise of a decentralised, rights-respecting, and interoperable internet will only be fulfilled if we recommit to the spirit of Multi-Stakeholder Internet Governance, not just its structure. Efficient internet governance requires that the multiple stakeholders be empowered to carry out their roles, not just talk about them.

References

- https://www.newyorker.com/magazine/2024/02/05/can-the-internet-be-governed

- https://www.internetsociety.org/wp-content/uploads/2017/09/ISOC-PolicyBrief-InternetGovernance-20151030-nb.pdf

- https://itp.cdn.icann.org/en/files/government-engagement-ge/multistakeholder-model-internet-governance-fact-sheet-05-09-2024-en.pdf

- https://nrs.help/post/internet-governance-and-its-importance/

- https://daidac.thecjid.org/how-data-power-is-skewing-internet-governance-to-big-tech-companies-and-ai-tech-guys/

Introduction

As India moves full steam ahead towards a trillion-dollar digital economy, how user data is gathered, processed and safeguarded is under the spotlight. One of the most pervasive but least known technologies used to gather user data is the cookie. Cookies are inserted into every website and application to improve functionality, measure usage and customize content. But they also present enormous privacy threats, particularly when used without explicit user approval.

In 2023, India passed the Digital Personal Data Protection Act (DPDP) to give strong legal protection to data privacy. Though the Act does not refer to cookies by name, its language leaves no doubt that it covers any technology that gathers or processes personal information. Cookie regulation is therefore at the centre of digital compliance in India. This blog covers what cookies are, how international legislation such as the GDPR has addressed them, and how India's DPDP will regulate their use.

What Are Cookies and Why Do They Matter?

Cookies are small pieces of data that a website stores in the user's browser. They were originally designed to help websites remember useful information about users, such as a login session or the contents of a shopping cart. Netscape first built them in 1994 to make web surfing more efficient.

Cookies come in several types. Session cookies are temporary and are deleted when the browser is closed, whereas persistent cookies are stored on the device and can track users over a period of time. First-party cookies are set by the site one is visiting, while third-party cookies come from other domains and are usually used for advertising or analytics. Special cookies, such as secure cookies, zombie cookies and tracking cookies, differ in purpose and risk. Cookies can gather information such as IP addresses, device IDs and browsing history. Because this information is associated with a person, it counts as personal data under most data protection regulations.
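The difference between a session cookie and a persistent cookie comes down to whether the `Set-Cookie` header carries an expiry attribute. The following is a minimal sketch using Python's standard `http.cookies` module. The helper names (`build_cookie`, `is_persistent`) and the cookie names and values are hypothetical and chosen only for illustration.

```python
from http.cookies import SimpleCookie


def build_cookie(name, value, max_age=None, secure=False):
    """Return the value of a Set-Cookie header for one cookie.

    Hypothetical helper: without max_age the browser treats the cookie
    as a session cookie and discards it when the browser is closed.
    """
    cookie = SimpleCookie()
    cookie[name] = value
    morsel = cookie[name]
    if max_age is not None:
        morsel["max-age"] = max_age  # lifetime in seconds: persistent cookie
    if secure:
        morsel["secure"] = True      # only sent over HTTPS
        morsel["httponly"] = True    # not readable from page scripts
    return morsel.OutputString()


def is_persistent(header_value):
    """True if the cookie carries an expiry attribute (Max-Age or Expires)."""
    parsed = SimpleCookie()
    parsed.load(header_value)
    return any(m["max-age"] or m["expires"] for m in parsed.values())


# Illustrative cookies (names and values are made up).
session_cookie = build_cookie("cart_id", "abc123")
persistent_cookie = build_cookie(
    "visitor_id", "u-42", max_age=60 * 60 * 24 * 365, secure=True
)

print(session_cookie)
print(persistent_cookie)
```

A persistent identifier such as the second cookie is the kind that can be linked to a person over time, which is why it tends to fall within the definition of personal data.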

A Brief Overview of the GDPR and Cookie Policy

The GDPR regulates how personal data can be processed in general. When a cookie collects personal data (such as IP addresses or identifiers that can track a person), the GDPR applies: it sets the rules on how that personal data may be processed, what lawful bases are required, and what rights the user has.

The ePrivacy Directive (also called the “Cookie Law”) specifically regulates how cookies and similar technologies can be used. Article 5(3) of the ePrivacy Directive says that storing or accessing information (such as cookies) on a user’s device requires prior, informed consent, unless the cookie is strictly necessary for providing the service requested by the user.

In the seminal Planet49 decision, the Court of Justice of the European Union held that pre-ticked boxes do not constitute valid consent. In another prominent enforcement action, France's CNIL fined Amazon €35 million for using tracking cookies without user consent.

Cookies and India’s Digital Personal Data Protection Act (DPDP), 2023

India's Digital Personal Data Protection Act, 2023 does not refer to cookies specifically, but its provisions necessarily come into play when cookies harvest personal data such as user activity, IP addresses, or device data. Under the DPDP, personal data is to be processed for legitimate purposes with the individual's consent. The consent has to be free, informed, clear and unambiguous. Individuals have to be informed of what data is collected and how it will be processed. The Act also forbids behavioural monitoring and targeted advertising in the case of children.

The Ministry of Electronics and IT released the Business Requirements Document for Consent Management Systems (BRDCMS) in June 2025. Although it is not binding by law, it provides operational advice on cookie consent. It recommends that websites use cookie banners with "Accept," "Reject," and "Customize" choices. Users must be able to withdraw or change their consent at any moment. Multi-language handling and automatic expiry of cookie preferences are also suggested to suit accessibility and privacy requirements.
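The recommendations above (three banner choices, withdrawal at any time, automatic expiry of preferences) can be sketched as a small consent record. This is an illustrative sketch only, not the BRDCMS data model: the category names, the 180-day expiry window, and the names `ConsentRecord` and `record_choice` are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical non-essential categories a banner might offer.
NON_ESSENTIAL = ("analytics", "ads")


@dataclass
class ConsentRecord:
    granted: set = field(default_factory=set)
    recorded_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )
    ttl: timedelta = timedelta(days=180)  # assumed expiry window

    def is_expired(self, now=None):
        now = now or datetime.now(timezone.utc)
        return now >= self.recorded_at + self.ttl

    def allows(self, category, now=None):
        # Expired preferences count as "no consent": the user is asked again.
        return not self.is_expired(now) and category in self.granted

    def withdraw(self, category=None):
        # Withdraw one category, or everything when no category is given.
        if category is None:
            self.granted.clear()
        else:
            self.granted.discard(category)


def record_choice(choice, selected=()):
    """Translate a banner click into a consent record."""
    if choice == "accept":
        granted = set(NON_ESSENTIAL)
    elif choice == "reject":
        granted = set()
    elif choice == "customize":
        # Ignore anything that is not a known non-essential category.
        granted = set(selected) & set(NON_ESSENTIAL)
    else:
        raise ValueError(f"unknown choice: {choice!r}")
    return ConsentRecord(granted=granted)


record = record_choice("customize", ["analytics"])
print(record.allows("analytics"), record.allows("ads"))
record.withdraw("analytics")
print(record.allows("analytics"))
```

Treating an expired record the same as a refusal keeps the default on the side of privacy, so a lapse in stored preferences never silently re-enables tracking.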

The DPDP Act and the BRDCMS together create a robust user-rights model, even in the absence of a special cookie law.

What Should Indian Websites Do?

To stay compliant, Indian websites and online platforms need to act promptly to align their use of cookies with DPDP principles. This begins with a transparent and simple cookie banner that gives users the opportunity to accept or decline non-essential cookies. Consent needs to be meaningful; coercive tactics such as cookie walls must not be employed. Websites need to classify cookies (e.g., necessary, analytics and ads) and describe each category's function in plain terms in the privacy policy. Users must be given the option to modify cookie settings at any time using a Consent Management Platform (CMP). Monitoring children or collecting their behavioural information must be strictly off-limits.
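One way to picture cookie classification in practice is a gate that every cookie must pass before it is set. The sketch below is a hypothetical illustration: the cookie names, the category map, and the function `may_set_cookie` are assumptions, and the choice to block both analytics and advertising cookies for children is one cautious reading of the restriction on monitoring children, not a statement of what the Act requires.

```python
# Hypothetical classification of a site's cookies by category.
COOKIE_CATEGORIES = {
    "session_id": "necessary",
    "cart_id": "necessary",
    "page_views": "analytics",
    "ad_profile": "ads",
}


def may_set_cookie(name, granted, is_child=False):
    """Decide whether a cookie may be set for this user.

    granted  -- set of non-essential categories the user has consented to
    is_child -- assumed to come from the site's own age verification
    """
    category = COOKIE_CATEGORIES.get(name)
    if category is None:
        return False  # unclassified cookies are denied by default
    if category == "necessary":
        return True   # needed to deliver the service the user requested
    if is_child:
        return False  # no behavioural tracking of children (assumed policy)
    return category in granted


print(may_set_cookie("session_id", set()))
print(may_set_cookie("ad_profile", {"analytics"}))
print(may_set_cookie("ad_profile", {"ads"}, is_child=True))
```

Denying unclassified cookies by default means a newly added tracker cannot slip through before it has been described in the privacy policy.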

These steps are not only about complying with the law; they are about practising ethical data stewardship and building user trust.

What Should Users Do?

Users need to understand and control cookies to maintain their privacy online. Begin by reading cookie notices carefully and declining unnecessary cookies, particularly those associated with tracking or advertising. Most browsers today support blocking third-party cookies altogether or deleting them periodically.

It is also advisable to check and adjust privacy settings on websites and mobile applications. Browser add-ons such as ad blockers or privacy extensions can help minimise surveillance. Users should also avoid clicking "accept all" in cookie notices by default and instead choose "customise" or "reject" for cookies they do not need.

Finally, keeping abreast of data rights under Indian law, such as the right to withdraw consent or to have data deleted, will enable people to reclaim control over their online presence.

Conclusion

Cookies are a fundamental component of the modern web, but they raise significant concerns about individual privacy. India's DPDP Act, 2023, though not explicitly referring to cookies, contains an effective legal framework that regulates any data collection activity involving personal data, including those facilitated by cookies.

As India continues to make progress towards comprehensive rulemaking and regulation, companies need to implement privacy-first practices today. Users, too, must take an active role in their own digital lives. Collectively, compliance, transparency and awareness can build a more secure and ethical internet ecosystem where privacy is prioritised by design.

References

- https://prsindia.org/billtrack/digital-personal-data-protection-bill-2023

- https://gdpr-info.eu/

- https://d38ibwa0xdgwxx.cloudfront.net/create-edition/7c2e2271-6ddd-4161-a46c-c53b8609c09d.pdf

- https://oag.ca.gov/privacy/ccpa

- https://www.barandbench.com/columns/cookie-management-under-the-digital-personal-data-protection-act-2023#:~:text=The%20Business%20Requirements%20Document%20for,the%20DPDP%20Act%20and%20Rules.

- https://samistilegal.in/cookies-meaning-legal-regulations-and-implications/#

- https://secureprivacy.ai/blog/india-digital-personal-data-protection-act-dpdpa-cookie-consent-requirements

- https://law.asia/cookie-use-india/

- https://www.cookielawinfo.com/major-gdpr-fines-2020-2021/#:~:text=4.,French%20websites%20could%20refuse%20cookies.

Introduction

Fundamentally, artificial intelligence (AI) is the greatest extension of human intelligence. It is the culmination of centuries of logic, reasoning, math, and creativity: machines trained to reflect cognition. However, such intelligence no longer resembles intelligence at all when it is put in the hands of the irresponsible, the malicious, or the perverse and unleashed into the wild with minimal safeguards. Instead, it becomes a distortion, a tool of debasement rather than enlightenment.

Recent incidents involving sexually explicit photographs created by AI on social media sites reveal an extremely unsettling reality. When intelligence is detached from accountability, morality, and governance, it corrodes society rather than elevates it. We are seeing a failure of stewardship rather than just a failure of technology.

The Cost of Unchecked Intelligence

The AI chatbot Grok, which operates under Elon Musk’s X (formerly Twitter), is the subject of a debate that goes beyond a single platform or product. The romanticisation of “unfiltered” knowledge and the perilous notion that innovation should come before accountability are signs of a bigger lapse in the digital ecosystem. We have allowed mechanisms that can be used as weapons against human dignity, especially the dignity of women and children, in the name of freedom.

We are no longer discussing artistic expression or experimental AI when a machine can digitally undress women, morph photos, or produce sexualised portrayals of kids with a few keystrokes. We stand in the face of algorithmic violence. Even if the physical touch is absent, the harm caused by it is genuine, long-lasting, and extremely personal.

The Regulatory Red Line

A major inflexion point was reached when the Indian government responded by ordering a thorough technical, procedural, and governance-level audit. The order acknowledges that AI systems are not isolated entities and that the platforms deploying them are not neutral pipes but intermediaries with responsibilities. The Bharatiya Nyaya Sanhita, the IT Act, the IT Rules 2021, and the possible removal of Section 79 safe-harbour safeguards all make it evident that innovation does not confer automatic immunity.

However, the fundamental dilemma cannot be resolved by legislation alone. AI is hailed as a force multiplier for innovation, productivity, and advancement, but when incentives are biased towards engagement, virality, and shock value, its misuse shows how easily intelligence can turn into ugliness. The output receives greater attention the more provocative it is. Profit increases with attention. Restraint turns into a business disadvantage in this ecology.

The Aftermath

Grok’s own acknowledgement that “safeguard lapses” enabled the creation of pictures showing children wearing skimpy attire underscores a troubling reality: safety was not absent due to impossibility, but due to insufficiency. It was always possible to implement sophisticated filtering, more robust monitoring, and stricter oversight. They were simply not prioritised. When a system asserts that “no system is 100% foolproof,” it must also acknowledge that there is no acceptable margin of error when it comes to child protection.

The casual normalisation of such lapses is what is most troubling. Characterising these instances as “isolated cases” runs the risk of trivialising systemic design decisions. AI systems that have been trained on enormous amounts of human data inherit not only intelligence but also bias, misogyny, and power imbalances.

Conclusion

What is required today is recalibration. Platforms need to shift from reactive compliance to proactive accountability. Safeguards must be incorporated at the architectural level; they cannot be cosmetic or post-facto. Governance must encompass enforced ethical boundaries in addition to terms of service. The idea that “edgy” AI is a sign of advancement must also be rejected by society.

Artificial intelligence never promised freedom under the guise of vulgarity. Its promise was improvement, support, and augmentation. The fundamental core of intelligence is lost when it is used as a tool for degradation. So what is left is a choice between principled innovation and unbridled novelty, between responsibility and spectacle, between intelligence as purpose and intellect as power.

References

- https://www.rediff.com/news/report/govt-orders-x-review-of-grok-over-explicit-content/20260103.htm