#FactCheck - AI-generated images of Virat Kohli falsely claimed to be a child's sand art

Executive Summary:

The claim circulating on social media that a set of pictures shows a boy making sand art of Indian cricketer Virat Kohli is false. The pictures do not show real sand art. Analysis using AI detection tools such as ‘Hive’ and ‘Content at Scale AI detection’ confirms that the images are entirely generated by artificial intelligence. Netizens are sharing these pictures on social media without knowing that they are computer-generated.

Claims:

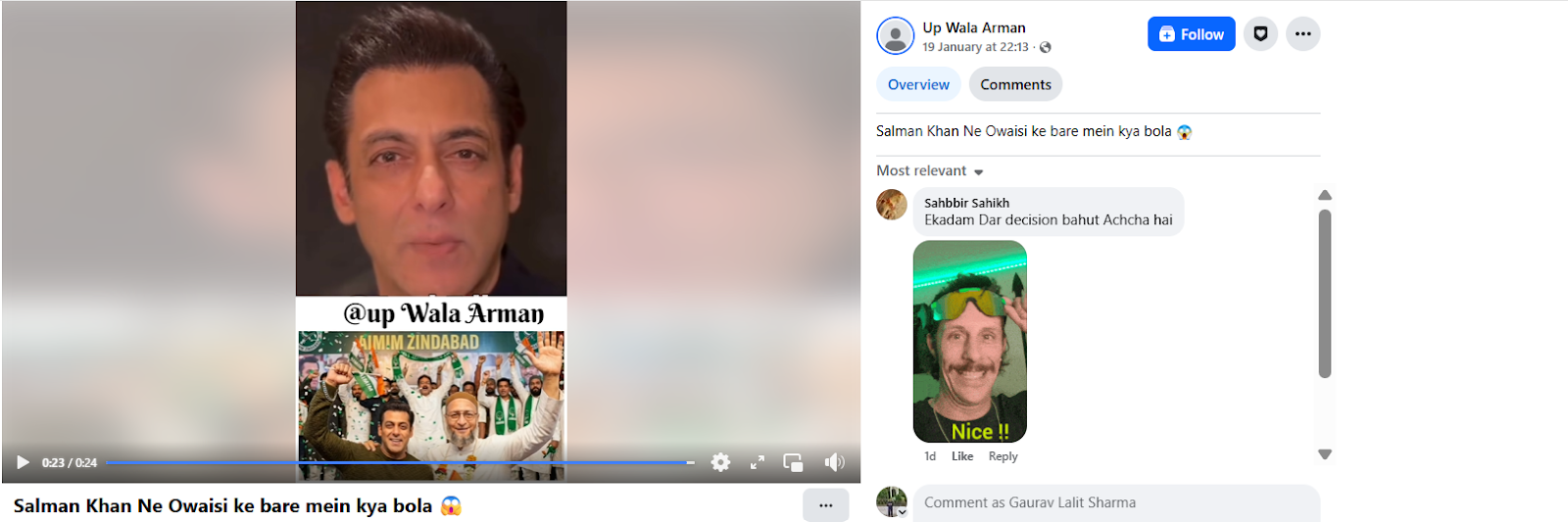

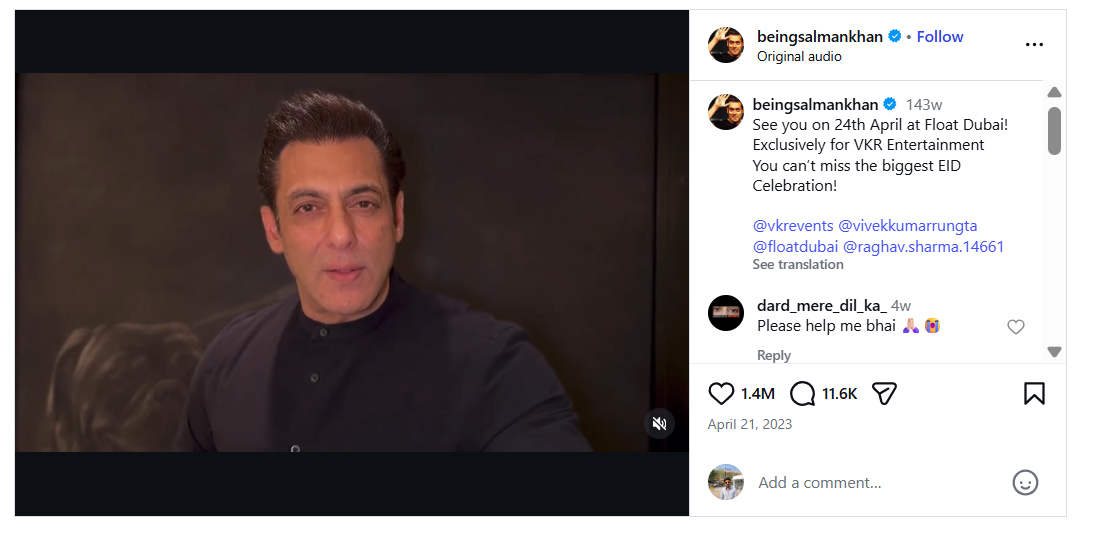

The collage of pictures purportedly shows a young boy creating sand art of Indian cricketer Virat Kohli.

Fact Check:

When we examined the posts, we found anomalies in each photo of the kind that are common in AI-generated images.

The anomalies include the abnormal shape of the child’s feet, a logo that blends into the sand color in the second image, and the misspelling ‘spoot’ instead of ‘sport’. The cricket bat is perfectly straight, which is odd for a portrait made of sand. In one photo the child's left hand bears a tattoo, while in the other photos it has none. Additionally, the face of the boy in the second image does not match the face in the other images. These discrepancies strengthened our suspicion that the images are synthetic media.

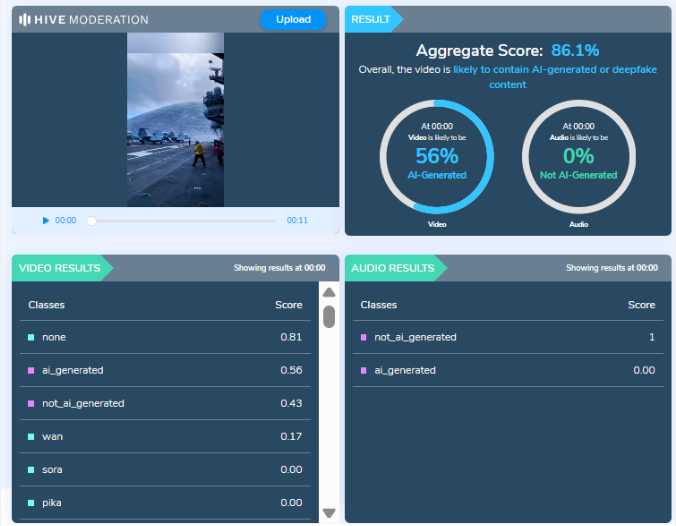

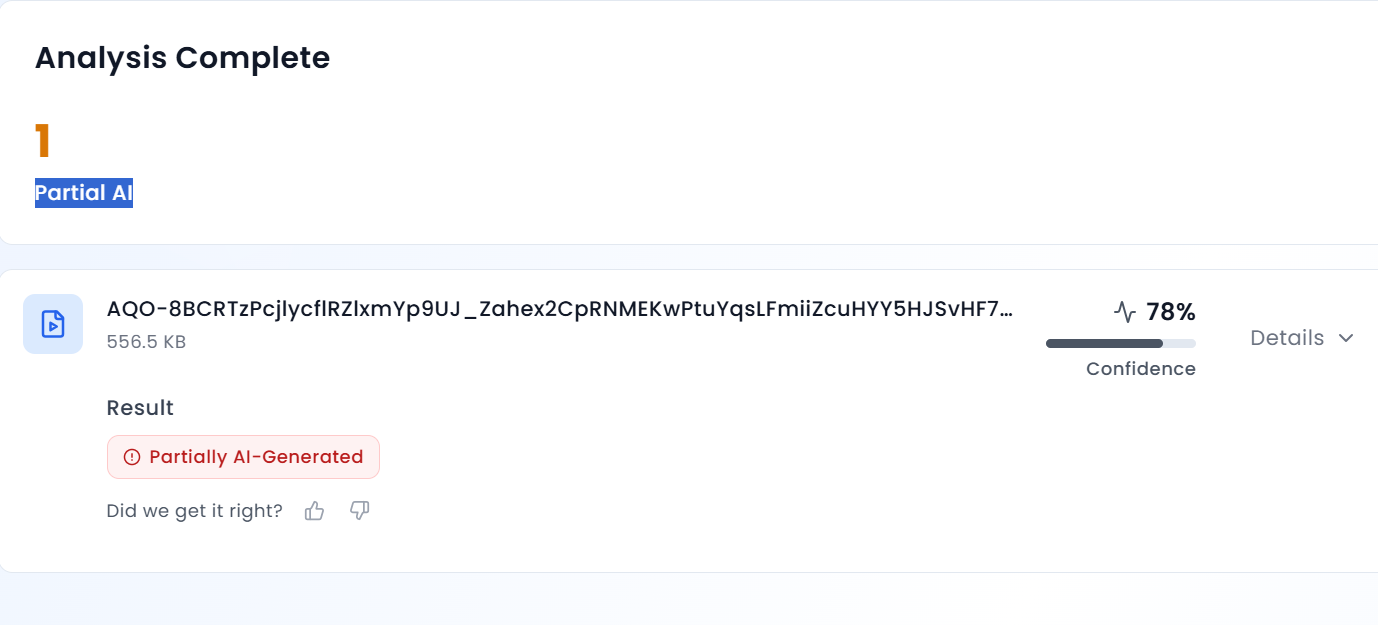

We then ran the images through an AI-generated image detection tool named ‘Hive’, which found them to be 99.99% AI-generated. We then checked with another detection tool named ‘Content at Scale’.

Hence, we conclude that the viral collage of images is AI-generated and is not sand art made by any child. The claim is false and misleading.

Conclusion:

In conclusion, the claim that the pictures show sand art of Indian cricket star Virat Kohli made by a child is false. Our analysis of the photos, together with the results from an AI detection tool, indicates that they were probably created with an AI image generator rather than by a real sand artist. Therefore, the images are not what they are claimed to be, nor were they made by the alleged creator.

Claim: A young boy has created sand art of Indian cricketer Virat Kohli.

Claimed on: X, Facebook, Instagram

Fact Check: Fake & Misleading