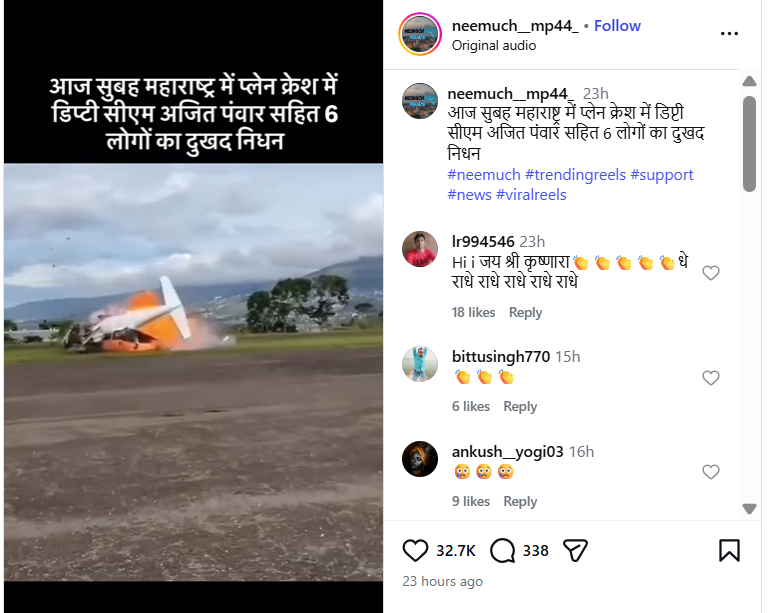

#FactCheck - Viral Video Falsely Linked to Baramati Plane Crash Involving Ajit Pawar

Executive Summary:

A video claiming to show the plane crash that allegedly killed Maharashtra Deputy Chief Minister Ajit Pawar has been widely circulated on social media. It began spreading soon after reports emerged of a tragic aircraft accident in Baramati, Maharashtra, on January 28, 2026, in which Ajit Pawar and five others were reported to have died. The viral video shows a plane crashing to the ground moments after take-off, and social media users have claimed that it captures the exact incident in which Ajit Pawar was on board. However, research by CyberPeace has found that this claim is false.

Claim:

An Instagram user shared the video on January 28, 2026, claiming that it showed the plane crash in Maharashtra in which Deputy Chief Minister Ajit Pawar and others allegedly lost their lives. The caption accompanying the video read: “This morning, Deputy CM Ajit Pawar and six others tragically died in a plane crash in Maharashtra.”

Links to the post and its archived version are provided below.

Fact Check:

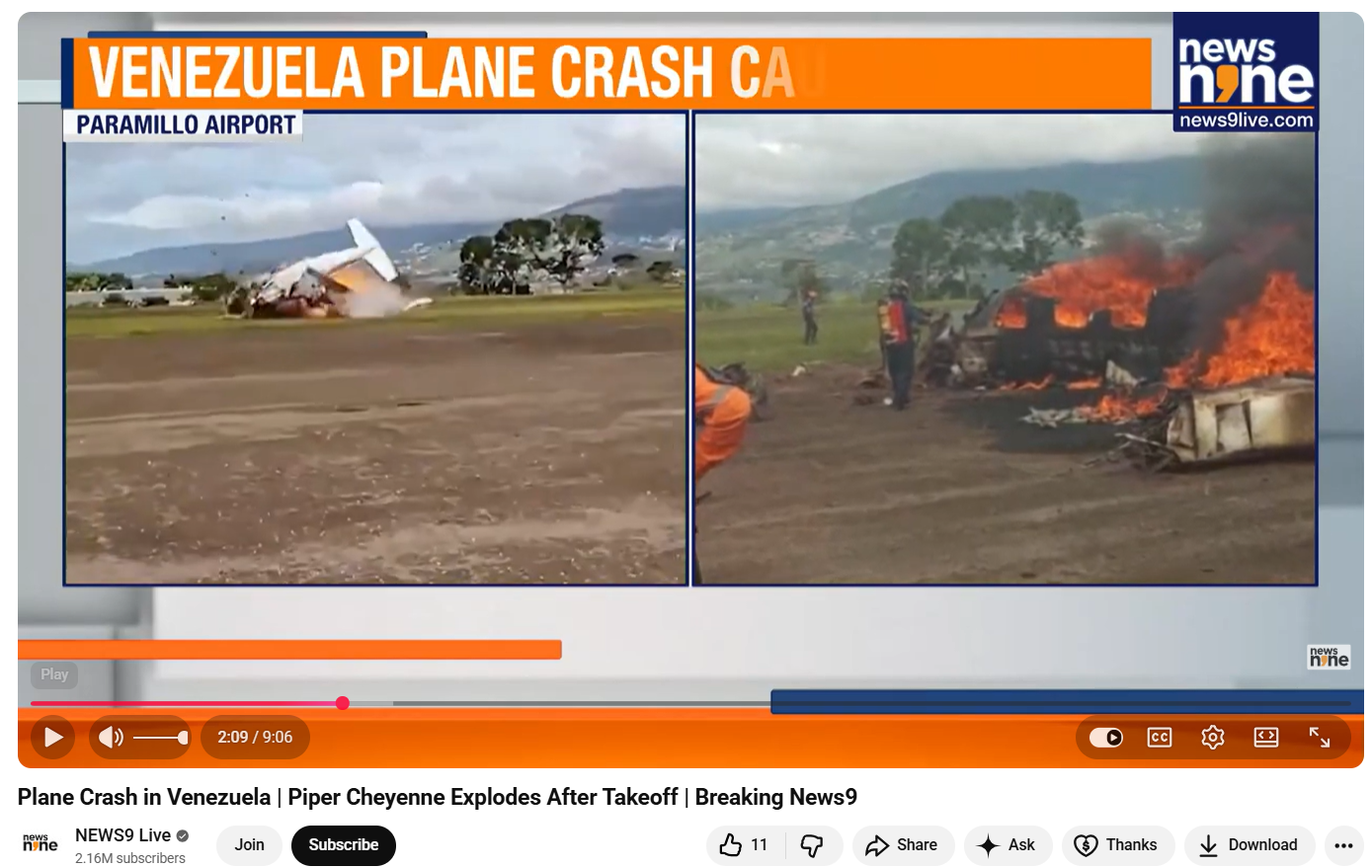

To verify the authenticity of the viral video, CyberPeace conducted a reverse image search of its keyframes. During this process, the same visuals were found in a video report uploaded on News9 Live’s official YouTube channel on October 23, 2025.

According to the report, the footage shows a plane crash in Venezuela, not India. The incident occurred shortly after a Piper Cheyenne aircraft took off from Paramillo Airport in Táchira, Venezuela. The aircraft crashed within seconds of take-off, killing both occupants on board. The deceased were identified as pilot José Bortone and co-pilot Juan Maldonado. Further confirmation came from a report published on October 22, 2025, by Latin American news outlet El Tiempo. The Spanish-language report also featured the same video visuals and stated that a small aircraft lost control and crashed on the runway at Paramillo Airport in Venezuela, resulting in the deaths of the pilot and co-pilot.
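CyberPeace does not say which tools it used beyond a reverse image search of keyframes. The sketch below illustrates one idea behind near-duplicate image matching: an average hash (aHash), which is a simple perceptual hash. It is an illustrative stand-in, not the algorithm any particular search engine uses. The sketch assumes the frames are already decoded into grayscale grids of 0-255 values whose dimensions are multiples of 8. The 10-bit match threshold is an arbitrary choice made for illustration.

```python
"""Sketch: compare video keyframes with an average hash (aHash).

Assumption: frames are already decoded to grayscale 2D lists of ints (0-255)
whose height and width are multiples of `size`.
"""

def average_hash(gray, size=8):
    """Block-average the grid down to size x size cells, then emit one bit per
    cell: 1 if the cell is brighter than the mean of all cells, else 0."""
    h, w = len(gray), len(gray[0])
    bh, bw = h // size, w // size
    cells = []
    for by in range(size):
        for bx in range(size):
            block = [gray[y][x]
                     for y in range(by * bh, (by + 1) * bh)
                     for x in range(bx * bw, (bx + 1) * bw)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming(a, b):
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_frame(frame_a, frame_b, threshold=10):
    """Treat two frames as near-duplicates if their 64-bit hashes differ by at
    most `threshold` bits (an illustrative cut-off, not a standard value)."""
    return hamming(average_hash(frame_a), average_hash(frame_b)) <= threshold
```

The hash records only whether each region is brighter than the frame's average. A uniform brightness change therefore tends to leave it unchanged, which is one reason a re-uploaded clip can still be matched to its source.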

Conclusion

CyberPeace’s research clearly establishes that the viral video being shared as footage of Ajit Pawar’s alleged plane crash in Baramati is misleading. The video actually shows a plane crash that occurred in Venezuela in October 2025 and has been falsely linked to the reported tragedy in India.

Related Blogs

Executive Summary

A viral image claims to show an Israeli helicopter shot down in South Lebanon. This investigation evaluates the authenticity of the picture and concludes that it is an old photograph, taken out of context and presented as a recent event.

Claims

The viral image circulating online claims to depict an Israeli helicopter recently shot down in South Lebanon during the ongoing conflict between Israel and militant groups in the region.

Factcheck:

A reverse image search led us to a 2019 post on Arab48.com featuring the exact viral picture.

This traces the image to its original source, published in 2019, well before the current hostilities, which disproves the claim that it shows a recent incident.

No official reports from major news agencies or the Israel Defense Forces confirm that a helicopter was shot down in southern Lebanon during the current hostilities.

Conclusion

The CyberPeace Research Team has concluded that the viral image claiming to show an Israeli helicopter shot down in South Lebanon is misleading and unrelated to current events. It is an old photograph that has been widely shared in a different context, fuelling tensions around the conflict. Readers are advised to verify claims against credible sources and not to spread false narratives.

- Claim: Israeli helicopter recently shot down in South Lebanon

- Claimed On: Facebook

- Fact Check: Misleading; original image found through a Google reverse image search

Introduction

In a landmark move for India’s growing artificial intelligence (AI) ecosystem, ten cutting-edge Indian startups have been selected to participate in the prestigious Global AI Accelerator Programme in Paris. The initiative, facilitated by the Ministry of Electronics and Information Technology (MeitY) under the IndiaAI Mission, aims to project India’s AI innovation on the global stage and to empower startups to scale impactful solutions while fostering cross-border collaboration.

Launched in alignment with the vision of India as a global AI powerhouse, the IndiaAI initiative has been working to strengthen domestic AI capabilities. Participation in the Paris Accelerator Programme is a direct extension of this mission, offering Indian startups access to world-class mentorship, investor networks, and a thriving innovation ecosystem in France, one of Europe’s leading AI hubs.

Global Acceleration for Local Impact

The ten selected startups represent diverse verticals, from conversational AI to cybersecurity, edtech and surveillance intelligence. This selection was made after a rigorous evaluation of innovation potential, scalability, and societal impact. Each of these ventures represents India's technological ambition and capacity to solve real-world problems through AI.

The significance of this opportunity goes beyond business growth. It lays the foundation for collaborative policy dialogues, ethical AI development, and bilateral innovation frameworks. With rising global scrutiny of issues such as AI safety, bias, and misinformation, the need for more responsible innovation takes centre stage.

CyberPeace Outlook

India’s participation opens a pivotal chapter in its AI diplomacy. Such initiatives make it possible to explore AI not just as a commercial tool but as a cornerstone of national security, citizen safety, and digital sovereignty. As AI systems increasingly integrate with critical infrastructure, from health to law enforcement, cyber resilience becomes significant. AI is increasingly used in sensitive sectors such as audio-video surveillance and digital edtech, so there is an urgent need for secure-by-design innovation. Building security, ethics, and accountability into the development lifecycle becomes important and aligns with India’s broader goal of balancing security with digital progress.

Conclusion

India’s participation in the Paris Accelerator Programme signifies its commitment to shaping global AI norms and innovation diplomacy. As Indian startups interact with European regulators, investors, and technologists, they carry the responsibility of representing not just business acumen but the values of an open, inclusive, and secure digital future.

This global exposure also feeds directly into India’s domestic AI strategy by informing policy evolution, strengthening research and development networks, and building a robust talent pipeline. Programmes like these act as bridges that help India remain adaptive in the ever-evolving AI landscape. It is paramount to encourage such global engagements while working actively with stakeholders to build frameworks that safeguard national interests, protect civil liberties, and foster innovation. As India takes this global leap, the journey ahead must be shaped by innovation, collaboration, and vigilance.



Executive Summary:

In late 2024, an Indian healthcare provider suffered a severe cyberattack that demonstrated how potent AI-powered ransomware can be. This blog covers the background of the attack, how it was carried out, its medical and financial effects, how the organisation responded, and the final outcome. Throughout, it highlights the dangers the healthcare industry faces when cybersecurity measures are inadequate. The incident disrupted normal business operations and illustrated the economic and reputational losses that cyber threats can cause. The technical findings offer further evidence and analysis of advanced AI malware, along with best practices for defending against it.

1. Introduction

The integration of artificial intelligence (AI) in cybersecurity has revolutionised both defence mechanisms and the strategies employed by cybercriminals. AI-powered attacks, particularly ransomware, have become increasingly sophisticated, posing significant threats to various sectors, including healthcare. This report delves into a case study of an AI-powered ransomware attack on a prominent Indian healthcare provider in 2024, analysing the attack's execution, impact, and the subsequent response, along with key technical findings.

2. Background

In late 2024, a leading Indian healthcare organisation involved in the research and development of AI techniques fell prey to an AI-driven ransomware attack designed to maximise its impact. Many businesses today depend on data, and healthcare in particular requires real-time operations, so the sector has become a favourite target of cybercriminals. With AI assistance, the attackers mounted a far more targeted and damaging attack that severely disrupted the provider’s operations and jeopardised the security of patient information.

3. Attack Execution

The attack began with a phishing email targeting a hospital administrator. The email carried an infected attachment that, when opened, deployed the AI-enabled ransomware into the hospital’s network. Traditional ransomware spreads indiscriminately, but this variant first studied the hospital’s IT network. It then prioritised critical systems for encryption, such as the electronic health records and billing systems.

The malware’s AI capability allowed it to learn and adapt how it propagated through the network and to prioritise encrypting the most valuable data. This precision strengthened the attackers’ leverage for a larger ransom and also reduced the risk of early detection.

4. Impact

The consequences of the attack were immediate and severe:

- Operational Disruption: Because critical systems were centralised, encrypting them brought the hospital’s operations to a halt. Surgeries, routine medical procedures, and patient admissions were delayed or, in some cases, redirected to other hospitals.

- Data Security: Electronic patient records and associated billing data became inaccessible, putting patient confidentiality at risk. The threat of permanent data loss was a serious concern for both the healthcare provider and its patients.

- Financial Loss: The attackers demanded 100 crore Indian rupees (approximately USD 12 million) for the decryption key. The hospital did not pay, but it still incurred losses from server downtime, harm to affected patients, incident-response costs, and reputational damage.

5. Response

As soon as the hospital’s management was informed that ransomware was present, its IT department joined forces with cybersecurity professionals and local police. The team decided not to pay the ransom and instead to recover the systems from backups. This was an ethically and strategically sound decision, but it was not without challenges. Reconstruction was gradual, and some patient records were permanently lost.

To prevent future attacks, the healthcare provider implemented several organisational and technical measures, such as network isolation and strengthened cybersecurity controls. Even so, the attack had exposed serious gaps in the security measures and protocols of the provider’s IT systems.

6. Outcome

The attack had far-reaching consequences:

- Financial Impact: The healthcare provider incurred substantial costs from service disruption, from strengthening its cybersecurity, and from compensating patients.

- Reputational Damage: The data breach risked a complete loss of confidence among patients and the public, damaging the provider’s reputation. This affected patient care and ultimately had long-term effects on revenue as patient retention suffered.

- Industry Awareness: The breach fuelled nationwide discussions on how to improve cybersecurity provisions in the healthcare industry. It prompted other care providers to review and strengthen their cyber defences.

7. Technical Findings

The AI-powered ransomware attack on the healthcare provider revealed several technical vulnerabilities and provided insights into the sophisticated mechanisms employed by the attackers. These findings highlight the evolving threat landscape and the importance of advanced cybersecurity measures.

7.1 Phishing Vector and Initial Penetration

- Sophisticated Phishing Tactics: The phishing email was crafted with precision, utilising AI to mimic the communication style of trusted contacts within the organisation. The email bypassed standard email filters, indicating a high level of customization and adaptation, likely due to AI-driven analysis of previous successful phishing attempts.

- Exploitation of Human Error: The phishing email targeted an administrative user with access to critical systems, exploiting the lack of stringent access controls and user awareness. The successful penetration into the network highlighted the need for multi-factor authentication (MFA) and continuous training on identifying phishing attempts.
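The findings above point to multi-factor authentication as a missing control. The report does not say which MFA method the provider adopted. As an illustration of one common second factor, here is a minimal sketch of time-based one-time passwords (TOTP, RFC 6238, built on RFC 4226 HOTP) that uses only the Python standard library. The function names, the 30-second step, and the one-step drift window are assumptions made for illustration. A production system should rely on a vetted library and protected secret storage.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226 HOTP: HMAC-SHA1 over the 8-byte big-endian counter,
    followed by dynamic truncation to `digits` decimal digits."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low nibble of the last byte picks the window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def totp(secret: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 TOTP: HOTP with counter = floor(unix_time / step)."""
    return hotp(secret, int(at // step), digits)


def verify_totp(secret, submitted, at=None, window=1, step=30, digits=6):
    """Accept a code from the current time step or up to `window` steps
    before or after it, to tolerate small clock drift (assumed policy)."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        candidate = totp(secret, now + drift * step, step, digits)
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(candidate, submitted):
            return True
    return False
```

The secret `b"12345678901234567890"` is the published test key from RFCs 4226 and 6238. It is handy for checking an implementation but must never be used in practice.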

7.2 AI-Driven Malware Behavior

- Dynamic Network Mapping: Once inside the network, the AI-powered malware executed a sophisticated mapping of the hospital's IT infrastructure. Using machine learning algorithms, the malware identified the most critical systems—such as Electronic Health Records (EHR) and the billing system—prioritising them for encryption. This dynamic mapping capability allowed the malware to maximise damage while minimising its footprint, delaying detection.

- Adaptive Encryption Techniques: The malware employed adaptive encryption techniques, adjusting its encryption strategy based on the system's response. For instance, if it detected attempts to isolate the network or initiate backup protocols, it accelerated the encryption process or targeted backup systems directly, demonstrating an ability to anticipate and counteract defensive measures.

- Evasive Tactics: The ransomware utilised advanced evasion tactics, such as polymorphic code and anti-forensic features, to avoid detection by traditional antivirus software and security monitoring tools. The AI component allowed the malware to alter its code and behaviour in real time, making signature-based detection methods ineffective.
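Polymorphic code defeats signature matching, so defenders often look at behaviour instead. One widely used heuristic rests on the fact that encrypted output has near-maximal Shannon entropy, close to 8 bits per byte. A burst of high-entropy file writes can therefore indicate that ransomware encryption is in progress. The sketch below applies this idea under stated assumptions. The thresholds (7.5 bits per byte, at least 20 writes, 80 % of writes) are illustrative guesses, not values from the investigation. Compressed formats such as ZIP or JPEG also score high, so a real tool would combine this signal with others.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Entropy of the byte distribution, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    return sum(-(c / total) * math.log2(c / total) for c in Counter(data).values())


def looks_encrypted(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic only: ciphertext is near 8.0, but so is compressed data."""
    return shannon_entropy(data) >= threshold


def suspicious_burst(writes, min_writes=20, ratio=0.8, threshold=7.5):
    """`writes` holds the byte buffers observed in one time window (assumed
    to come from a file-system monitoring hook). Flag the window when enough
    writes occurred and most of them look encrypted."""
    if len(writes) < min_writes:
        return False
    high = sum(1 for w in writes if looks_encrypted(w, threshold))
    return high / len(writes) >= ratio
```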

7.3 Vulnerability Exploitation

- Weaknesses in Network Segmentation: The hospital’s network was insufficiently segmented, allowing the ransomware to spread rapidly across various departments. The malware exploited this lack of segmentation to access critical systems that should have been isolated from each other, indicating the need for stronger network architecture and micro-segmentation.

- Inadequate Patch Management: The attackers exploited unpatched vulnerabilities in the hospital’s IT infrastructure, particularly within outdated software used for managing patient records and billing. The failure to apply timely patches allowed the ransomware to penetrate and escalate privileges within the network, underlining the importance of rigorous patch management policies.
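Micro-segmentation is usually expressed as a default-deny policy: traffic between zones, and even within a zone, is blocked unless it is explicitly allowed. The sketch below audits observed network flows against such an allowlist. The zone names, the ports, and the `Flow` type are hypothetical and do not describe the hospital’s actual network.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src_zone: str
    dst_zone: str
    port: int


# Hypothetical allowlist: (source zone, destination zone, destination port).
ALLOWED = {
    ("clinical_workstations", "ehr", 443),
    ("billing_workstations", "billing", 443),
    ("admin_jump_host", "ehr", 22),
}


def is_allowed(flow: Flow, allowed=ALLOWED) -> bool:
    """Default deny: a flow passes only if it is listed explicitly.
    Traffic inside one zone is denied too unless listed (micro-segmentation)."""
    return (flow.src_zone, flow.dst_zone, flow.port) in allowed


def violations(flows, allowed=ALLOWED):
    """Return the observed flows that the policy would have blocked."""
    return [f for f in flows if not is_allowed(f, allowed)]
```

With this policy, a workstation zone reaching the EHR zone over file-sharing ports, or workstations talking to each other, shows up as a violation. That is the kind of lateral path the ransomware exploited.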

7.4 Data Recovery and Backup Failures

- Inaccessible Backups: The malware specifically targeted backup servers, encrypting them alongside primary systems. This revealed weaknesses in the backup strategy, including the lack of offline or immutable backups that could have been used for recovery. The healthcare provider’s reliance on connected backups left them vulnerable to such targeted attacks.

- Slow Recovery Process: The restoration of systems from backups was hindered by the sheer volume of encrypted data and the complexity of the hospital’s IT environment. The investigation found that the backups were not regularly tested for integrity and completeness, resulting in partial data loss and extended downtime during recovery.
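The finding that backups were never tested suggests a simple routine. Record a checksum manifest when a backup is taken, then periodically restore the backup to an isolated location and compare the two. Below is a minimal sketch using SHA-256 from Python’s `hashlib`. The function names and the three result categories are assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so that large backups need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map every file under `root` (as a POSIX-style relative path) to its digest."""
    return {p.relative_to(root).as_posix(): sha256_file(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def compare_manifests(expected: dict, actual: dict) -> dict:
    """Compare the manifest recorded at backup time with one built from a test restore."""
    return {
        "missing": sorted(expected.keys() - actual.keys()),
        "unexpected": sorted(actual.keys() - expected.keys()),
        "corrupted": sorted(k for k in expected.keys() & actual.keys()
                            if expected[k] != actual[k]),
    }
```

A restore test passes only when all three lists are empty. A typical call would be `compare_manifests(manifest_at_backup, build_manifest(Path("/restore-test")))`, where the restore path is a placeholder. Keeping the manifest itself on offline or immutable storage stops an attacker from rewriting it along with the backups.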

7.5 Incident Response and Containment

- Delayed Detection and Response: The initial response was delayed due to the sophisticated nature of the attack, with traditional security measures failing to identify the ransomware until significant damage had occurred. The AI-powered malware’s ability to adapt and camouflage its activities contributed to this delay, highlighting the need for AI-enhanced detection and response tools.

- Forensic Analysis Challenges: The anti-forensic capabilities of the malware, including log wiping and data obfuscation, complicated the post-incident forensic analysis. Investigators had to rely on advanced techniques, such as memory forensics and machine learning-based anomaly detection, to trace the malware’s activities and identify the attack vector.
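The investigators relied on machine-learning-based anomaly detection, which is too large for a short example. The sketch below swaps in a much simpler statistical baseline: a z-score on per-minute file-modification counts. It shows the underlying idea of flagging activity that deviates sharply from a host’s normal behaviour, but it is not an ML model. The metric, the baseline window, and the threshold of 4 standard deviations are assumptions, not details from the incident.

```python
import statistics


def zscore_alerts(baseline, observed, threshold=4.0):
    """`baseline` holds historical per-minute file-modification counts for one
    host, and `observed` holds recent per-minute counts. Returns the indices
    in `observed` whose z-score against the baseline exceeds `threshold`."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two samples")
    mean = statistics.mean(baseline)
    # Fall back to 1.0 on a perfectly flat baseline to avoid dividing by zero.
    stdev = statistics.pstdev(baseline) or 1.0
    return [i for i, count in enumerate(observed) if (count - mean) / stdev > threshold]
```

Mass encryption touches far more files per minute than normal clinical or billing work. Even this crude baseline would flag the spike, though adaptive malware that throttles itself is exactly why the source recommends richer behavioural models.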

8. Recommendations Based on Technical Findings

To prevent similar incidents, the following measures are recommended:

- AI-Powered Threat Detection: Implement AI-driven threat detection systems capable of identifying and responding to AI-powered attacks in real time. These systems should include behavioural analysis, anomaly detection, and machine learning models trained on diverse datasets.

- Enhanced Backup Strategies: Develop a more resilient backup strategy that includes offline, air-gapped, or immutable backups. Regularly test backup systems to ensure they can be restored quickly and effectively in the event of a ransomware attack.

- Strengthened Network Segmentation: Re-architect the network with robust segmentation and micro-segmentation to limit the spread of malware. Critical systems should be isolated, and access should be tightly controlled and monitored.

- Regular Vulnerability Assessments: Conduct frequent vulnerability assessments and patch management audits to ensure all systems are up to date. Implement automated patch management tools where possible to reduce the window of exposure to known vulnerabilities.

- Advanced Phishing Defences: Deploy AI-powered anti-phishing tools that can detect and block sophisticated phishing attempts. Train staff regularly on the latest phishing tactics, including how to recognize AI-generated phishing emails.
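The patch-management recommendation comes down to comparing what is installed against the first version that fixes known vulnerabilities. The sketch below performs that comparison for an inventory of hosts. The host names, software names, and version numbers are hypothetical. The sketch also assumes purely numeric dotted version strings; real tools must handle pre-release tags and vendor-specific schemes.

```python
def parse_version(v: str) -> tuple:
    """'2.10.3' -> (2, 10, 3). Numeric tuples compare correctly, so 2.9 < 2.10,
    whereas plain string comparison would get this wrong."""
    return tuple(int(part) for part in v.split("."))


def unpatched(inventory: dict, minimum_patched: dict) -> list:
    """`inventory` maps host -> {software: installed_version}, and
    `minimum_patched` maps software -> first version with the known fixes.
    Returns (host, software, installed, required) for every outdated install."""
    findings = []
    for host, packages in sorted(inventory.items()):
        for name, installed in sorted(packages.items()):
            required = minimum_patched.get(name)
            if required and parse_version(installed) < parse_version(required):
                findings.append((host, name, installed, required))
    return findings
```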

9. Conclusion

The AI-powered ransomware attack on an Indian healthcare provider in 2024 makes clear that the threat of advanced cyberattacks against healthcare facilities has grown. The technical findings outline the techniques the attackers used and underline the importance of proactive, robust security. The event sends a stark message: organisations must stay alert and invest substantially in cybersecurity, and they must also develop response plans that contain such incidents with minimal harm. Cybercriminals are now using AI to make their attacks more effective, and it is high time all healthcare organisations ensured that their critical systems and data are well protected against such attacks.