#FactCheck - Viral Video of Aircraft Carrier Destroyed in Sea Storm Is AI-Generated

Social media users are widely sharing a video claiming to show an aircraft carrier being destroyed after getting trapped in a massive sea storm. In the viral clip, the aircraft carrier can be seen breaking apart amid violent waves, with users describing the visuals as a “wrath of nature.”

However, CyberPeace Foundation’s research has found this claim to be false. Our fact-check confirms that the viral video does not depict a real incident and has instead been created using Artificial Intelligence (AI).

Claim:

An X (formerly Twitter) user shared the viral video with the caption, “Nature’s wrath captured on camera.” The video shows an aircraft carrier appearing to be devastated by a powerful ocean storm. The post can be viewed here, and its archived version is available here.

https://x.com/Maailah1712/status/2011672435255624090

Fact Check:

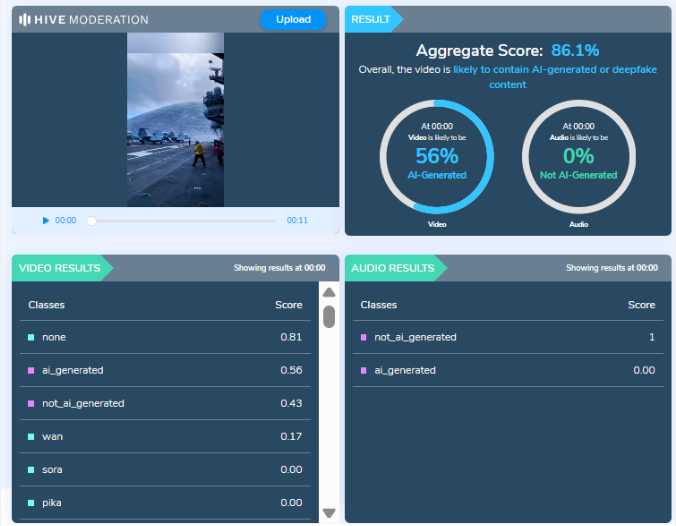

At first glance, the visuals shown in the viral video appear highly unrealistic and cinematic, raising suspicion about their authenticity. The exaggerated motion of waves, structural damage to the vessel, and overall animation-like quality suggest that the video may have been digitally generated. To verify this, we analyzed the video using AI detection tools.

The analysis conducted by Hive Moderation, a widely used AI content detection platform, indicates that the video is highly likely to be AI-generated. According to Hive’s assessment, there is nearly a 90 percent probability that the visual content in the video was created using AI.
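A detector score such as the one above is a probability, not a verdict, so it helps to be explicit about how a score is turned into a label. The following is a minimal sketch of that step only. It does not call Hive Moderation or reproduce its method; the function name `interpret_ai_score` and the thresholds 0.8 and 0.2 are hypothetical values chosen for illustration.

```python
def interpret_ai_score(score: float, likely: float = 0.8, unlikely: float = 0.2) -> str:
    """Map an AI-probability score (0.0 to 1.0) to a coarse label.

    The thresholds are illustrative assumptions, not values used by any
    real detection platform.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0.0 and 1.0")
    if score >= likely:
        return "likely AI-generated"
    if score <= unlikely:
        return "likely authentic"
    # Scores in the middle band should prompt further manual verification.
    return "inconclusive"


if __name__ == "__main__":
    # A score of roughly 0.9, as reported for the viral clip.
    print(interpret_ai_score(0.89))
```

Under these assumed thresholds, a score near 0.9 falls in the "likely AI-generated" band, while mid-range scores would call for additional checks such as reverse image search.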

Conclusion

The viral video claiming to show an aircraft carrier being destroyed in a sea storm is not related to any real incident. It is a computer-generated, AI-created video that is being falsely shared online as a real natural disaster. By circulating such fabricated visuals without verification, social media users are contributing to the spread of misinformation.

Related Blogs

Executive Summary:

Old footage of Indian cricketer Virat Kohli celebrating Ganesh Chaturthi in September 2023 is being shared with the false claim that it shows him at the Ram Mandir consecration ceremony in Ayodhya on January 22, 2024. The Hindi newspaper Dainik Bhaskar and the Gujarati newspaper Divya Bhaskar also carried the now-viral video in their respective editions on January 23, 2024, amplifying the false claim. Our investigation found that the video is old and shows the cricketer attending a Ganesh Chaturthi celebration.

Claims:

Many social media posts, as well as news outlets such as the Hindi newspaper Dainik Bhaskar and the Gujarati newspaper Divya Bhaskar, claim that the video shows Virat Kohli attending the Ram Mandir consecration ceremony in Ayodhya on January 22. Our investigation found that the video actually shows him attending a Ganesh Chaturthi celebration in September 2023.

The caption in the Dainik Bhaskar e-paper reads, “क्रिकेटर विराट कोहली भी नजर आए” (“Cricketer Virat Kohli was also seen”).

Fact Check:

The CyberPeace Research Team ran a reverse image search on the video, which returned several earlier posts showing Kohli in the same black outfit. Among them, a Bollywood entertainment Instagram profile named Bollywood Society had shared the same video on its page on 20 September 2023 with the caption, “Virat Kohli snapped for Ganapaati Darshan.”

Taking a cue from this, we ran a keyword search and found an article by Free Press Journal. According to the article, Virat Kohli visited the residence of Shiv Sena leader Rahul Kanal to seek the blessings of Lord Ganpati. The viral video and the claim made by the news outlets are therefore false and misleading.

Conclusion:

The footage shared in the viral videos and by the news outlets is old: it shows Virat Kohli attending a Ganesh Chaturthi celebration in 2023, not the recent Ram Mandir Pran Pratishtha. We also found no confirmation that Virat Kohli attended the ceremony in Ayodhya on 22 January. Hence, we found this claim to be fake.

- Claim: Virat Kohli attending the Ram Mandir consecration ceremony in Ayodhya on January 22

- Claimed on: YouTube, X

- Fact Check: Fake

Overview:

In today’s digital landscape, safeguarding personal data and communications is more crucial than ever. WhatsApp, as one of the world’s leading messaging platforms, consistently enhances its security features to protect user interactions, offering a seamless and private messaging experience.

App Lock: Secure Access with Biometric Authentication

To fortify security at the device level, WhatsApp offers an app lock feature, enabling users to protect their app with biometric authentication such as fingerprint or Face ID. This feature ensures that only authorized users can access the app, adding an additional layer of protection to private conversations.

How to Enable App Lock:

- Open WhatsApp and navigate to Settings.

- Select Privacy.

- Scroll down and tap App Lock.

- Activate Fingerprint Lock or Face ID and follow the on-screen instructions.

Chat Lock: Restrict Access to Private Conversations

WhatsApp allows users to lock specific chats, moving them to a secured folder that requires biometric authentication or a passcode for access. This feature is ideal for safeguarding sensitive conversations from unauthorized viewing.

How to Lock a Chat:

- Open WhatsApp and select the chat to be locked.

- Tap on the three dots (Android) or More Options (iPhone).

- Select Lock Chat.

- Enable the lock using Fingerprint or Face ID.

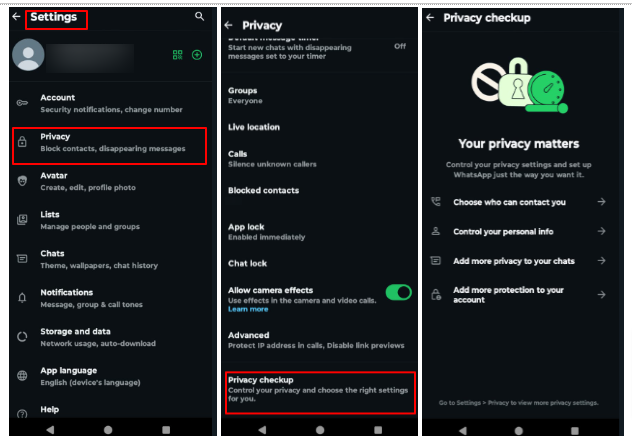

Privacy Checkup: Strengthening Security Preferences

The privacy checkup tool assists users in reviewing and customizing essential security settings. It provides guidance on adjusting visibility preferences, call security, and blocked contacts, ensuring a personalized and secure communication experience.

How to Run Privacy Checkup:

- Open WhatsApp and navigate to Settings.

- Tap Privacy.

- Select Privacy Checkup and follow the prompts to adjust settings.

Automatic Blocking of Unknown Accounts and Messages

To combat spam and potential security threats, WhatsApp automatically restricts unknown accounts that send excessive messages. Users can also manually block or report suspicious contacts to further enhance security.

How to Manage Blocking of Unknown Accounts:

- Open WhatsApp and go to Settings.

- Select Privacy.

- Tap Advanced.

- Enable Block unknown account messages.

IP Address Protection in Calls

To prevent tracking and enhance privacy, WhatsApp provides an option to hide IP addresses during calls. When enabled, calls are routed through WhatsApp’s servers, preventing location exposure via direct connections.

How to Enable IP Address Protection in Calls:

- Open WhatsApp and go to Settings.

- Select Privacy, then tap Advanced.

- Enable Protect IP Address in Calls.

Disappearing Messages: Auto-Deleting Conversations

Disappearing messages help maintain confidentiality by automatically deleting sent messages after a predefined period—24 hours, 7 days, or 90 days. This feature is particularly beneficial for reducing digital footprints.

How to Enable Disappearing Messages:

- Open the chat and tap the Chat Name.

- Select Disappearing Messages.

- Choose the preferred duration before messages disappear.
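The timer behaviour described above can be illustrated with a short sketch. This is only a model of the idea under stated assumptions, not WhatsApp's implementation: the names `TIMERS`, `expiry_time`, and `is_expired` are hypothetical, and only the three durations come from the text.

```python
from datetime import datetime, timedelta, timezone

# The three durations mentioned in the text above.
TIMERS = {
    "24 hours": timedelta(hours=24),
    "7 days": timedelta(days=7),
    "90 days": timedelta(days=90),
}


def expiry_time(sent_at: datetime, timer: str) -> datetime:
    """Return the moment a message sent at `sent_at` would disappear."""
    if timer not in TIMERS:
        raise ValueError(f"unknown timer: {timer!r}")
    return sent_at + TIMERS[timer]


def is_expired(sent_at: datetime, timer: str, now: datetime) -> bool:
    """Return True once `now` has reached the message's expiry time."""
    return now >= expiry_time(sent_at, timer)


if __name__ == "__main__":
    sent = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    print(expiry_time(sent, "7 days"))
    print(is_expired(sent, "24 hours", datetime(2024, 1, 3, tzinfo=timezone.utc)))
```

Using timezone-aware timestamps keeps the comparison unambiguous when sender and recipient are in different time zones.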

View Once: One-Time Access to Media Files

The ‘View Once’ feature ensures that shared photos and videos can only be viewed a single time before being automatically deleted, reducing the risk of unauthorized storage or redistribution.

How to Send View Once Media:

- Open a chat and tap the attachment icon.

- Choose Camera or Gallery to select media.

- Tap the ‘1’ icon before sending the media file.

Group Privacy Controls: Manage Who Can Add You

WhatsApp provides users with the ability to control group invitations, preventing unwanted additions by unknown individuals. Users can restrict group invitations to ‘Everyone,’ ‘My Contacts,’ or ‘My Contacts Except…’ for enhanced privacy.

How to Adjust Group Privacy Settings:

- Open WhatsApp and go to Settings.

- Select Privacy and tap Groups.

- Choose from the available options: Everyone, My Contacts, or My Contacts Except.
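To make the difference between the three options concrete, here is a minimal sketch of the decision each setting implies. It is an illustrative model, not WhatsApp's code: the function `can_add_to_group` and its parameters are hypothetical, and it assumes that 'My Contacts Except' means saved contacts minus a user-chosen exclusion list.

```python
from typing import Optional, Set


def can_add_to_group(
    setting: str,
    adder: str,
    my_contacts: Set[str],
    excluded: Optional[Set[str]] = None,
) -> bool:
    """Decide whether `adder` may add the user to a group directly."""
    excluded = excluded or set()
    if setting == "Everyone":
        return True
    if setting == "My Contacts":
        return adder in my_contacts
    if setting == "My Contacts Except":
        # Assumption: excluded contacts are treated like non-contacts.
        return adder in my_contacts and adder not in excluded
    raise ValueError(f"unknown setting: {setting!r}")


if __name__ == "__main__":
    contacts = {"asha", "ravi"}
    print(can_add_to_group("My Contacts", "stranger", contacts))
    print(can_add_to_group("My Contacts Except", "ravi", contacts, {"ravi"}))
```

The sketch shows why 'Everyone' is the least restrictive choice: it is the only branch that does not consult the contact list at all.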

Conclusion

WhatsApp continuously enhances its security features to protect user privacy and ensure safe communication. With tools like App Lock, Chat Lock, Privacy Checkup, IP Address Protection, and Disappearing Messages, users can safeguard their data and interactions. Features like View Once and Group Privacy Controls further enhance confidentiality. By enabling these settings, users can maintain a secure and private messaging experience, effectively reducing risks associated with unauthorized access, tracking, and digital footprints. Stay updated and leverage these features for enhanced security.

Introduction

The Digital Personal Data Protection (DPDP) Act 2023 is an essential step towards protecting and prioritising users’ privacy and personal data. The Act is designed to put user consent at the centre of data processing while keeping services such as online shopping and intermediary platforms uninterrupted. It specifies that once a user gives consent to a platform, the platform can process the data until the user withdraws that consent. This gives users control over their data and makes platforms accountable for how it is used.

A keen Outlook

The Act also limits data processing to the specific purpose for which the user gave consent. This prevents misuse and ensures that data is processed only for the purpose for which it was originally obtained from the user.

- Data Fiduciary and Processing on Online Shopping Platforms: The Act places strong emphasis on users’ consent. Once consent is provided, the Data Fiduciary can continue to process the data until the Data Principal specifically withdraws it.

- Detailed Analysis

- Consent as a Foundation: The Act makes the user's consent the backbone of data processing and sets clear boundaries for it. Collecting, processing, and storing data must all comply with the user's consent.

- Uninterrupted Data Processing: Once the user has given consent, intermediaries face no time limit. Processing can continue for as long as the user does not withdraw consent.

- Consent and Order Fulfilment: Consent, once provided, covers all activities related to the specific purpose for which it was given, including subsequent actions such as order fulfilment.

- Detailed Analysis

- Purpose-Limited Consent: The consent given is purpose-limited. The platform cannot misuse the obtained data for its own purposes.

- Seamless User Experience: Because the user's consent covers the full transaction, users are spared repeated consent requests during ongoing activities.

- Data Retention and Erasure on Online Platforms: Platforms must minimise the data they hold once its utilisation period ends. This obligation extends to any third-party processors they engage.

- Detailed Analysis

- Minimisation and Security Assurance: Compulsory removal of data after use reduces the volume of data that platforms hold, which in turn lowers the risk to that data.

- Third-Party Accountability, User Privacy Protection.
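The consent rules outlined above (consent stays valid until withdrawn, and covers only the purpose it was given for) can be sketched as a small data structure. This is an illustrative model under stated assumptions, not text from the Act or any platform's implementation: the class `ConsentRecord`, its fields, and the purpose labels are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ConsentRecord:
    """One Data Principal's consent for one specific purpose."""

    principal_id: str
    purpose: str
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None

    def withdraw(self, when: datetime) -> None:
        """Record the moment the Data Principal withdraws consent."""
        self.withdrawn_at = when

    def permits(self, purpose: str, at: datetime) -> bool:
        """Check whether processing for `purpose` is covered at time `at`."""
        if purpose != self.purpose:
            return False  # purpose limitation
        if at < self.granted_at:
            return False  # no consent existed yet
        # Consent has no expiry of its own; it ends only on withdrawal.
        return self.withdrawn_at is None or at < self.withdrawn_at


if __name__ == "__main__":
    utc = timezone.utc
    record = ConsentRecord("user-1", "order_fulfilment", datetime(2024, 1, 1, tzinfo=utc))
    print(record.permits("order_fulfilment", datetime(2024, 6, 1, tzinfo=utc)))
    print(record.permits("advertising", datetime(2024, 6, 1, tzinfo=utc)))
    record.withdraw(datetime(2024, 7, 1, tzinfo=utc))
    print(record.permits("order_fulfilment", datetime(2024, 7, 2, tzinfo=utc)))
```

Keeping one record per purpose, rather than a single blanket flag, is what lets a platform honour consent for order fulfilment while refusing unrelated uses of the same data.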

Influence from Global frameworks

Drawing on global trends and similar legislation (such as the European Union’s GDPR), here are some of the changes for intermediaries and social media platforms following the implementation of the DPDP Act 2023.

- Solidified Consent Mechanism: Platforms and intermediaries need to ensure that users’ consent is explicit, informed, and specific to the purpose for which the data is obtained. This may lead to more user-friendly consent forms and prompts.

- Data Minimisation: Platforms need to collect only the data necessary for the specific purpose mentioned and must not retain information beyond its utility.

- Transparency and Accountability: Data-collecting platforms need to ensure transparency in their data collection, processing, and sharing practices. This involves more detailed policies and regular audits.

- Data Portability: Users have the right to request a copy of their own data in a usable format, allowing them to switch platforms effectively.

- Right to Erasure: Users have the right to request deletion of their data, also referred to as the “Right to be Forgotten”.

- Prescribed Reporting: In the event of a data breach, intermediary platforms are required to report the incident to the regulatory authorities within a specified timeline.

- Data Protection Authorities: With data breaches on the rise, large platforms appoint data protection officers, who are responsible for compliance with data protection guidelines.

- Disciplined Policies: Non-compliance may lead to heavy fines, making investment in data protection measures indispensable.

- Third-Party Audits: Intermediaries have to undergo security audits by external auditors to ensure they are meeting the expectations of the applicable compliance requirements.

- Third-Party Information Sharing Restrictions: Sharing personal information and users’ data with third parties (such as advertisers) comes with more detailed and stricter guidelines and requires user consent.

Conclusion

The Digital Personal Data Protection Act 2023 prioritises user consent, ensuring uninterrupted services and purpose-limited data processing. It aims to prevent data misuse, emphasising seamless user experiences and data minimisation. Drawing inspiration from global frameworks like the EU's GDPR, it introduces solidified consent mechanisms, transparency, and accountability. Users gain rights such as data portability and data deletion requests. Non-compliance results in significant fines. This legislation sets a new standard for user privacy and data protection, empowering users and holding platforms accountable. In an evolving digital landscape, it plays a crucial role in ensuring data security and responsible data handling.

References:

- https://www.meity.gov.in/writereaddata/files/Digital%20Personal%20Data%20Protection%20Act%202023.pdf

- https://www.mondaq.com/india/privacy-protection/1355068/data-protection-law-in-india-analysis-of-dpdp-act-2023-for-businesses--part-i

- https://www.hindustantimes.com/technology/explained-indias-new-digital-personal-data-protection-framework-101691912775654.html