#FactCheck - 2013 Aircraft Video Misleadingly Shared as Ajit Pawar’s Plane Accident

Executive Summary:

A video showing poor runway visibility from inside an aircraft cockpit is being widely shared on social media, where it is linked to an alleged aircraft accident involving Maharashtra Deputy Chief Minister Ajit Pawar in Baramati on January 28, 2026. Users claim that the footage captures the final moments before the crash and that the runway disappeared from view just seconds before landing. However, research conducted by CyberPeace found the viral claim to be misleading. The video has no connection to any aircraft accident involving Deputy Chief Minister Ajit Pawar. In reality, it dates back to 2013 and shows a pilot attempting to land an aircraft in heavy rain. During the approach, the runway briefly disappears from the pilot’s view, prompting the pilot to abort the landing and execute a go-around. The aircraft later lands safely after weather conditions improve.

Claim

An Instagram user shared the viral video on January 29, 2026, claiming: “Baramati plane crash: video of the aircraft accident surfaces. Runway disappears just three seconds before landing.” (The link to the post, its archived version, and screenshots are provided below.)

Fact Check

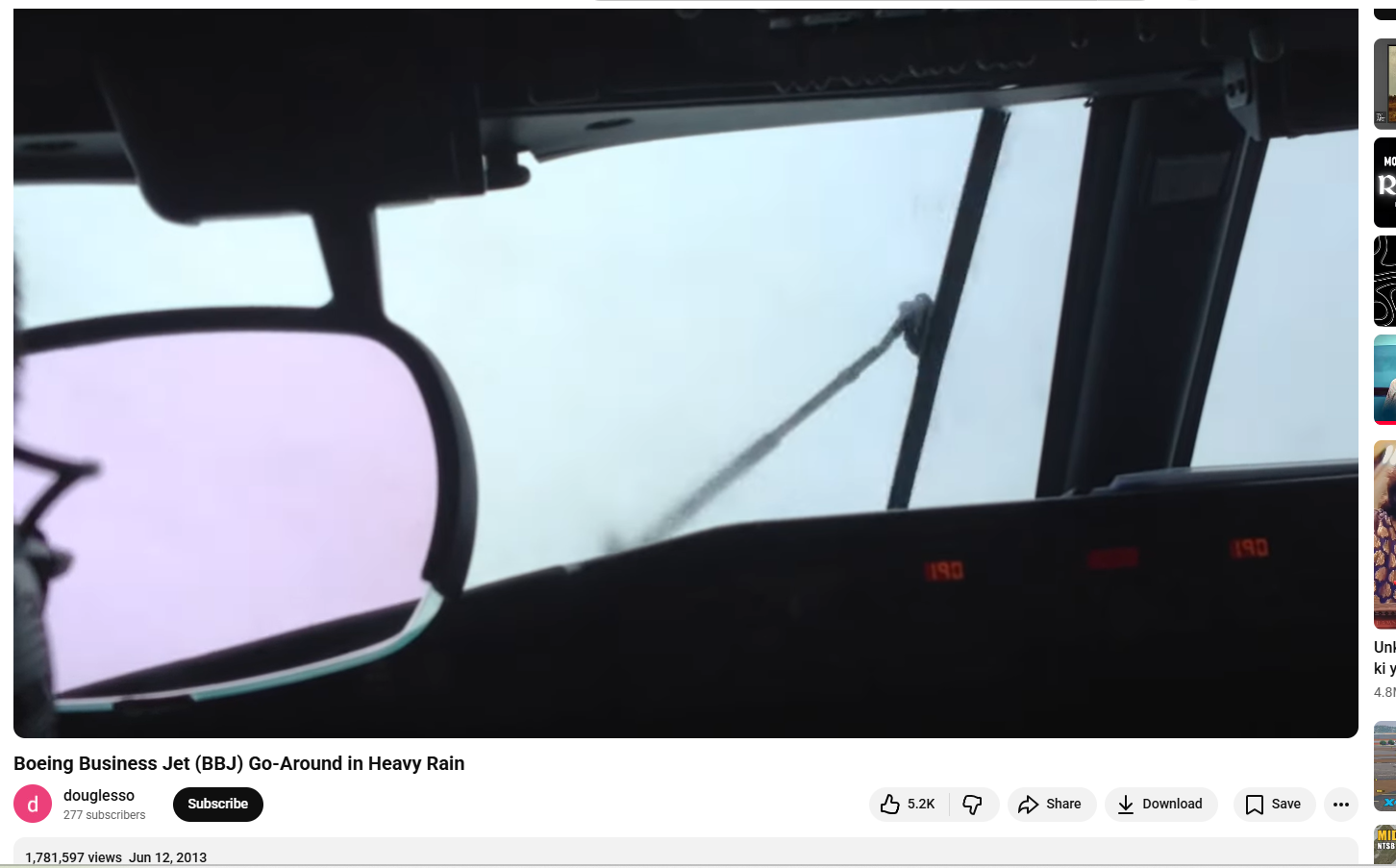

To verify the claim, we extracted keyframes from the viral video and conducted a reverse image search using Google Lens. The search led us to the same video, uploaded on June 12, 2013, to a YouTube channel named douglesso. (Footage link and screenshot available below.)
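For readers who want to reproduce the keyframe step, here is a minimal sketch. It is not the tooling used in this fact check, which the report does not specify. It assumes the clip is saved locally and that ffmpeg is installed. The file name `viral_clip.mp4`, the output folder `frames`, and both function names are hypothetical. The script only builds the ffmpeg commands and does not run them. The resulting PNG files could then be uploaded manually to a reverse image search tool.

```python
from pathlib import Path


def sample_timestamps(duration_s: float, count: int) -> list[float]:
    """Return `count` evenly spaced timestamps strictly inside the clip."""
    if duration_s <= 0 or count <= 0:
        return []
    step = duration_s / (count + 1)
    return [round(step * i, 3) for i in range(1, count + 1)]


def ffmpeg_frame_command(video: str, timestamp_s: float, out_dir: str) -> list[str]:
    """Build (but do not run) an ffmpeg command that saves one frame as PNG."""
    out_path = Path(out_dir) / f"frame_{timestamp_s:08.3f}.png"
    return [
        "ffmpeg", "-y",              # overwrite existing output files
        "-ss", f"{timestamp_s:.3f}",  # seek to the chosen timestamp
        "-i", video,                 # input video (hypothetical path)
        "-frames:v", "1",            # write exactly one video frame
        str(out_path),
    ]


if __name__ == "__main__":
    # Assumed 60-second clip sampled at five points; adjust to the real duration.
    for t in sample_timestamps(duration_s=60.0, count=5):
        print(" ".join(ffmpeg_frame_command("viral_clip.mp4", t, "frames")))
```

Evenly spaced sampling is a simple choice. Frames that show distinctive details, such as the runway lights or cockpit instruments, tend to give better reverse search matches than blurred or rain-obscured ones.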

Further research led us to a report published by the American media website CNET, which featured the same visual. According to the report, the video shows a Boeing Business Jet attempting to land during heavy rainfall. The aircraft was conducting a CAT I Instrument Landing System (ILS) approach when a sudden downpour drastically reduced visibility at decision height. As the runway briefly disappeared from view, the pilots aborted the landing and carried out a go-around. The aircraft later landed safely once weather conditions improved. (The link to the CNET report and its screenshot are provided below.)

- https://www.cnet.com/culture/this-is-what-happens-when-a-plane-is-landing-and-the-runway-disappears/

Conclusion

Our research confirms that the video circulating on social media is unrelated to any recent aircraft accident involving Maharashtra Deputy Chief Minister Ajit Pawar. The clip is an old video from 2013, which is now being shared with a false and misleading claim.
