#FactCheck

Executive Summary:

A video that allegedly shows cars driving over an Indian flag while Pakistani flags fly overhead in an Indian state went viral on social media, but the claim has been found to be misleading. The video is from neither Kerala nor Tamil Nadu, as claimed, but from Karachi, Pakistan. Specific details, such as a shop's name, the Pakistani flags, a car’s number plate, and geolocation analysis, establish where the video was filmed. The false claim underscores the importance of verifying information before sharing it.

Claims:

A video circulating on social media shows cars trampling the Indian Tricolour painted on a road, as Pakistani flags are raised in pride, with the incident allegedly taking place in Tamil Nadu or Kerala.

Fact Check:

Upon receiving the post, we watched the video closely and found several signs indicating that it was from Pakistan and not from anywhere in India.

We divided the video into keyframes and found a shop name near the road.
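The report does not say which tool was used to split the video. As a rough sketch of the idea only, the snippet below keeps a frame whenever it differs enough from the last kept frame. The frame representation (flattened grayscale pixel lists), the function names, and the threshold value are all assumptions made for illustration; a real workflow would first decode the video with a dedicated tool.

```python
from typing import List, Sequence

# Hypothetical representation: each frame is a flat sequence of
# grayscale pixel values in the range 0-255.
Frame = Sequence[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel difference between two equally sized frames."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def select_keyframes(frames: List[Frame], threshold: float = 30.0) -> List[int]:
    """Return indexes of frames that differ noticeably from the last kept frame.

    The threshold is an assumed value, not one taken from the report.
    """
    if not frames:
        return []
    kept = [0]          # always keep the first frame
    last = frames[0]
    for index, frame in enumerate(frames[1:], start=1):
        if mean_abs_diff(last, frame) > threshold:
            kept.append(index)
            last = frame
    return kept


# Tiny synthetic "video": three scenes, with near-duplicate frames in between.
demo_frames = [[0] * 4, [2] * 4, [100] * 4, [101] * 4, [200] * 4]
demo_keyframes = select_keyframes(demo_frames)
```

Each selected frame can then be enhanced or submitted to a reverse image search, which is how the keyframes are used in the steps that follow.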

We enhanced the image quality to see the shop name clearly.

The name reads ‘Sanam’, and Pakistani flags can be seen waving along the road. Taking a cue from this, we ran keyword searches with the shop name. We found several shops with that name, and one of them, ‘Sanam Boutique’ in Karachi, Pakistan, was found to match when analyzed using geospatial techniques.

While geolocating the place, we also found that the structure of the building is similar to the one in the viral video.

The car’s number plate seen in the keyframes of the video provides additional confirmation of the location.

We found a website that shows the details of the number plate in Karachi, Pakistan.

Upon thorough investigation, we found that the location in the viral video is Karachi, Pakistan, and not Kerala or Tamil Nadu as claimed by various users on social media. Hence, the claim is false and misleading.

Conclusion:

The video circulating on social media, claiming to show cars trampling the Indian Tricolour on a road while Pakistani flags are waved, does not depict an incident in Kerala or Tamil Nadu as claimed. Fact-checking methods have confirmed that the video was filmed in Karachi, Pakistan. The misrepresentation shows the importance of verifying the source of any information before sharing it on social media, to prevent the spread of false narratives.

- Claim: A video shows cars trampling the Indian Tricolour painted on a road, as Pakistani flags are raised in pride, taking place in Tamil Nadu or Kerala.

- Claimed on: X (formerly known as Twitter)

- Fact Check: Fake & Misleading

Executive Summary:

Viral social media posts sharing several photos of Indian Army soldiers eating lunch in extremely hot weather near the border area in Barmer/Jaisalmer, Rajasthan, have been found to be AI-generated and false. The images contain various faults, such as missing shadows, distorted hand positioning, and misrepresentation of the Indian flag and the soldiers' body features. AI image detection tools were also used to validate this finding. Before sharing any picture on social media, it is necessary to verify its authenticity to avoid spreading misinformation.

Claims:

Photographs of Indian Army soldiers having lunch in extremely high temperatures at the border area near the districts of Barmer/Jaisalmer, Rajasthan, have been circulated on social media.

Fact Check:

On studying the images, we observed that they share many anomalies commonly found in AI-generated images: inaccurate body features of the soldiers, a national flag with the wrong combination of colors, an unusually sized spoon, and the absence of the soldiers’ shadows.

Additionally, the flag on the soldiers’ shoulders appears wrong and does not follow the traditional tricolor pattern. Another anomaly, soldiers with three arms, strengthens the case that the images are AI-generated.

Furthermore, we ran the photos through the HIVE AI image detection tool, which found that each photo was generated using artificial intelligence.

We also checked with another AI image detection tool named Isitai, which likewise found the images to be AI-generated.

After thorough analysis, we found that the claim made in each of the viral posts is misleading and fake. The recent viral images of Indian Army soldiers eating food at the border on an extremely hot afternoon in Barmer were generated using an AI image creation tool.

Conclusion:

In conclusion, the analysis of the viral photographs claiming to show Indian Army soldiers having lunch in scorching heat in Barmer, Rajasthan, reveals many anomalies consistent with AI-generated images. The absence of shadows, distorted hand placement, irregular depiction of the Indian flag, and the presence of an extra arm on a soldier all indicate that the images are artificially created. Therefore, the claim that these images capture real-life events is debunked, which emphasizes the importance of analyzing and fact-checking content before sharing it in an era of widespread digital misinformation.

- Claim: The photo shows Indian army soldiers having their lunch in extreme heat near the border area in Barmer/Jaisalmer, Rajasthan.

- Claimed on: X (formerly known as Twitter), Instagram, Facebook

- Fact Check: Fake & Misleading

Executive Summary:

An image spread on social media claims to show a truck carrying money and gold coins that was impounded by the Jharkhand Police during the 2024 Lok Sabha elections. The CyberPeace Research Wing verified the image and found it to be generated using artificial intelligence. No credible news articles support the claim that the police made such a seizure in Jharkhand. The image was checked using AI image detection tools, which found it to be AI-made. It is advised to share any image or content only after verifying its authenticity.

Claims:

The viral social media post depicts a truck intercepted by the Jharkhand Police during the 2024 Lok Sabha elections. It was claimed that the truck was filled with large amounts of cash and gold coins.

Fact Check:

On receiving the posts, we started with a keyword search for news articles related to the post. If such a big incident had really happened, it would have been covered by most media houses, but we found no such articles. We then closely analysed the image for anomalies commonly found in AI-generated images, and found several.

The texture of the tree in the image appears blended. The shadows of the people also look odd, which is a common flaw in AI-generated images and adds to the suspicion. A close look at the right hand of the old man in white attire shows that his thumb is blended into his apparel.

We then analysed the image in an AI image detection tool named ‘Hive Detector’. Hive Detector found the image to be AI-generated.

To validate the AI fabrication, we checked with another AI image detection tool named ‘ContentAtScale AI detection’, which rated the image as 82% AI-generated.

After validation of the viral post using AI detection tools, it is apparent that the claim is misleading and fake.

Conclusion:

The viral image of the truck impounded by Jharkhand Police is AI-generated, and no credible source supports the claim. Hence, the claim is false and misleading. The CyberPeace Research Wing has previously debunked similar AI-generated images with misleading claims. Netizens must verify such news circulating on social media with bogus claims before sharing it further.

- Claim: The photograph shows a truck intercepted by Jharkhand Police during the 2024 Lok Sabha elections, which was allegedly loaded with huge amounts of cash and gold coins.

- Claimed on: Facebook, Instagram, X (formerly known as Twitter)

- Fact Check: Fake & Misleading

Executive Summary:

A video clip circulating on social media allegedly shows the Hon’ble President of India, Smt. Droupadi Murmu, TV anchor Anjana Om Kashyap, and the Hon’ble Chief Minister of Uttar Pradesh, Shri Yogi Adityanath, promoting a medicine for diabetes. After a thorough investigation by the CyberPeace Research Team, the claim was found to be untrue. The video was digitally edited, with original footage of these prominent persons altered to falsely suggest that they endorse the medication. Specific discrepancies in the lip movements and in the context of the clips indicated AI manipulation. Additionally, the persons featured in the video were actually discussing unrelated topics in their original footage. Therefore, the claim that the video shows such prominent figures endorsing a diabetes drug is debunked. The analysis concludes that the video is an AI creation and does not reflect any genuine promotion. AI voice detection tools also flagged the audio as AI-generated.

Claims:

A video making the rounds on social media purports to show the Hon'ble President of India, Smt. Droupadi Murmu, TV anchor Anjana Om Kashyap, and the Hon'ble Chief Minister of Uttar Pradesh, Shri Yogi Adityanath, endorsing a diabetes medicine.

Fact Check:

Upon receiving the post, we watched the video carefully and found discrepancies between the lip movements and the words we can hear. The voice of the Chief Minister of Uttar Pradesh, Shri Yogi Adityanath, also sounds suspicious, which indicates fabrication. In the video, we can hear the Hon'ble President of India, Smt. Droupadi Murmu, endorsing a medicine that supposedly cured her diabetes. We then divided the video into keyframes and reverse-searched one of the frames. We landed on a video uploaded by Aaj Tak on its official YouTube channel.

The footage resembled the viral video and carried a courtesy credit to Sansad TV. Taking a cue from this, we ran keyword searches and found another video, uploaded by the Sansad TV YouTube channel. In this video, we found no mention of any diabetes medicine. It was actually the swearing-in ceremony of the Hon’ble President of India, Smt. Droupadi Murmu.

In the second part, a man is introduced as Dr. Abhinash Mishra, who allegedly invented the medicine that cures diabetes. We reverse-searched the image of that person and landed on a CNBC news website where the same person was identified as Dr. Atul Gawande, a professor at Harvard School of Public Health. We watched the video and found no sign of him endorsing or talking about any diabetes medicine he invented.

We also extracted the audio from the viral video and analyzed it using the AI audio detection tool named Eleven Labs, which found the audio very likely to have been created using an AI voice generation tool, with a probability of 98%.

Hence, the claim made in the viral video is false and misleading. The video is digitally edited from different clips, and the audio is generated using an AI voice creation tool to mislead netizens. It is worth noting that we have previously debunked similar voice-altered videos with bogus claims.

Conclusion:

In conclusion, the viral video claiming that the Hon'ble President of India, Smt. Droupadi Murmu, and the Chief Minister of Uttar Pradesh, Shri Yogi Adityanath, promoted a diabetes medicine that cured their diabetes is false. A thorough investigation found that the video is digitally edited from different clips: the clip of Smt. Droupadi Murmu is taken from the oath-taking ceremony of the 15th President of India, and the footage of the man presented as Dr. Abhinash Mishra comes from a video found on the CNBC news outlet. His real name is Dr. Atul Gawande, a professor at Harvard School of Public Health. Online users must be careful when receiving such posts and should verify them before sharing them with others.

- Claim: A video is being circulated on social media claiming to show distinguished individuals promoting a particular medicine for diabetes treatment.

- Claimed on: Facebook

- Fact Check: Fake & Misleading

Executive Summary:

A video has gone viral that claims to show the Hon'ble Minister of Home Affairs, Shri Amit Shah, stating that the BJP-led Central Government intends to end quotas for scheduled castes (SCs), scheduled tribes (STs), and other backward classes (OBCs). On further investigation, the claim turns out to be false: we found the original clip from an official source, in which Shah, delivering a speech in Telangana, spoke about religion-based reservations, with specific reference to Muslim reservations. The viral video is digitally altered, and thus the claim is false.

Claims:

A video that has gone viral on social media platforms allegedly shows the Hon'ble Minister of Home Affairs, Shri Amit Shah, saying that the reservation quotas for scheduled castes (SCs), scheduled tribes (STs), and other backward classes (OBCs) will be terminated if a BJP government is formed again.

English Translation: If the BJP government is formed again we will cancel ST, SC reservations: Hon'ble Minister of Home Affairs, Shri Amit Shah

Fact Check:

When we received the video, we noted that the news channel shown in it was V6 News. We divided the video into keyframes and reverse-searched the images. For one of the keyframes, we found a similar video with the caption “Union Minister Amit Shah Comments Muslim Reservations | V6 Weekend Teenmaar”, uploaded by V6 News Telugu’s verified YouTube channel on April 23, 2023. Taking a cue from this, we also ran keyword searches to find any relevant sources. In the video, at the 2:38 timestamp, the Hon'ble Minister of Home Affairs, Shri Amit Shah, talks about religion-based reservations, calling the Muslim reservation ‘unconstitutional’ and saying that the Government will remove it.

Further, he says that SCs, STs, and OBCs are fully entitled to their quotas, unlike the Muslim reservation.

During the reverse image search, we found many other videos uploaded by media outlets such as ANI, Hindustan Times, and The Economic Times about ending Muslim reservations in Telangana state, but we found no evidence supporting the viral claim of removing the SC, ST, and OBC quota system. After further analysis for signs of alteration, we found that the viral video was edited and that the original statement is different. Hence, the claim is misleading and false.

Conclusion:

The video featuring the Hon'ble Minister of Home Affairs, Shri Amit Shah, announcing that the reservation quota system for SCs, STs, and OBCs will be removed if a BJP government is formed again in the ongoing Lok Sabha election, is debunked. After careful analysis, it was found that the video was fake and was created to misrepresent his actual statement. The original footage was found on the V6 News Telugu YouTube channel, in which Shri Amit Shah was talking about religion-based reservations, particularly Muslim reservations in Telangana. He did not mention ending SC, ST, and OBC reservations.

- Claim: The viral video shows the Hon'ble Minister of Home Affairs, Shri Amit Shah, asserting that the BJP government will soon remove reservation quotas for scheduled castes (SCs), scheduled tribes (STs), and other backward classes (OBCs).

- Claimed on: X (formerly known as Twitter)

- Fact Check: Fake & Misleading

Executive Summary:

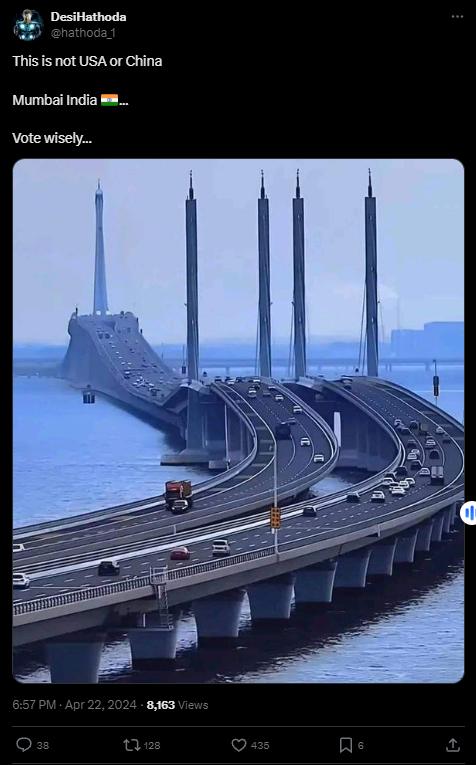

The claim that a photograph circulated on social media shows a bridge in Mumbai, India was found to be false. Through reverse image searches, examination of similar videos, and comparison with reputable news sources and Google Images, it has been found that the bridge in the viral photo is the Qingdao Jiaozhou Bay Bridge located in Qingdao, China. Multiple pieces of evidence, including matching architectural features and corroborating videos, show that the bridge is not in Mumbai. No credible reports or sources have been found to prove the existence of a similar bridge in Mumbai.

Claims:

Social media users claim a viral image of the bridge is from Mumbai.

Fact Check:

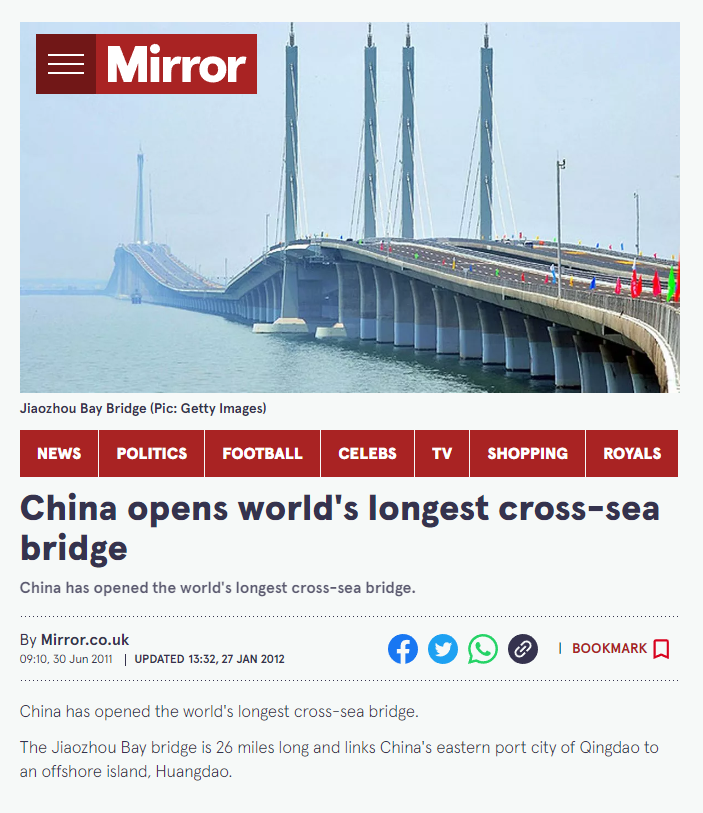

Once the image was received, we ran a reverse image search to find any leads or related information. We found an image published by the Mirror news outlet; although it is not conclusive on its own, it shows the same upper pillars and the same white foundation pillars as the viral image.

The bridge is the Jiaozhou Bay Bridge in China, which connects the eastern port city of Qingdao to an offshore island named Huangdao.

Taking a cue from this, we then searched for the bridge to find any other related images or videos. We found a YouTube video uploaded by a channel named xuxiaopang, which shows similar structures, such as the pillars and the road design.

In the reverse image search, we found another news article about the same bridge in China, which looks very similar.

Given the lack of evidence and credible sources for the opening of a similar bridge in Mumbai, and after a thorough investigation, we concluded that the claim made about the viral image is misleading and false. The bridge is located in China, not in Mumbai.

Conclusion:

In conclusion, fact-checking found that the claim that the viral image shows a bridge in Mumbai, India is false. The bridge in the picture is the Qingdao Jiaozhou Bay Bridge, located in Qingdao, China. Several sources, such as reverse image searches, videos, and reliable news outlets, confirm this. No evidence exists to suggest that there is such a bridge in Mumbai. Therefore, the claim is false because the actual bridge is in China, not in Mumbai.

- Claim: The bridge seen in the popular social media posts is in Mumbai.

- Claimed on: X (formerly known as Twitter), Facebook

- Fact Check: Fake & Misleading

Executive Summary:

A video making the rounds on the internet allegedly features Ranveer Singh criticizing Prime Minister Narendra Modi and his Government. However, a close examination revealed that the audio has been tampered with. In fact, the original videos posted by different media outlets show Ranveer Singh praising Varanasi, professing his love for Lord Shiva, and acknowledging Modiji’s role in enhancing the cultural charms and infrastructural development of the city. Differences in lip synchronization, and the fact that the original video contains no criticism of PM Modi, show that the video has been manipulated in order to spread misinformation.

Claims:

A viral video shows Bollywood actor Ranveer Singh criticizing Prime Minister Narendra Modi.

Fact Check:

Upon receiving the video, we divided it into keyframes and reverse-searched one of the images. We landed on another video of Ranveer Singh with a matching appearance, posted by an Instagram account named “The Indian Opinion News”. In the video, Ranveer Singh talks about his experience of visiting Kashi Vishwanath Temple with Bollywood actress Kriti Sanon. When we watched the full video, we found no criticism of PM Modi.

Taking a cue from this, we ran keyword searches to find the full video of the interview. We found many videos uploaded by media outlets, but none of them shows him criticizing PM Modi as claimed in the viral video.

Ranveer Singh shared his feelings about Lord Shiva, his opinions on the city, and his views on the efforts undertaken by Prime Minister Modi to keep the history and heritage of Varanasi alive, as well as on the city's ongoing development projects. The discrepancy in the viral clip is clear on close viewing: the lips are not in synchronization with the words we can hear. In the original video, the lips are in perfect synchronization with the audio. The lack of evidence for the claim and the discrepancies in the video show that it was edited to misrepresent the original interview of Bollywood actor Ranveer Singh. Hence, the claim is misleading and false.

Conclusion:

The video that claims to show Ranveer Singh criticizing PM Narendra Modi is not genuine. Further investigation shows that it has been edited by changing the audio. The original footage actually shows Singh speaking positively about Varanasi and Modi's work. The differences in lip-syncing and the lack of supporting evidence highlight the danger of misinformation created by simple editing. Ultimately, the claim is false and misleading.

- Claim: A viral video features Ranveer Singh criticizing Prime Minister Narendra Modi and his Government.

- Claimed on: X (formerly known as Twitter)

- Fact Check: Fake & Misleading

Executive Summary:

A recent claim going around on social media, that a child created sand sculptures of cricket legend Mahendra Singh Dhoni, has been proven false by the CyberPeace Research Team. The team discovered that the images were actually produced using an AI tool. Unusual details, such as extra fingers and unnatural characteristics in the sculptures, pointed to the likelihood of artificial creation. This suspicion was further substantiated by AI detection tools. The incident underscores the need to fact-check information before posting, as misinformation can quickly go viral on social media. Everyone is advised to assess content carefully to stop the spread of false information.

Claims:

The claim is that the photographs published on social media show sand sculptures of cricketer Mahendra Singh Dhoni made by a child.

Fact Check:

Upon receiving the posts, we carefully examined the images. The collage of 4 pictures has many anomalies, which are clear signs of AI-generated images.

In the first image, the left hand of the sand sculpture has 6 fingers, and in the word INDIA, the ‘A’ is not aligned with the other letters. In the second image, a finger of the boy is missing, and the sand sculpture has 4 toes on its front foot and 3 legs. In the third image, the boy's slipper is not fully visible, with only part of it showing, and in the fourth image, the boy's hand does not look like a hand. These are some of the major discrepancies clearly visible in the images.

We then checked the image using an AI image detection tool named ‘Hive’, which rated it as 100.0% AI-generated.

We then checked it with another AI image detection tool, ContentAtScale AI image detection, which rated it as 98% AI-generated.
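The way the two detector results are read together can be sketched as a small rule. The snippet below is only an illustration: the `DetectorResult` type, the `summarize` function, and the 0.8 cut-off are assumptions, not part of either tool or of the original workflow. The two scores are the ones reported above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DetectorResult:
    """One detector's output; ai_probability is a fraction between 0.0 and 1.0."""
    tool: str
    ai_probability: float


def summarize(results: List[DetectorResult], threshold: float = 0.8) -> str:
    """Combine several detector scores into a cautious, human-readable summary.

    The threshold is an assumed cut-off chosen for this sketch.
    """
    if not results:
        return "no detector results"
    flagged = [r for r in results if r.ai_probability >= threshold]
    if len(flagged) == len(results):
        return "all detectors flag the image as likely AI-generated"
    if flagged:
        return "detectors disagree; manual review needed"
    return "no detector flags the image as AI-generated"


# Scores reported in this fact check (100.0% and 98%).
reported = [
    DetectorResult("Hive", 1.00),
    DetectorResult("ContentAtScale", 0.98),
]
verdict = summarize(reported)
```

Detector scores are treated here as supporting evidence alongside the visual anomalies, not as proof on their own.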

From this, we concluded that the image is AI-generated and has no connection with the claim made in the viral social media posts. We have also previously debunked AI-generated artwork of a sand sculpture of Indian cricketer Virat Kohli, which had the same types of anomalies as those seen in this case.

Conclusion:

Taking into consideration the distortions spotted in the images and the result of AI detection tools, it can be concluded that the claim of the pictures representing the child's sand sculptures of cricketer Mahendra Singh Dhoni is false. The pictures are created with Artificial Intelligence. It is important to check and authenticate the content before posting it to social media websites.

- Claim: The collage of pictures shared on social media shows a child's sand sculptures of cricket player Mahendra Singh Dhoni.

- Claimed on: X (formerly known as Twitter), Instagram, Facebook, YouTube

- Fact Check: Fake & Misleading

Executive Summary:

The picture that went viral with the false story that Dhoni was supporting the Congress party actually shows his joy over Chennai Super Kings reaching 6 million followers on X (formerly known as Twitter) in 2020. Dhoni's gesture was misinterpreted by many, which resulted in the spread of false information. The CyberPeace Research Team investigated the photo's origins in depth and confirmed its authenticity through a reverse image search, which showed that news outlets and CSK's official social media channels had shared it. The case illustrates the value of fact verification and the role of accurate information in preventing the spread of fake news.

Claims:

An image of former Indian cricket captain Mahendra Singh Dhoni allegedly shows him urging people to vote for the Congress party: he is wearing the Chennai Super Kings (CSK) jersey, holding up his right palm and gesturing the number 'one' with his left index finger. In reality, he is celebrating Chennai Super Kings' milestone achievement on X (formerly Twitter) in 2020. Many people are sharing the misinterpretation, knowingly or unknowingly, on social media platforms.

Fact Check:

After receiving the post, we ran a reverse image search of the image and found a news article published by NDTV. According to the news outlet, Dhoni and his teammates were celebrating CSK's milestone of reaching six million followers on X (formerly known as Twitter) in the photos.

The image is marked as a tweet from @chennaiipl, so we looked into the official account of Chennai Super Kings on X (formerly known as Twitter). There we found the exact post, published on 5th October 2020.

Additionally, we found a video posted on the X (formerly known as Twitter) handle of CSK, featuring other cricketers celebrating the six-million-followers milestone and thanking the audience for their support. It was also posted on Oct 05, 2020. The caption of the video reads, “Chennai Super #SixerOnTwitter! A big thanks to all the super fans for each and every bouquet and brickbat throughout the last decade. All the #yellove to you. #WhistlePodu”

Therefore, it is easy to conclude that the claim that the viral image shows MS Dhoni supporting Congress is false and misleading.

Conclusion:

The information that circulated online regarding a picture of Mahendra Singh Dhoni supporting the Congress Party has been proven to be untrue. The actual photograph shows Dhoni celebrating the Chennai Super Kings reaching six million followers on social media in 2020. This highlights the need to check the facts of any news circulating online.

- Claim: A photo allegedly depicting former Indian cricket captain Mahendra Singh Dhoni encouraging people to support the Congress party in elections surfaced online.

- Claimed on: X (formerly known as Twitter)

- Fact Check: Fake & Misleading

Executive Summary:

A viral video in which South Indian actor Allu Arjun is supposedly seen supporting the Congress Party's campaign for the upcoming Lok Sabha election suggests that he has joined the Congress Party. In the course of its investigation, the CyberPeace Research Team found that the video is a close-up of Allu Arjun marching as the Grand Marshal of the 2022 India Day parade in New York, held to celebrate India’s 75th Independence Day. Reverse image searches, Allu Arjun's official YouTube channel, news coverage, and stock image websites all corroborate this. Thus, it has been firmly established that the claim that Allu Arjun is part of a Congress Party campaign is fabricated and misleading.

Claims:

The viral video alleges that South Indian actor Allu Arjun is using his popularity and star status to campaign for the Congress party in the upcoming 2024 Lok Sabha elections.

Fact Check:

Initially, after hearing the news, we ran a quick keyword search for any report of actor Allu Arjun joining the Congress Party but came across nothing. However, we found a video by SoSouth, posted on Feb 20, 2022, about Allu Arjun’s father-in-law Kancharla Chandrasekhar Reddy joining the Congress and quitting former chief minister K Chandrasekhar Rao's party.

Next, we segmented the video into keyframes, and then reverse searched one of the images which led us to the Federation of Indian Association website. It says that the picture is from the 2022 India Parade. The picture looks similar to the viral video, and we can compare the two to help us determine if they are from the same event.
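One simple way to check whether a keyframe and a candidate source image are likely the same picture is a perceptual "average hash". The sketch below is an illustrative technique, not the method used in this investigation: it assumes both images have already been converted to grayscale and downscaled to the same small grid, and the function names are hypothetical.

```python
from typing import List

# Hypothetical input: a small grayscale grid (rows of 0-255 values),
# e.g. an image already downscaled to 8x8 by an image tool.
Grid = List[List[int]]


def average_hash(pixels: Grid) -> int:
    """Build a bit string with 1 where a pixel is brighter than the grid mean."""
    flat = [value for row in pixels for value in row]
    if not flat:
        raise ValueError("image grid must not be empty")
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(hash_a: int, hash_b: int) -> int:
    """Number of differing bits; a small distance suggests similar images."""
    return bin(hash_a ^ hash_b).count("1")


# A keyframe, a slightly brighter copy of it, and an unrelated pattern.
keyframe = [[10, 200], [220, 30]]
brighter_copy = [[20, 210], [230, 40]]
different = [[200, 10], [30, 220]]

same_event_distance = hamming_distance(average_hash(keyframe), average_hash(brighter_copy))
other_distance = hamming_distance(average_hash(keyframe), average_hash(different))
```

A low distance only suggests that two images share the same overall layout. Confirming that they come from the same event still requires the manual comparison and source checks described here.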

Taking a cue from this, we again performed a keyword search using “India Day Parade 2022”. We found a video uploaded on the official Allu Arjun YouTube channel, and it is the same video that has recently been shared on social media with a different context. The caption of the original video reads, “Icon Star Allu Arjun as Grand Marshal @ 40th India Day Parade in New York | Highlights | #IndiaAt75”

The reverse image search turned up further evidence: we found the image on Shutterstock, where the description of the photo reads, “NYC India Day Parade, New York, NY, United States - 21 Aug 2022 Parade Grand Marshall Actor Allu Arjun is seen on a float during the annual Indian Day Parade on Madison Avenue in New York City on August 21, 2022.”

With this, we concluded that the claim made in the viral video, that Allu Arjun is supporting the 2024 Lok Sabha election campaign, is baseless and false.

Conclusion:

The viral video circulating on social media has been taken out of context. The clip, which depicts Allu Arjun's participation in the India Day parade in 2022, is not related to the ongoing election campaign of any political party.

Hence, the assertion that Allu Arjun is campaigning for the Congress party is false and misleading.

- Claim: A video, which has gone viral, says that actor Allu Arjun is rallying for the Congress party.

- Claimed on: X (formerly known as Twitter) and YouTube

- Fact Check: Fake & Misleading

Executive Summary:

A viral video allegedly featuring cricketer Virat Kohli endorsing a betting app named ‘Aviator’ is being shared widely across social media platforms. The CyberPeace Research Team’s investigation revealed that it was made using deepfake technology. In the viral video, we found anomalies that are typical of synthetic media, and no genuine celebrity endorsements for the app exist. We have also previously debunked similar deepfake videos of cricketer Virat Kohli. The spread of such content underscores the need for social media platforms to implement robust measures to combat online scams and misinformation.

Claims:

The claim made is that a video circulating on social media depicts Indian cricketer Virat Kohli endorsing a betting app called "Aviator." The video features an Indian News channel named India TV, where the journalist reportedly endorses the betting app followed by Virat Kohli's experience with the betting app.

Fact Check:

Upon receiving the news, we watched the video thoroughly and found anomalies that are common in deepfake videos: the journalist's lip sync is off, and on close viewing the lip movements do not match the audio. The same is true when Virat Kohli speaks in the video.

We then divided the video into keyframes and reverse-searched one of the frames from Kohli’s part. We found a similar video, uploaded on his verified Instagram handle, in which Virat Kohli is wearing the same brown jacket; it is an ad promotion in collaboration with American Tourister.

After going through the entire video, it is evident that Virat Kohli is not endorsing any betting app; rather, the video is an ad promotion in collaboration with American Tourister.

We then did some keyword searches to see if India TV had published any news as claimed in the Viral Video, but we didn’t find any credible source.

Therefore, having noticed major anomalies in the video and after further analysis, we found that the video was created using synthetic media. It is fake and misleading.

Conclusion:

The video of Virat Kohli promoting a betting app is fake and does not actually feature the celebrity endorsing the app. This brings up many concerns regarding how Artificial Intelligence is being used for fraudulent activities. Social media platforms need to take action against the spread of fake videos like these.

- Claim: Video surfacing on social media shows Indian cricket star Virat Kohli promoting a betting application known as "Aviator."

- Claimed on: Facebook

- Fact Check: Fake & Misleading

Executive Summary:

A manipulated image showing someone making an offensive gesture towards Prime Minister Narendra Modi is circulating on social media. However, the original photo does not display any such behavior towards the Prime Minister. The CyberPeace Research Team conducted an analysis and found that the genuine image was published in a Hindustan Times article in May 2019, where no rude gesture was visible. A comparison of the viral and authentic images clearly shows the manipulation. Moreover, The Hitavada also published the same image in 2019. Further investigation revealed that ABPLive also had the image.

Claims:

A picture showing an individual making a derogatory gesture towards Prime Minister Narendra Modi is being widely shared across social media platforms.

Fact Check:

Upon receiving the news, we immediately ran a reverse search of the image and found an article by Hindustan Times, where a similar photo was posted but there was no sign of such obscene gestures shown towards PM Modi.

ABP Live and The Hitavada also published the same image on their websites in May 2019.

Comparing the viral photo with the photo found on official news websites, we found that almost everything matches except the derogatory sign seen in the viral image.

From this, we concluded that someone took the original image, published in May 2019, and edited in a disrespectful hand gesture. The edited image has recently gone viral across social media and has no connection with reality.
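A side-by-side comparison like this can be approximated in code by finding where two same-sized images differ. The sketch below is an assumption-laden illustration, not the procedure used in this investigation: images are represented as plain grayscale grids, and the function name and tolerance value are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical input: grayscale images as rows of 0-255 values.
Grid = List[List[int]]


def diff_bounding_box(
    original: Grid, edited: Grid, tolerance: int = 10
) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) of the region where the images differ.

    Pixels whose values differ by no more than `tolerance` are treated as
    equal, which absorbs small compression noise. Returns None when no
    pixel differs beyond the tolerance.
    """
    if len(original) != len(edited) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(original, edited)
    ):
        raise ValueError("images must have identical dimensions")

    changed_rows: List[int] = []
    changed_cols: List[int] = []
    for r, (row_a, row_b) in enumerate(zip(original, edited)):
        for c, (a, b) in enumerate(zip(row_a, row_b)):
            if abs(a - b) > tolerance:
                changed_rows.append(r)
                changed_cols.append(c)

    if not changed_rows:
        return None
    return (min(changed_rows), min(changed_cols), max(changed_rows), max(changed_cols))


# Synthetic example: a 4x4 "original" and a copy with a small edited patch.
source_image = [[0] * 4 for _ in range(4)]
viral_image = [row[:] for row in source_image]
viral_image[1][2] = 255
viral_image[2][3] = 200

edited_region = diff_bounding_box(source_image, viral_image)
```

In practice the two photos would first need to be aligned and scaled to the same size; the bounding box then points an analyst at the area to inspect by eye.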

Conclusion:

In conclusion, a manipulated picture circulating online showing someone making a rude gesture towards Prime Minister Narendra Modi has been debunked by the CyberPeace Research Team. The viral image is just an edited version of the original image published in 2019. This demonstrates the need for all social media users to verify information and facts before sharing, to prevent the spread of fake content. Hence, the viral image is fake and misleading.

- Claim: A picture shows someone making a rude gesture towards Prime Minister Narendra Modi

- Claimed on: X, Instagram

- Fact Check: Fake & Misleading