#FactCheck - Manipulated Image Alleging Disrespect Towards PM Circulates Online

Executive Summary:

A manipulated image showing someone making an offensive gesture towards Prime Minister Narendra Modi is circulating on social media. However, the original photo does not display any such behavior towards the Prime Minister. The CyberPeace Research Team conducted an analysis and found that the genuine image was published in a Hindustan Times article in May 2019, where no rude gesture was visible. A comparison of the viral and authentic images clearly shows the manipulation. The Hitavada and ABP Live also published the same image in 2019.

Claims:

A picture showing an individual making a derogatory gesture towards Prime Minister Narendra Modi is being widely shared across social media platforms.

Fact Check:

Upon receiving the image, we immediately ran a reverse image search and found a Hindustan Times article carrying a similar photo, in which there was no sign of any obscene gesture towards PM Modi.

ABP Live and The Hitavada also published the same image on their websites in May 2019.

Comparing the viral photo with the photo found on the news websites, we found that the two match in almost every respect except for the derogatory gesture that appears in the viral image.
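The comparison described above can be illustrated with a minimal sketch. It is not the tooling the research team used; it assumes both images have already been decoded into same-sized grids of grayscale values, and the function name `changed_region` and the `threshold` value are hypothetical choices for illustration.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # rows of grayscale pixel values, 0-255


def changed_region(
    original: Image, viral: Image, threshold: int = 30
) -> Optional[Tuple[int, int, int, int]]:
    """Return the bounding box (top, left, bottom, right) of pixels that
    differ by more than `threshold`, or None if no pixel differs that much."""
    same_shape = len(original) == len(viral) and all(
        len(a) == len(b) for a, b in zip(original, viral)
    )
    if not same_shape:
        raise ValueError("images must have the same dimensions")

    rows: List[int] = []
    cols: List[int] = []
    for y, (row_a, row_b) in enumerate(zip(original, viral)):
        for x, (a, b) in enumerate(zip(row_a, row_b)):
            if abs(a - b) > threshold:
                rows.append(y)
                cols.append(x)
    if not rows:
        return None
    return (min(rows), min(cols), max(rows), max(cols))


# Toy 4x5 "images": the viral copy has a 2x2 patch altered.
original = [[100] * 5 for _ in range(4)]
viral = [row[:] for row in original]
viral[1][2] = viral[1][3] = viral[2][2] = viral[2][3] = 220

print(changed_region(original, viral))  # (1, 2, 2, 3)
```

A small, localized bounding box in an otherwise identical pair of images is consistent with an edit such as an added hand gesture, though real photos would also need resizing and compression differences accounted for before a comparison like this is meaningful.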

From this, we conclude that someone took the original image, published in May 2019, and edited in a disrespectful hand gesture. The edited image has recently gone viral across social media and has no connection with reality.

Conclusion:

In conclusion, a manipulated picture circulating online that shows someone making a rude gesture towards Prime Minister Narendra Modi has been debunked by the CyberPeace Research Team. The viral image is an edited version of the original image published in 2019. This demonstrates the need for all social media users to verify information and facts before sharing, to prevent the spread of fake content. Hence, the viral image is fake and misleading.

- Claim: A picture shows someone making a rude gesture towards Prime Minister Narendra Modi

- Claimed on: X, Instagram

- Fact Check: Fake & Misleading

Related Blogs

Introduction

Recent advances in space exploration and technology have increased the need for space laws to control the actions of governments and corporate organisations. India has been attempting to create a robust legal framework to oversee its space activities because it is a prominent player in the international space business. In this article, we’ll examine India’s current space regulations and compare them to the situation elsewhere in the world.

Space Laws in India

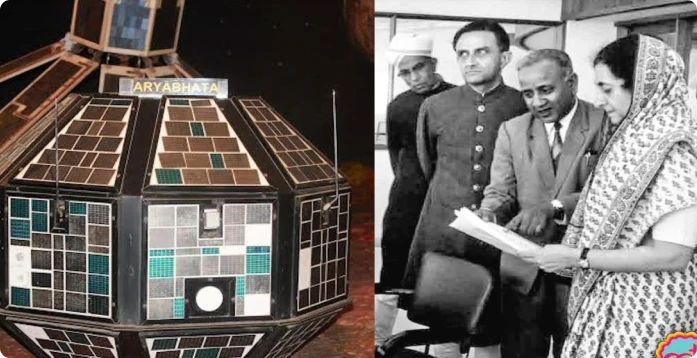

India started space exploration with Aryabhata, its first satellite, and Rakesh Sharma, the first Indian astronaut, and now has a prominent presence in space, with many international satellites launched by India. NASA and ISRO work closely on various projects.

India currently lacks comprehensive space-related legislation. Only a few laws and regulations, such as the Indian Space Research Organisation (ISRO) Act of 1969 and the National Remote Sensing Centre (NRSC) Guidelines of 2011, regulate space-related operations. However, more than these rules and regulations is needed to govern India’s expanding space sector. India is starting to gain traction as a prospective player in the global commercial space sector. Authorisation, contracts, dispute resolution, licensing, data processing and distribution related to earth observation services, certification of space technology, insurance, legal difficulties related to launch services, and stamp duty are just a few of the topics that need to be addressed. The necessary statutes and laws need to be updated to incorporate space law-related matters into domestic law.

India’s Space Presence

Space research activities were initiated in India during the early 1960s when satellite applications were in experimental stages, even in the United States. With the live transmission of the Tokyo Olympic Games across the Pacific by the American Satellite ‘Syncom-3’ demonstrating the power of communication satellites, Dr Vikram Sarabhai, the founding father of the Indian space programme, quickly recognised the benefits of space technologies for India.

As a first step, the Department of Atomic Energy formed the INCOSPAR (Indian National Committee for Space Research) under the leadership of Dr Sarabhai and Dr Ramanathan in 1962. The Indian Space Research Organisation (ISRO) was formed on August 15, 1969. The prime objective of ISRO is to develop space technology and its application to various national needs. It is one of the six largest space agencies in the world. The Department of Space (DOS) and the Space Commission were set up in 1972, and ISRO was brought under DOS on June 1, 1972.

Since its inception, the Indian space programme has been orchestrated well. It has three distinct elements: satellites for communication and remote sensing, the space transportation system and application programmes. Two major operational systems have been established – the Indian National Satellite (INSAT) for telecommunication, television broadcasting, and meteorological services and the Indian Remote Sensing Satellite (IRS) for monitoring and managing natural resources and Disaster Management Support.

Global Scenario

The global space race has been under way ever since the moon landing in 1969, and it has now turned into a new cold war among developed and developing nations. A nation's interests and assets in space need to be safeguarded with the help of effective and efficient policies and internationally ratified laws. Not all nations with a presence in space subscribe to a good-for-all policy, so preventive measures need to be incorporated into the legal system. A thorough legal framework for space activities is being developed by the United Nations Office for Outer Space Affairs (UNOOSA). The “Outer Space Treaty,” together with four other international agreements on space law, establishes the foundation of international space law. The agreements address topics such as the peaceful use of space, preventing space from becoming militarised, and who is responsible for damage caused by space objects. Both the United States and the United Kingdom have well-established space laws. In the US, the National Aeronautics and Space Act, passed in 1958, established the National Aeronautics and Space Administration (NASA) to oversee national space programmes. In the UK, the Outer Space Act of 1986 governs how citizens and businesses can engage in space activity.

Conclusion

India must create a thorough legal system to govern its space endeavours. The space sector needs a legal framework to avoid ambiguity and confusion, which may have detrimental effects. The peaceful use of space for the benefit of humanity should be covered by domestic space legislation in India. The overall scenario demonstrates the need for a clearly defined legal framework for the international acknowledgement of a nation’s space activities. India is fifth in the world for space technology, which is an impressive accomplishment, and a strong legal system will help India maintain its place in the space business.


Introduction

The "drugs in parcel" scam has resurfaced with a new face. Cybercriminals impersonate FedEx, the police and various other authorities, pressuring victims into sending money and divulging private information in order to escape fictitious legal repercussions.

Modus operandi

The scam usually begins with a fraudster calling the victim on their cell phone while posing as a FedEx representative. The caller claims that a package in the victim's name contains illegal goods such as jewellery, narcotics, or other items. By this point the victim feels afraid and apprehensive. A video call then follows with someone else posing as a police officer. This "fake officer" asks the victim to keep the matter confidential while it is being investigated.

After the call, the victim receives falsified paperwork purporting to come from the CBI and RBI, stating that an arrest warrant has been issued. Once the victim has fallen entirely under their sway, the fraudsters claim that the victim's Aadhaar has been used to carry out the unlawful conduct. They then ask the victim to submit bank account information and Aadhaar data for investigation, and subsequently to transfer funds to a bank account for "RBI validation". Believing this will clear their name, the victim sends the money.

Recent incidents:

In the most recent instance of a "drugs in parcel" scam, an IT expert in Pune was defrauded of Rs 27.9 lakh by online con artists posing as members of the Mumbai police's Cyber Crime Cell. The victim filed a First Information Report (FIR) in this matter at the police station. According to the complaint, on November 11, 2023, the complainant received a call from a fraudster posing as a Mumbai police Cyber Crime Cell officer. The scammer falsely claimed to have discovered illegal narcotics in a package addressed to the complainant sent from Mumbai to Taiwan, along with an expired passport and an SBI card. To avoid arrest in a fabricated drug case, the complainant was coerced into providing bank account information under the guise of "verification." Fearing legal consequences, the victim transferred Rs 27,98,776 in ten online transactions to two separate bank accounts as instructed. Upon realizing the deception, the complainant reported the incident to the police, leading to an investigation.

In another such incident, in October 2023, scammers phoned the victim and sent him a bogus identity card online. Claiming to have seized narcotics in a shipment sent from Mumbai to Thailand under his name, the fraudster persuaded the victim to wire money to a bank account in order to "clear the case" and issue a "no-objection certificate (NOC)." The fraudsters threatened to arrest the victim for mailing the narcotics package if the money was not provided.

Furthermore, in August 2023, fraudsters posing as police officers and executives of courier companies defrauded a 25-year-old advertising student of Rs 53 lakh. They informed her that narcotics had been discovered in a package she had sent to Taiwan and extorted money from her under the guise of helping her avoid legal action, including arrest. According to the police, the callers threatened to arrest her and forced her to complete up to 34 transactions totalling Rs 53.63 lakh from her and her mother's bank accounts to different bank accounts.

Measures to protect oneself from such scams

Call Verification:

- Be sure to always confirm the legitimacy of unexpected calls, particularly those purporting to be from law enforcement or delivery services. Make use of official contact information obtained from reliable sources to confirm the information presented.

Confidentiality:

- Use caution while disclosing personal information online or over the phone, particularly Aadhaar and bank account information. In general, legitimate authorities don't ask for private information in this way.

Official Documentation:

- Request official documents via the appropriate means. Make sure that any documents—such as arrest warrants or other government documents—are authentic by getting in touch with the relevant authorities.

No Haste in Transactions:

- Do not respond hastily to requests for money or quick fixes. Creating a sense of urgency is a common tactic used by scammers to coerce victims into acting quickly.

Knowledge and Awareness:

- Remain up to date on common fraud schemes. Keep up with the most recent strategies employed by online fraudsters to avoid falling for fresh scam iterations.

Report Suspicious Activity:

- Notify the local police or other appropriate authorities of any suspicious calls or activities. Reports received in a timely manner can help investigations and shield others from falling for the same fraud.

Two-Factor Authentication (2FA):

- Enable two-factor authentication (2FA) wherever you can to give online accounts and transactions an additional layer of protection. This may lessen the chance of unauthorised access.

Cybersecurity Software:

- To defend against malware, phishing attempts, and other online risks, install and update reputable antivirus and anti-malware software on a regular basis.

Educate Friends and Family:

- Inform friends and family about typical scams and how to avoid falling victim to fraud. A safer online environment can be achieved through increased collective knowledge.

Be Skeptical:

- If something looks strange or too good to be true, it most often is. Trust your instincts, and confirm the information before acting.

By taking these precautions and exercising caution, people may lessen their vulnerability to scams and safeguard their money and personal data from online fraudsters.

Conclusion:

Verifying calls, maintaining confidentiality, checking official papers, transacting cautiously, and keeping up to date are all ways of protecting ourselves from such scams. Using cybersecurity software, turning on two-factor authentication, and reporting suspicious activity are essential in stopping these types of fraud. Raising awareness and working together are essential to making the internet a safer place and resisting the activities of cybercriminals.

References:

- https://indianexpress.com/article/cities/pune/pune-cybercrime-drug-in-parcel-cyber-scam-it-duping-9058298/#:~:text=In%20August%20this%20year%2C%20a,avoiding%20legal%20action%20including%20arrest.

- https://www.the420.in/pune-it-professional-duped-of-rs-27-9-lakh-in-drug-in-parcel-scam/

- https://www.newindianexpress.com/states/tamil-nadu/2023/oct/16/the-return-of-drugs-in-parcel-scam-2624323.html

- https://timesofindia.indiatimes.com/city/hyderabad/2-techies-fall-prey-to-drug-parcel-scam/articleshow/102786234.cms

The rapid innovation of technology and its resultant proliferation in India has integrated businesses that market technology-based products with commerce. Consumer habits have now shifted from traditional to technology-based products, with many consumers opting for smart devices, online transactions and online services. This migration has increased the potential for data breaches, product defects, misleading advertisements and unfair trade practices.

The need to regulate the technology-based commercial industry arises against the backdrop of the various threats that technologies pose, particularly to data. Most devices track consumer behaviour without the authorisation of the consumer. Additionally, products are often defective or complex to use, and the configuration process may prove to be lengthy, with a vague warranty.

It is noted that consumers also face difficulties in the technology service sector, even while attempting to purchase a product. These include vendor lock-ins (whereby a consumer finds it difficult to migrate from one vendor to another), dark patterns (deceptive strategies and design practices that mislead users and violate consumer rights), ethical concerns etc.

Against this backdrop, consumer laws are now playing catch-up to adequately cater to the new consumer rights that come with technology. Consumer laws now have to evolve to become complementary to other laws and legislation that govern and safeguard individual rights. This includes emphasising compliance with data privacy regulations, creating rules for ancillary activities such as advertising standards, and setting guidelines for both the product and its seller or manufacturer.

The Legal Framework in India

Currently, consumer laws in India, while not tech-targeted, are somewhat adequate. The Consumer Protection Act 2019 (“Act”) protects the rights of consumers in India. It places liability on manufacturers, sellers and service providers for any harm caused to a consumer by faulty or defective products. As a result, manufacturers and sellers of ‘Internet & technology-based products’ are brought under the ambit of this Act. The Consumer Protection Act 2019 may also be viewed in light of the Digital Personal Data Protection Act 2023 (“DPDP Act”), which mandates the security of the digital personal data of an individual. Provisions envisioned by the DPDP Act, such as those pertaining to mandatory consent, purpose limitation, data minimization, mandatory security measures by organisations, data localisation, accountability and compliance, can be applied to information generated by and for consumers.

Multiple regulatory authorities and departments have also taken it upon themselves to issue guidelines that embody the principle of caveat venditor. To this effect, the Networks & Technologies (NT) wing of the Department of Telecommunications (DoT) issued, on 2 March 2023, the Advisory Guidelines to M2M/IoT stakeholders for securing consumer IoT (“Guidelines”), aiming for M2M/IoT (i.e. Machine to Machine/Internet of Things) compliance with safety and security standards and guidelines in order to protect users and the networks that connect these devices. The comprehensive Guidelines suggest the removal of universal default passwords and usernames such as “admin” that come preprogrammed with new devices, and mandate that the password reset process be carried out after user authentication. Web services associated with the product are required to use Multi-Factor Authentication, and a duty is cast on them not to expose any unnecessary user information prior to authentication. Further, M2M/IoT stakeholders are required to provide a public point of contact for reporting vulnerabilities and security issues. Such stakeholders must also ensure that software components are updateable in a secure and timely manner. An end-of-life policy is to be published for end-point devices, stating the assured duration for which a device will receive software updates.
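The points summarised above lend themselves to a simple checklist. The following is a minimal sketch only, not an official compliance tool: the `DeviceProfile` fields and the `guideline_gaps` function are hypothetical names introduced here for illustration, and the checks cover only a subset of what the Guidelines describe.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeviceProfile:
    # Hypothetical fields mirroring the points summarised in the text.
    default_username: str
    ships_with_universal_password: bool
    web_service_uses_mfa: bool
    vulnerability_contact: str  # public point of contact; empty if none
    updates_are_secure: bool
    end_of_life_policy_published: bool


def guideline_gaps(device: DeviceProfile) -> List[str]:
    """List the checklist items this device profile does not satisfy."""
    gaps: List[str] = []
    if (
        device.ships_with_universal_password
        or device.default_username.lower() == "admin"
    ):
        gaps.append("universal default credentials")
    if not device.web_service_uses_mfa:
        gaps.append("no multi-factor authentication")
    if not device.vulnerability_contact.strip():
        gaps.append("no public vulnerability contact")
    if not device.updates_are_secure:
        gaps.append("software not securely updateable")
    if not device.end_of_life_policy_published:
        gaps.append("no end-of-life policy")
    return gaps


# Example: a hypothetical smart camera shipped with "admin" credentials.
camera = DeviceProfile(
    default_username="admin",
    ships_with_universal_password=True,
    web_service_uses_mfa=False,
    vulnerability_contact="security@example.com",
    updates_are_secure=True,
    end_of_life_policy_published=False,
)
print(guideline_gaps(camera))
```

Returning a list of gaps rather than a single pass/fail value keeps the result readable for a manufacturer or reviewer, who can see which specific expectations remain unmet.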

The involvement of regulatory authorities depends on the nature of the technology product; a single product or technical consumer threat may be covered by multiple guidelines. The Advertising Standards Council of India (ASCI) notes that cryptocurrency and related products were considered the most violative category for fraud. In an attempt to protect consumer safety, it introduced guidelines in February 2022 to regulate the advertising and promotion of virtual digital asset (VDA) exchanges, trading platforms and associated services as a necessary interim measure. The guidelines mandate that all VDA ads carry the stipulated disclaimer “Crypto products and NFTs are unregulated and can be highly risky. There may be no regulatory recourse for any loss from such transactions.” in a prominent and unmissable manner.

Further, authorities such as the Securities and Exchange Board of India (SEBI) and the Reserve Bank of India (RBI) also issue cautionary notes to consumers and investors against crypto trading and ancillary activities. Even bodies like the Bureau of Indian Standards (BIS) act as a complementary authority, since product quality, including that of electronic products, is emphasised by mandating compliance with prescribed standards.

It is worth noting that ASCI has proactively responded to new-age technology-induced threats to consumers by attempting to tackle “dark patterns” through its existing Code on Misleading Ads (“Code”), since it is applicable across media to include online advertising on websites and social media handles. It was noted by ASCI that 29% of advertisements were disguised ads by influencers, which is a form of dark pattern. Although the existing Code addressed some issues, a need was felt to encompass other dark patterns.

Perhaps in response, the Central Consumer Protection Authority in November 2023 released guidelines addressing “dark patterns” under the Consumer Protection Act 2019 (“Guidelines”). The Guidelines define dark patterns as deceptive strategies and design practices that mislead users and violate consumer rights. These may include creating false urgency, scarcity or popularity of a product, basket sneaking (whereby additional services are added automatically on purchase of a product or service), confirm shaming (for example, statements such as “I will stay unsecured” when opting out of travel insurance while booking transportation tickets), etc. The Guidelines also cater to several data privacy considerations; for example, they bar platforms from using difficult language and complex privacy settings to push consumers into divulging more personal information while making purchases, thereby ensuring that technology product sellers and e-commerce platforms/vendors comply with data privacy laws in India. It is to be noted that the Guidelines apply to all platforms that systematically offer goods and services in India, as well as to advertisers and sellers.

Conclusion

Consumer laws for technology-based products in India play a pivotal role in safeguarding the rights and interests of individuals in an era marked by rapid technological advancements. These legislative frameworks, spanning facets such as data protection, electronic transactions, and product liability, help establish a regulatory equilibrium that addresses the nuanced challenges of the digital age. As the digital landscape evolves, it is imperative for regulatory frameworks to adapt, ensuring that consumers are protected from potential risks associated with emerging technologies. Striking a balance between innovation and consumer safety requires ongoing collaboration between policymakers, businesses, and consumers. By staying attuned to the evolving needs of the digital age, Indian consumer laws can provide a robust foundation for secure and equitable relationships between consumers and technology-based products.

References:

- https://dot.gov.in/circulars/advisory-guidelines-m2miot-stakeholders-securing-consumer-iot

- https://www.mondaq.com/india/advertising-marketing--branding/1169236/asci-releases-guidelines-to-govern-ads-for-cryptocurrency

- https://www.ascionline.in/the-asci-code/#:~:text=Chapter%20I%20(4)%20of%20the,nor%20deceived%20by%20means%20of

- https://www.ascionline.in/wp-content/uploads/2022/11/dark-patterns.pdf