#FactCheck: Viral Photo Shows Sun-Ways Project, Incorrectly Linked to Indian Railways

Executive Summary:

A viral post circulating widely on social media claims that Indian Railways is installing solar panels directly on railway tracks across the country to generate renewable energy. The post also claims that India will become the first country in the world to undertake such a green initiative in its railway system. Our research involved reverse image searches, keyword analysis, searches of government websites, and verification against international media. We found the claim to be false. The viral images and information have been wrongly attributed to India; the images actually come from a pilot project by Sun-Ways, a Swiss start-up.

Claim:

According to a viral post on social media, Indian Railways has launched a nationwide initiative to install solar panels directly on railway tracks to generate renewable energy, reduce power costs, and make global history in environmentally sustainable rail operations.

Fact check:

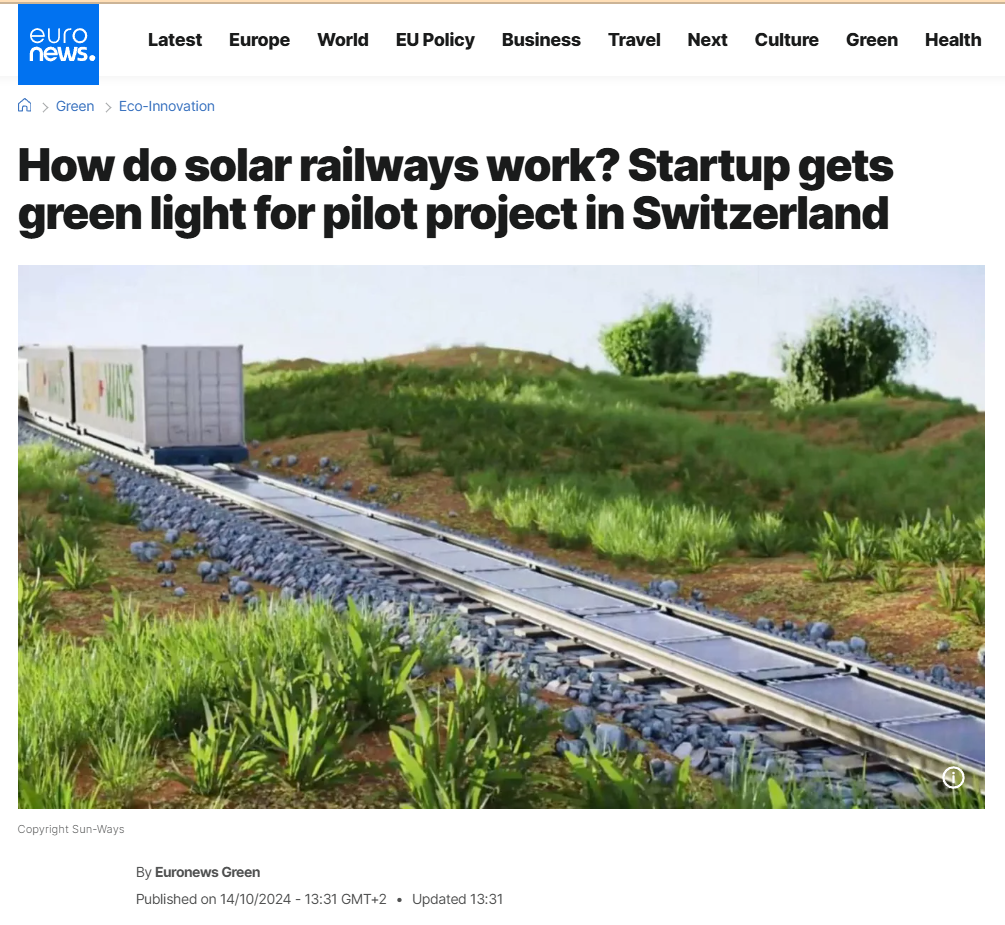

We ran a reverse image search on the viral image, which led us to international media reports and technology blogs about Sun-Ways, a project based in Switzerland. The images circulating on Indian social media are the same ones from the Sun-Ways pilot project, in which a removable system of solar panels is laid between the rails of a track in Switzerland to evaluate whether rail infrastructure can be used to generate energy.

We also searched the official Indian Railways websites, Ministry of Railways press releases, and credible Indian media outlets. We found nothing indicating that Indian Railways is installing, or planning to install, solar panels on railway tracks.

Indian Railways has pursued several green energy initiatives, installing solar panels on station and building rooftops, on railway land alongside tracks, and on train coach roofs. However, it has never installed solar panels on railway tracks in India. One related example we found is India's first solar-powered DEMU (diesel electric multiple unit) train, launched on 14 July 2017 from Safdarjung railway station in Delhi for the route between Sarai Rohilla in Delhi and Farukh Nagar in Haryana. The train has 16 solar panels, each rated at 300 Wp, fitted across six coaches.

Reports from Euronews, the World Economic Forum, the Institution of Mechanical Engineers, and NDTV also support our findings.

Conclusion:

After extensive research, including a review of the facts and the technical details, we conclude that the claim that Indian Railways has installed solar panels on railway tracks is false. The concept and the images originate from Sun-Ways, a Swiss company testing the concept in Switzerland, not India.

Indian Railways continues to adopt renewable energy in several forms but has not installed solar panels on railway tracks. This case highlights the importance of fact-checking viral and other unverified content.

- Claim: India’s solar track project will help Indian Railways run entirely on renewable energy.

- Claimed On: Social Media

- Fact Check: False and Misleading