#FactCheck: Viral Fake Post Claims Central Government Offers Unemployment Allowance Under ‘PM Berojgari Bhatta Yojana’

Executive Summary:

A viral thumbnail and numerous social posts state that the Government of India is giving unemployed youth ₹4,500 a month under a program labeled "PM Berojgari Bhatta Yojana." This claim has been shared on multiple online platforms and has given hope to many job seekers. However, when we independently researched the claim, we found no verified source for the scheme and no government notification.

Claim:

The viral post states: "The Central Government is conducting a scheme called PM Berojgari Bhatta Yojana in which any unemployed youth would be given ₹ 4,500 each month. Eligible candidates can apply online and get benefits." Several videos and posts show suspicious and unverified website links for registration, trying to get the general public to share their personal information.

Fact check:

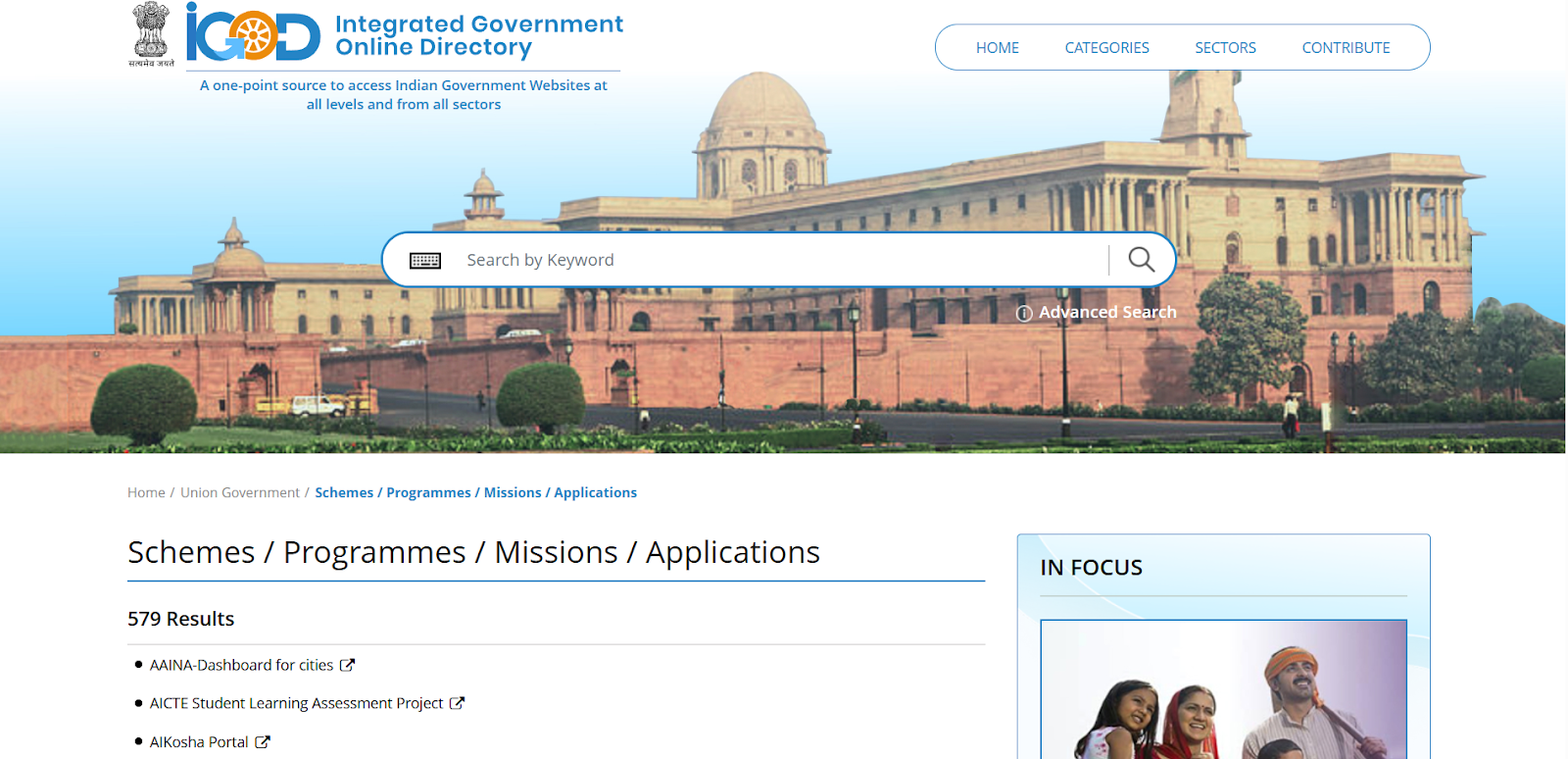

During our verification, we searched the official government portals, including the Ministry of Labour and Employment, PMO India, MyScheme, MyGov, and the Integrated Government Online Directory, which lists all legitimate Schemes, Programmes, Missions, and Applications run by the Government of India. None of them lists any scheme related to the PM Berojgari Bhatta Yojana.

Numerous YouTube channels appear to be monetizing false narratives by exploiting viewers' emotions and directing users to misleading websites. Such scams typically aim either to harvest personal data or to earn pay-per-click advertising revenue from outrageous claims.
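The portal check described above can be partly automated as a first-pass filter on suspicious links. The Python sketch below tests whether a link's hostname falls under a government domain. The suffix list, the extra-host allowlist, and the function name `looks_official` are illustrative assumptions rather than an official registry, and the sample registration link is a made-up placeholder. A match only indicates that a site sits on a government domain; it does not confirm that any scheme exists.

```python
from urllib.parse import urlparse

# Assumed suffixes for Indian government sites; illustrative, not exhaustive.
OFFICIAL_SUFFIXES = (".gov.in", ".nic.in")
# Assumed exact hosts for official portals that fall outside those suffixes.
EXTRA_OFFICIAL_HOSTS = {"mygov.in", "www.mygov.in"}


def looks_official(url: str) -> bool:
    """Return True if the URL's hostname is on the assumed official lists.

    URLs without a scheme (no "https://") have no parsed hostname
    and are treated as not official.
    """
    host = (urlparse(url).hostname or "").lower()
    if host in EXTRA_OFFICIAL_HOSTS:
        return True
    return any(host.endswith(suffix) for suffix in OFFICIAL_SUFFIXES)


print(looks_official("https://pib.gov.in/factcheck"))                   # True
print(looks_official("https://pm-berojgari-bhatta.example.com/apply"))  # False
print(looks_official("https://gov.in.example.com/"))                    # False
```

Matching on the end of the hostname, rather than searching for "gov.in" anywhere in the link, is what rejects look-alike addresses such as the third example.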

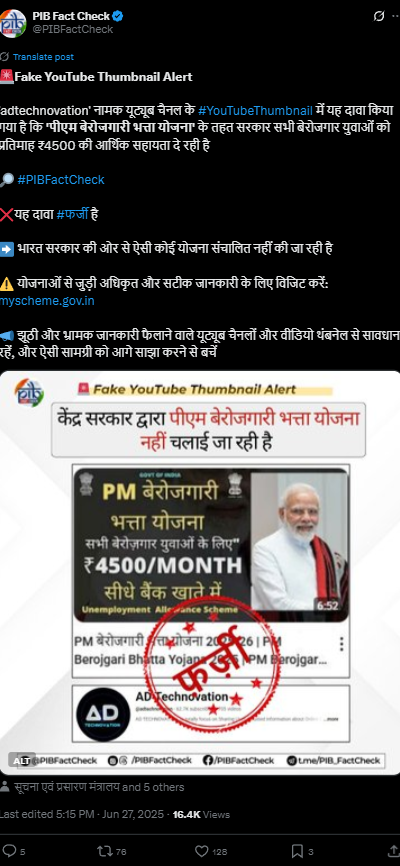

Our research findings were later backed up by PIB Fact Check, which shared a clarification on social media stating: “No such scheme called ‘PM Berojgari Bhatta Yojana’ is in existence. The claim that has gone viral is fake”.

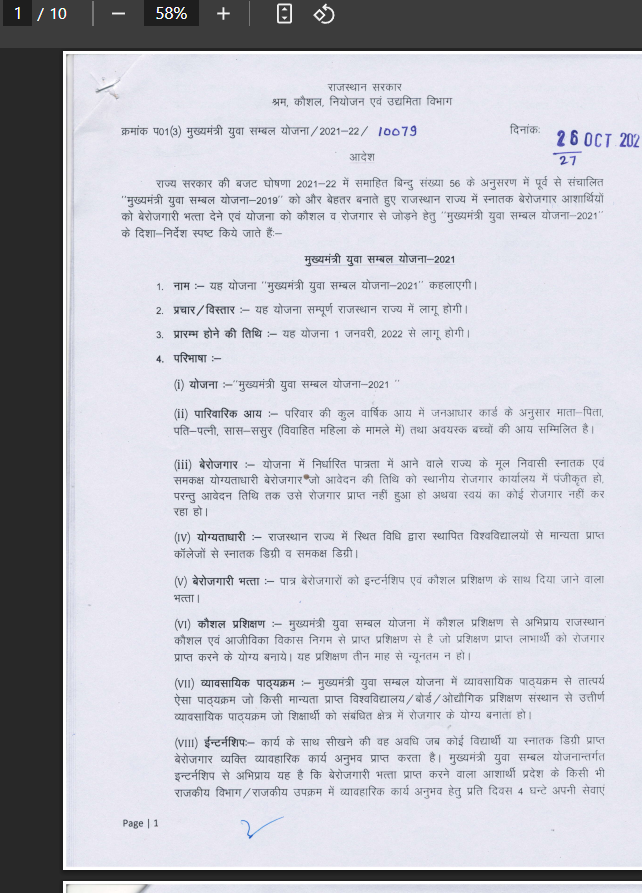

To provide some perspective, in 2021-22, the Rajasthan government launched a state-level program under the Mukhyamantri Udyog Sambal Yojana (MUSY) that provided ₹4,500/month to unemployed women and transgender persons, and ₹4,000/month to unemployed males. This was not a Central Government program, and the current viral claim misrepresents a past state-level initiative as a nationwide policy.

Conclusion:

The claim of a ₹4,500 monthly unemployment benefit under the PM Berojgari Bhatta Yojana is false. Neither the Central Government nor any government department has launched such a scheme. Our finding aligns with PIB Fact Check, which classifies this as a case of misinformation. We encourage everyone to be vigilant and avoid reacting to viral fake news. Verify claims through official sources before sharing or taking action. Let's work together to curb misinformation and protect citizens from false hopes and data fraud.

- Claim: A central policy offers jobless individuals ₹4,500 monthly financial relief

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction

A disturbing trend of courier-related cyber scams has emerged, targeting unsuspecting individuals across India. In these scams, fraudsters pose as officials from reputable organisations, such as courier companies or government departments like the narcotics bureau. Using sophisticated social engineering tactics, they deceive victims into divulging personal information and transferring money under false pretences. Recently, a woman IT professional from Mumbai fell victim to such a scam, losing Rs 1.97 lakh.

Instances of courier-related cyber scams

Recently, two significant cases of courier-related cyber scams have surfaced, illustrating the alarming prevalence of such fraudulent activities.

- Case in Delhi: A doctor in Delhi fell victim to an online scam and lost approximately Rs 4.47 crore. Fraudsters posing as representatives of a courier company informed the doctor about a seized package and demanded a substantial sum for verification purposes. The doctor trusted the callers and lost the money.

- Case in Mumbai: In a strikingly similar incident, an IT professional from Mumbai, Maharashtra, lost Rs 1.97 lakh to cyber fraudsters pretending to be officials from the narcotics department. The fraudsters contacted the victim, claiming her Aadhaar number was linked to the criminals’ bank accounts. They coerced the victim into transferring money for verification through deceptive tactics and false evidence, resulting in a significant financial loss.

These recent cases highlight the growing threat of courier-related cyber scams and the devastating impact they can have on unsuspecting individuals. They emphasise the urgent need for increased awareness, vigilance, and preventive measures to protect oneself from falling victim to such fraudulent schemes.

Nature of the Attack

The cyber scam typically begins with a fraudulent call from someone claiming to be associated with a courier company. The caller informs the victim that their package is stuck or has been seized, then escalates the situation by involving supposed law enforcement agencies, such as the narcotics department. The fraudsters manipulate victims by creating a sense of urgency and fear, and convince them to download communication apps like Skype to establish credibility. Using fabricated evidence and false claims, they trick victims into sharing personal information, including Aadhaar numbers, and coerce them into making financial transactions for verification purposes.

Best Practices to Stay Safe

To protect oneself from courier-related cyber scams and similar frauds, individuals should follow these best practices:

- Verify Calls and Identity: Be cautious when receiving calls from unknown numbers. Verify the caller’s identity by cross-checking with relevant authorities or organisations before sharing personal information.

- Exercise Caution with Personal Information: Avoid sharing sensitive personal information, such as Aadhaar numbers, bank account details, or passwords, over the phone or through messaging apps unless necessary and with trusted sources.

- Beware of Urgency and Threats: Scammers often create a sense of urgency or threaten legal consequences to manipulate victims. Remain vigilant and question any unexpected demands for money or personal information.

- Double-Check Suspicious Claims: If contacted by someone claiming to be from a government department or law enforcement agency, independently verify their credentials by contacting the official helpline or visiting the department’s official website.

- Educate and Spread Awareness: Share information about these scams with friends, family, and colleagues to raise awareness and collectively prevent others from falling victim to such frauds.

Legal Remedies

Individuals who fall victim to a courier-related cyber scam can resort to the following legal actions:

- File a First Information Report (FIR): Victims of a courier-related cyber scam or any similar online fraud have legal options to seek justice and potentially recover their losses. One of the primary actions is to file a First Information Report (FIR) with the local police. The following sections of Indian law may be applicable in such cases:

- Section 419 of the Indian Penal Code (IPC): This section deals with the offence of cheating by impersonation. It states that whoever cheats by impersonating another person shall be punished with imprisonment of either description for a term which may extend to three years, or with a fine, or both.

- Section 420 of the IPC: This section covers the offence of cheating and dishonestly inducing delivery of property. It states that whoever cheats and thereby dishonestly induces the person deceived to deliver any property shall be punished with imprisonment of either description for a term which may extend to seven years and shall also be liable to pay a fine.

- Section 66C of the Information Technology (IT) Act, 2000: This section deals with the offence of identity theft. It states that whoever, fraudulently or dishonestly, makes use of the electronic signature, password, or any other unique identification feature of any other person shall be punished with imprisonment of either description for a term which may extend to three years and shall also be liable to pay a fine.

- Section 66D of the IT Act, 2000: This section deals with the offence of cheating by personation by using a computer resource. It states that whoever, by means of any communication device or computer resource, cheats by personating shall be punished with imprisonment of either description for a term which may extend to three years and shall also be liable to pay a fine.

- National Cyber Crime Reporting Portal: One powerful resource available to victims is the National Cyber Crime Reporting Portal, equipped with a 24×7 helpline number, 1930. This portal serves as a centralised platform for reporting cybercrimes, including financial fraud.

Conclusion:

The rise of courier-related cyber scams demands increased vigilance from individuals to protect themselves against fraud. Heightened awareness, caution, and scepticism when dealing with unknown callers or suspicious requests are crucial. By following best practices, such as verifying identities, avoiding sharing sensitive information, and staying updated on emerging scams, individuals can minimise the risk of falling victim to these fraudulent schemes. Furthermore, spreading awareness about such scams and promoting cybersecurity education will play a vital role in creating a safer digital environment for everyone.

The World Wide Web was created as a portal for communication, to connect people who are far apart. It started with electronic mail, which gave way to instant messaging, letting people converse and interact with each other from afar in real time. The new paradigm, however, is the Internet of Things (IoT): machines communicating with one another. A wearable gadget can now unlock the front door when its owner arrives home and message the air conditioner to switch on. This is IoT.

WHAT EXACTLY IS IoT?

The term ‘Internet of Things’ was coined in 1999 by Kevin Ashton, a computer scientist who, while working at Procter & Gamble (P&G), put Radio Frequency Identification (RFID) chips on products in order to track them through the supply chain. After the launch of the iPhone in 2007, there were already more connected devices than people on the planet.

Fast forward to today and we live in a more connected world than ever. So much so that even our handheld devices and household appliances can now connect and communicate through a vast network that has been built so that data can be transferred and received between devices. There are currently more IoT devices than users in the world and according to the WEF’s report on State of the Connected World, by 2025 there will be more than 40 billion such devices that will record data so it can be analyzed.

IoT finds use in many parts of our lives. It has helped businesses streamline their operations, reduce costs, and improve productivity. IoT also helped during the Covid-19 pandemic, with devices that could help with contact tracing and wearables that could be used for health monitoring. All of these devices are able to gather, store and share data so that it can be analyzed. The information is gathered according to rules set by the people who build these systems.

APPLICATION OF IoT

IoT is used by both consumers and the industry.

Some of the widely used examples of CIoT (Consumer IoT) are wearables like health and fitness trackers, smart rings with near-field communication (NFC), and smartwatches. Smartwatches gather a lot of personal data. Smart clothing, with sensors on it, can monitor the wearer’s vital signs. There is even smart jewelry, which can monitor sleeping patterns and stress levels.

With the advent of virtual and augmented reality, the gaming industry can now make the experience even more immersive and engrossing. Smart glasses and headsets are used, along with armbands fitted with sensors that can detect the movement of arms and replicate the movement in the game.

At home, there are smart TVs, security cameras, smart bulbs, home control devices, and other IoT-enabled ‘smart’ appliances like coffee makers, which can be turned on through an app or set to start at a particular time in the morning so that they double as an alarm. There are also voice-command assistants like Alexa and Siri, which work with software written by manufacturers that can understand simple instructions.

Industrial IoT (IIoT) mainly uses connected machines for the purposes of synchronization, efficiency, and cost-cutting. For example, smart factories gather and analyze data as the work is being done. Sensors are also used in agriculture to check soil moisture levels, and these then automatically run the irrigation system without the need for human intervention.
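The irrigation example can be sketched as a small control rule. The Python snippet below is only an illustration: the function name `next_pump_state`, the 30% and 45% moisture thresholds, and the sample readings are all hypothetical, and a real system would take its values from the sensor vendor and the crop's needs. It uses two thresholds instead of one so that the pump does not flicker on and off when readings hover near a single cut-off.

```python
def next_pump_state(moisture_pct: float, pump_on: bool,
                    low: float = 30.0, high: float = 45.0) -> bool:
    """Decide whether the irrigation pump should run.

    Turns the pump on below `low`, off above `high`, and otherwise
    keeps the current state. Thresholds are hypothetical examples.
    """
    if moisture_pct < low:
        return True
    if moisture_pct > high:
        return False
    return pump_on


# Hypothetical soil moisture readings (percent) arriving over time.
readings = [50.0, 35.0, 28.0, 33.0, 44.0, 46.0, 40.0]

state = False
states = []
for reading in readings:
    state = next_pump_state(reading, state)
    states.append(state)

print(states)  # [False, False, True, True, True, False, False]
```

The pump starts only once the soil drops below 30% and keeps running until the reading climbs past 45%, with no human intervention in between.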

Statistics

- The IoT device market is poised to reach $1.4 trillion by 2027, according to Fortune Business Insights.

- The number of cellular IoT connections is expected to reach 3.5 billion by 2023. (Forbes)

- The amount of data generated by IoT devices is expected to reach 73.1 ZB (zettabytes) by 2025.

- 94% of retailers agree that the benefits of implementing IoT outweigh the risk.

- 55% of companies believe that 3rd party IoT providers should have to comply with IoT security and privacy regulations.

- 53% of all users acknowledge that wearable devices will be vulnerable to data breaches, viruses,

- Companies could invest up to 15 trillion dollars in IoT by 2025 (Gigabit)

CONCERNS AND SOLUTIONS

- Two of the biggest concerns with IoT devices are the privacy of users and the devices being secure in order to prevent attacks by bad actors. This makes knowledge of how these things work absolutely imperative.

- It is worth noting that these devices all work with a central hub, like a smartphone. A device pairs with the smartphone through an app, and the phone acts as a gateway, so a hacker who targets the IoT device could compromise the smartphone as well.

- With technology like smart television sets that have cameras and microphones, the major concern is that hackers could hack and take over the functioning of the television as these are not adequately secured by the manufacturer.

- A hacker could control the camera and cyberstalk the victim, so it is very important to become familiar with the features of a device and ensure that it is well protected from unauthorized usage. Even simple steps help, like keeping the camera covered when it is not being used.

- There is also the concern that since IoT devices gather and share data without human intervention, they could be transmitting data that the user does not want to share. This is true of health trackers. Users who wear heart and blood pressure monitors may have their data sent to an insurance company, which may then decide to raise the premium on their life insurance based on that data.

- IoT devices often keep functioning as normal even if they have been compromised. Most devices do not log an attack or alert the user, and changes like higher power or bandwidth usage go unnoticed after the attack. It is therefore very important to make sure the device is properly protected.

- It is also important to keep the software of the device updated as vulnerabilities are found in the code and fixes are provided by the manufacturer. Some IoT devices, however, lack the capability to be patched and are therefore permanently ‘at risk’.
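Since a compromised device may reveal itself only through higher bandwidth use, one simple safeguard is to compare current traffic against a recorded baseline. The Python sketch below illustrates the idea under loud assumptions: the function name `is_unusual`, the multiplier `k`, and the sample figures are hypothetical, and real readings would have to come from a router or a network monitoring tool. It flags a reading that is more than `k` standard deviations above the baseline average.

```python
from statistics import mean, stdev


def is_unusual(baseline: list[float], reading: float, k: float = 3.0) -> bool:
    """Flag a reading far above the device's normal traffic.

    `baseline` holds earlier readings taken while the device was
    believed to be healthy. The cut-off (mean + k * stdev) is a
    common rule of thumb, not a guarantee of detecting an attack.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    return reading > mean(baseline) + k * stdev(baseline)


# Hypothetical daily upload volume (MB) for one smart camera.
normal_days = [12.0, 15.0, 11.0, 14.0, 13.0]

print(is_unusual(normal_days, 16.0))  # False: within normal variation
print(is_unusual(normal_days, 60.0))  # True: worth investigating
```

A flagged reading is only a prompt to look closer, for example by checking for firmware updates or resetting the device, and a quiet reading does not prove the device is safe.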

CONCLUSION

Humanity inhabits this world that is made up of all these nodes that talk to each other and get things done. Users can harmonize their devices so that everything runs like a tandem bike – completely in sync with all other parts. But while we make use of all the benefits, it is also very important that one understands what they are using, how it is functioning, and how one can tackle issues should they come up. This is also important to understand because once people get used to IoT, it will be that much more difficult to give up the comfort and ease that these systems provide, and therefore it would make more sense to be prepared for any eventuality. A lot of times, good and sensible usage alone can keep devices safe and services intact. But users should be aware of any issues because forewarned is forearmed.

Introduction

A message has recently circulated on WhatsApp alleging that voice and video chats made through the app will be recorded, and that devices will be linked to the Ministry of Electronics and Information Technology’s system from now on. It claims that WhatsApp will now record chat activity and forward the details to the Government. This fake message has been widely shared on social media.

Message claims

- The fake WhatsApp message claims that an 11-point new communication guideline has been established and that voice and video calls will be recorded and saved. It goes on to say that WhatsApp devices will be linked to the Ministry’s system and that Facebook, Twitter, Instagram, and all other social media platforms will be monitored in the future.

- The fake WhatsApp message further advises individuals not to transmit ‘any nasty post or video against the government or the Prime Minister regarding politics or the current situation’. The bogus message goes on to say that it is a “crime” to write or transmit a negative message on any political or religious subject and that doing so could result in “arrest without a warrant.”

- The false message claims that any message in a WhatsApp group with three blue ticks indicates that the message has been noted by the government. It also notifies Group members that if a message has 1 Blue tick and 2 Red ticks, the government is checking their information, and if a member has 3 Red ticks, the government has begun procedures against the user, and they will receive a court summons shortly.

WhatsApp does not record voice and video calls

A message recently shared on social media claims that WhatsApp records users' voice and video calls. According to the Government, this claim is fake: WhatsApp does not record voice and video calls. Such calls can be recorded only with third-party apps, which is what users usually rely on.

Third-party apps used for recording voice and video calls

- App Call recorder

- Call recorder- Cube ACR

- Video Call Screen recorder for WhatsApp FB

- AZ Screen Recorder

- Video Call Recorder for WhatsApp

Case Study

In 2022, a fake message spread on social media suggesting that the government might monitor WhatsApp conversations and act against users. According to this fake message, a new WhatsApp policy had been released under which every message regarded as suspicious would show three blue ticks, indicating that the government had taken note of it. The same fake message is circulating again now.

WhatsApp Privacy policies against recording voice and video chats

The WhatsApp privacy policies say that voice calls, video calls, and even chats cannot be recorded through WhatsApp because of end-to-end encryption settings. End-to-end encryption ensures that the communication between two people will be kept private and safe.

WhatsApp Brand New Features

- Chat lock feature: WhatsApp Chat Lock allows you to store chats in a folder that can only be viewed using your device’s password or biometrics such as a fingerprint. When you lock a chat, the details of the conversation are automatically hidden in notifications. WhatsApp's aim with the chat lock feature is to find new ways to keep your messages private and safe. The feature protects your most private conversations with an extra degree of security.

- Edit chats feature: WhatsApp now lets you edit your messages up to 15 minutes after they have been sent. With this feature, users can correct a message or add any extra points they want to include.

Conclusion

The spread of misinformation and fake news is a significant problem in the age of the internet. It can have serious consequences for individuals, communities, and even nations. According to the government, the message is fake: neither WhatsApp nor the government can access chats, voice calls, or video calls on WhatsApp because of end-to-end encryption, which protects users' communications. Last year, the government blocked 60 social media platforms for spreading anti-India news. There is also a fact check unit that identifies misleading and false online content.