#FactCheck - Viral Video of Argentina Football Team Dancing to Bhojpuri Song is Misleading

Executive Summary:

A viral video of the Argentina football team dancing to a Bhojpuri song in the dressing room is being circulated on social media. After analysing its authenticity, the CyberPeace Research Team found that the video was altered and its music was edited. The original footage was posted by former Argentine footballer Sergio Leonel Aguero on his official Instagram page on 19th December 2022 and shows Lionel Messi and his teammates celebrating their win at the 2022 FIFA World Cup. Contrary to the viral video, the song in the original footage is not a Bhojpuri song. The viral video is cropped from part of Aguero’s upload, and its audio has been replaced with a Bhojpuri song. Therefore, the claim that the Argentinian team danced to a Bhojpuri song is misleading.

Claims:

A video of the Argentina football team dancing to a Bhojpuri song after their victory.

Fact Check:

On receiving these posts, we split the video into frames, performed a reverse image search on one of the frames and found a video uploaded to the Sky Sports website on 19 December 2022.
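The idea of matching a frame from a viral clip against candidate source footage can be illustrated with a simple perceptual hash. This is an illustration only. It is not the method used by the CyberPeace Research Team or by any particular reverse image search engine. It assumes each frame has already been downscaled to an 8x8 grayscale grid (for example, with an imaging library). It then computes an average hash and compares two hashes by Hamming distance. The names `average_hash`, `hamming` and `likely_same_frame`, and the 5-bit threshold, are hypothetical choices.

```python
from typing import List

# Hypothetical input format: an 8x8 grid of grayscale values (0-255),
# assumed to have been produced beforehand by downscaling a video frame.
Grid = List[List[int]]


def average_hash(grid: Grid) -> int:
    """Return a 64-bit average hash: each bit is 1 where a pixel is brighter than the mean."""
    pixels = [p for row in grid for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_frame(g1: Grid, g2: Grid, threshold: int = 5) -> bool:
    """Treat two frames as the same footage if their hashes differ in only a few bits.

    The default threshold is an illustrative assumption, not a standard value.
    """
    return hamming(average_hash(g1), average_hash(g2)) <= threshold


if __name__ == "__main__":
    frame = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
    # A uniformly brightened copy keeps the same light/dark structure.
    brighter = [[min(255, p + 10) for p in row] for row in frame]
    # An inverted image has the opposite structure.
    inverted = [[255 - p for p in row] for row in frame]
    print(likely_same_frame(frame, brighter))  # True
    print(likely_same_frame(frame, inverted))  # False
```

Replacing a clip's audio leaves its pictures untouched, so frame hashes of a re-dubbed clip would still match the original footage. A match like this only shows that the visuals are the same. Checking the soundtrack needs a separate comparison.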

We found that this is the same footage as in the viral video, but the celebration differs. On further analysis, we also found a live video uploaded by Argentinian footballer Sergio Leonel Aguero on his Instagram account on 19th December 2022. The viral video is a clip from his live video, and the music playing in it is not a Bhojpuri song.

This shows that the claim circulating on social media about the Argentina football team dancing to a Bhojpuri song is false and misleading. People should always verify the authenticity of content before sharing it.

Conclusion:

In conclusion, the video that appears to show Argentina’s football team dancing to a Bhojpuri song is fake. It is a manipulated version of an original clip of the team celebrating their 2022 FIFA World Cup victory, with the original audio replaced by a Bhojpuri song. The claim circulating on social media is therefore false and misleading.

- Claim: A viral video of the Argentina football team dancing to a Bhojpuri song after their victory.

- Claimed on: Instagram, YouTube

- Fact Check: Fake & Misleading


Introduction

As the 2024 Diwali festive season approaches, netizens eagerly embrace the spirit of celebration with online shopping, gifting, and searching for the best festive deals on online platforms. Historical web data from India shows that netizens' online activity spikes at this time as people shop online to upgrade their homes, buy unique presents for loved ones and look for services and products to make their celebrations more joyful.

However, with the increase in online transactions and digital interactions, cybercriminals take advantage of the festive rush by enticing users with fake schemes, fake coupons offering freebies, fake offers of discounted jewellery, counterfeit product sales, festival lotteries, fake lucky draws and charity appeals, malicious websites and more. Cybercrimes, especially phishing attempts, also spike in proportion to user activity and shopping trends at this time.

Hence, it is important for all netizens to stay alert, make sure their personal information and financial data are protected, and exercise due care and caution before clicking on any suspicious links or offers. Brands and platforms must also make strong cybersecurity a top priority to safeguard their customers and build trust.

Diwali Season and Phishing Attempts

Last year's report from CloudSEK's research team noted an uptick in cyber threats during the Diwali period, when cybercriminals leveraged the festive mood to launch phishing, betting and crypto scams. The report revealed that phishing attempts target the e-commerce industry and seek to damage the image of reputable brands. CloudSEK's investigators found an astounding 828 distinct domains devoted to phishing activities in the Facebook Ads Library. The report also highlighted the use of typosquatting. This technique exploits common typing errors or misspellings of popular domain names to create phony-but-plausible domains that trick users into believing they are visiting legitimate websites. As fraudsters increasingly misuse AI and deepfake technologies, we expect even more of these threats to surface during this year's festive season.
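The typosquatting pattern described above can be illustrated with edit distance. A domain that is only one or two keystrokes away from a well-known brand domain, without being identical to it, is a red flag. The sketch below is a hypothetical illustration and not CloudSEK's methodology. The brand watch-list, the function names and the cut-off of 2 edits are all assumptions.

```python
from typing import Iterable, Optional


def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions or substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if the characters match)
            ))
        prev = cur
    return prev[-1]


def flag_typosquat(domain: str, known: Iterable[str], max_distance: int = 2) -> Optional[str]:
    """Return the brand domain that `domain` closely imitates, or None.

    An exact match is treated as legitimate; a near miss within
    `max_distance` edits is flagged. The threshold is an assumption.
    """
    domain = domain.lower().rstrip(".")
    brands = [k.lower() for k in known]
    if domain in brands:
        return None
    for legit in brands:
        if levenshtein(domain, legit) <= max_distance:
            return legit
    return None


if __name__ == "__main__":
    WATCH_LIST = ["amazon.in", "flipkart.com"]  # hypothetical watch-list
    print(flag_typosquat("flipkrat.com", WATCH_LIST))  # flipkart.com
    print(flag_typosquat("amaz0n.in", WATCH_LIST))     # amazon.in
    print(flag_typosquat("flipkart.com", WATCH_LIST))  # None
```

A fixed edit-distance cut-off is only a starting point. It can produce false positives for very short domains. It also misses other tricks, such as look-alike Unicode characters, swapped top-level domains, or a brand name embedded in a longer domain.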

CyberPeace Advisory

It is important that netizens exercise caution, especially during the festive period, and follow cyber safety practices to avoid cybercrimes and phishing attempts. Some of the cyber hygiene best practices suggested by CyberPeace are as follows:

- Netizens must verify the sender’s email address and domain against the official website of the brand or entity the sender claims to be affiliated with.

- Netizens must avoid clicking links received through email or messages, or shared on social media, and should visit the official website directly instead.

- Beware of urgent, time-sensitive offers pressuring immediate action.

- Spot phishing signs such as spelling errors and suspicious URLs to avoid falling for the typosquatting tactics used by cybercriminals.

- Netizens must enable two-factor authentication (2FA) for an additional layer of security.

- Install genuine antivirus and malware-detection software on your devices.

- Be wary of unsolicited festive deals, gifts and offers.

- Stay informed on common tactics used by cybercriminals to launch phishing attacks and recognise the red flags of any phishing attempts.

- To report cybercrimes, file a complaint at cybercrime.gov.in or call the helpline number 1930. You can also seek assistance from the CyberPeace helpline at +91 9570000066.
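The first advisory point, checking that a sender's domain matches the brand's official domain, can be sketched as an exact-or-subdomain comparison. `sender_matches_brand` is a hypothetical helper for illustration. It only inspects the address text, and a From header can be forged, so real mail verification relies on SPF, DKIM and DMARC checks performed by the mail provider. The official domain passed in is assumed to have been looked up on the brand's own website.

```python
from email.utils import parseaddr


def sender_matches_brand(from_header: str, official_domain: str) -> bool:
    """True if the sender's domain is the official domain or one of its subdomains."""
    _, address = parseaddr(from_header)       # drop any display name such as "Amazon"
    _, at, domain = address.rpartition("@")
    if not at:
        return False                          # no usable address found
    domain = domain.lower().rstrip(".")
    official = official_domain.lower().rstrip(".")
    # Require an exact match or a dot boundary, so that look-alikes such as
    # "fakeamazon.in" and "amazon.in.diwali-offers.com" are both rejected.
    return domain == official or domain.endswith("." + official)


if __name__ == "__main__":
    print(sender_matches_brand("Amazon <deals@amazon.in>", "amazon.in"))                     # True
    print(sender_matches_brand("Amazon <support@amazon.in.diwali-offers.com>", "amazon.in"))  # False
```

The display name ("Amazon") is ignored on purpose. Scammers can set it to anything, so only the domain after the "@" is compared.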

References

- https://www.outlookmoney.com/plan/financial-plan/this-diwali-beware-of-these-financial-scams

- https://www.businesstoday.in/technology/news/story/diwali-and-pooja-domains-being-exploited-by-online-scams-see-tips-to-help-you-stay-safe-405323-2023-11-10

- https://www.abplive.com/states/bihar/bihar-crime-news-15-cyber-fraud-arrested-in-nawada-before-diwali-2024-ann-2805088

- https://economictimes.indiatimes.com/tech/technology/phishing-you-a-happy-diwali-ai-advancements-pave-way-for-cybercriminals/articleshow/113966675.cms?from=mdr

Introduction

In recent years, India has witnessed a significant rise in the popularity and recognition of esports, or competitive video gaming. Esports has emerged as a mainstream phenomenon, influencing players and young people worldwide. With internet penetration in India at 52%, young people have been increasingly drawn to esports. In this blog post, we will look at how the government is boosting players, establishing professional leagues and supporting gaming companies and sponsors, as the ecosystem continues to rise in prominence and establish itself as a mainstream sporting phenomenon in India.

Factors Shaping Esports in India: Several factors are shaping esports in India and growing its popularity. Let’s have a look.

Technological Advances: The availability and affordability of high-speed internet connections and smart gaming equipment have played an important part in making esports more accessible to a broader audience in India. With the development of smartphones and low-cost gaming PCs, many people can now easily participate in and watch esports tournaments.

Youth Demographic: India has a large population of young people who are enthusiastic gamers and tech-savvy. The youth demographic’s enthusiasm for gaming has spurred the expansion of esports in the country, as they actively participate in competitive gaming and watch major esports competitions.

Growth of the Gaming Community: Gaming has become deeply established in Indian society, with many people using it for enjoyment and social contact. As the competitive component of gaming, esports has naturally gained popularity among gamers looking for a more competitive and immersive experience.

Esports Infrastructure and Events: The creation of specialised esports infrastructure, such as esports arenas, gaming cafés and tournament venues, has considerably aided esports growth in India. Major national and international esports competitions and leagues have also been staged in India, offering exposure and opportunities to aspiring esports players. Streaming platforms such as YouTube, Twitch and Facebook Gaming have also played a vital role in showcasing and popularising esports in India.

Corporate and Government Support: Corporate and government sectors in India have recognised the potential of esports and are actively supporting its growth. Major corporate investments, sponsorships and collaborations with esports organisations have supplied the financial backing and resources required for the country’s esports development. The government has also taken steps to promote esports, such as forming esports governing organisations and including esports in official sporting events.

Growing Popularity and Recognition: Esports in India has witnessed a significant surge in viewership and fan base, thanks largely to online streaming platforms such as Twitch and YouTube, which give fans a convenient way to watch live esports events at home in high definition. Social media platforms let fans interact with their favourite players and stay updated on the latest esports news and events.

Esports Leagues in India

The number of esports tournaments and leagues organised in India has increased, with the IGL being one of the largest and most popular. The ESL India Premiership is a major esports event organised by the Electronic Sports League (ESL) in collaboration with NODWIN Gaming. Viacom18, a well-known Indian media company, established UCypher, an esports league that focuses on a range of games such as CS:GO, Dota 2 and Tekken in order to promote esports as a professional sport in India. All of these platforms give professional players a venue to compete and build their profile in the esports industry.

India’s Performance in Esports to Date

Indian esports players have achieved remarkable global success, including outstanding results in prominent events and leagues. The success stories of individual Indian players illustrate their talent and determination, as well as India’s ability to flourish in the esports sphere. These accomplishments raise global awareness of Indian esports and contribute to its growth. Players who have brought pride to India include Tirth Mehta, known as “Ritr”, a Hearthstone player; Abhijeet “Ghatak”; Ankit “V3nom”; and Saloni “Meow16K”. Apart from this, the Indian women’s team has also performed exceptionally well in CS:GO and has reached the finals.

Government and Corporate Sector Support: The Indian esports business has received backing from the government and corporate sectors, contributing to its growth and acceptance as a genuine sport.

Government Initiatives: The Indian government has expressed increased support for esports through various initiatives. These involve recognising esports as an official sport, establishing esports regulatory bodies and incorporating esports into national sports federations. The government has also announced steps to provide financial assistance, subsidies and infrastructure development for esports, creating a favourable environment for the industry’s growth. Recently, Kalyan Chaubey, joint secretary and acting CEO of the Indian Olympic Association (IOA), personally handed the athletes kits of cutting-edge training gear. The kit includes the following:

- An advanced gaming mouse

- A keyboard built for quick responses

- A smooth mousepad

- A headset for crystal-clear communication

- An esports bag to carry the equipment

Corporate Sponsorship and Partnerships

Indian corporations have recognised esports’ promise and have actively sponsored and collaborated with esports organisations, tournaments and individual players. Companies from various industries, including technology, telecommunications and entertainment, have invested in esports to capitalise on its success and connect with the esports community. These sponsorships and collaborations give financial support, resources and visibility to esports in India. The leagues and championships provide opportunities for young players to showcase their talent.

Challenges and Future

While esports provides great job opportunities, several obstacles must be overcome in order for the industry to expand and gain recognition:

Infrastructure & Training Facilities: Ensuring the availability of high-quality training facilities and infrastructure is critical for developing talent and allowing players to realise their full potential. Continued investment in esports venues, training facilities and academies is essential for the industry’s long-term success.

Skill Development & Education: Fostering a culture of skill development and providing avenues for formal education in esports would improve the professionalism and competitiveness of Indian esports players. Collaborations between educational institutions and esports organisations can lead to specialised programmes in areas such as game analysis, team management and sports psychology.

Legal Framework & Governance: Establishing a thorough legal framework and governance structure for esports will help it gain legitimacy as a professional sport. This includes clear standards on player contracts, player rights, anti-doping procedures and fair competition policies.

Conclusion

Esports in India provides massive professional opportunities and growth possibilities for aspiring esports athletes. The sector’s prospects depend on overcoming barriers in infrastructure, perception, talent development and regulation. With continued support, investment and stakeholder collaboration, esports may establish itself as a viable and accepted career option in India.

Executive Summary

As India concluded its 77th Republic Day celebrations on January 26, 2026, with grandeur and patriotic enthusiasm along the iconic Kartavya Path, a video began circulating on social media claiming to show Indian security personnel failing to perform motorcycle stunts during the ceremonial parade. The short clip allegedly depicts soldiers attempting high-risk, synchronised motorcycle manoeuvres, only to lose balance and fall off their bikes. The visuals were widely shared online with mocking captions suggesting incompetence during a nationally televised event. However, research by CyberPeace found that the video is not authentic and was digitally generated using artificial intelligence.

Claim

A Pakistan-based X user, Sadaf Baloch (@sadafzbaloch), shared the video on January 27, claiming it showed Indian security personnel failing to execute motorcycle stunts during the Republic Day parade held on January 26, 2026. While sharing the clip, the user wrote: “Every time the Indian Army tries a tactical stunt, it looks less like combat training and more like a low-budget circus trailer filmed in one take.” The post was widely circulated with similar narratives questioning the professionalism of Indian forces.

Here is the link and archive link to the post, along with a screenshot.

Fact Check:

To verify the authenticity of the viral video, the Desk conducted a detailed frame-by-frame analysis. During the examination, a watermark linked to Sora, OpenAI's text-to-video generation model, was detected at the 00:05 timestamp. The presence of this watermark strongly indicated that the video was artificially generated and not recorded during a real-world event.

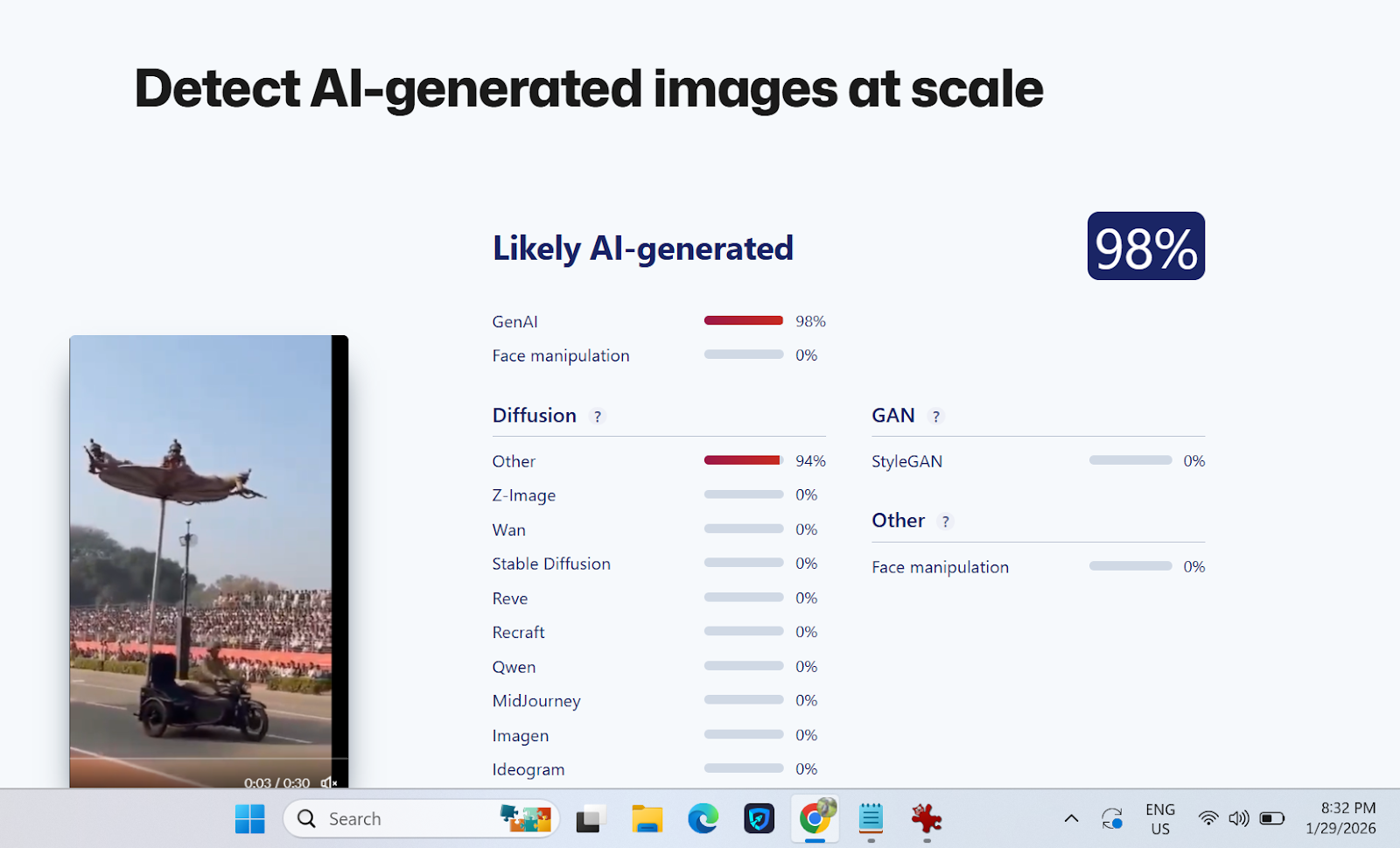

Further visual scrutiny revealed several inconsistencies commonly associated with AI-generated content. The background appeared unnatural and lacked realistic depth, while the movements and reactions of the security personnel looked mechanically exaggerated and inconsistent with real physics. Facial expressions and body motions during the alleged falls also appeared unrealistic. To strengthen the verification, the Desk analysed the clip using Sightengine, an AI-detection tool. The results showed a 98 per cent probability that the video contained AI-generated or deepfake elements.

Below is a screenshot of the result.

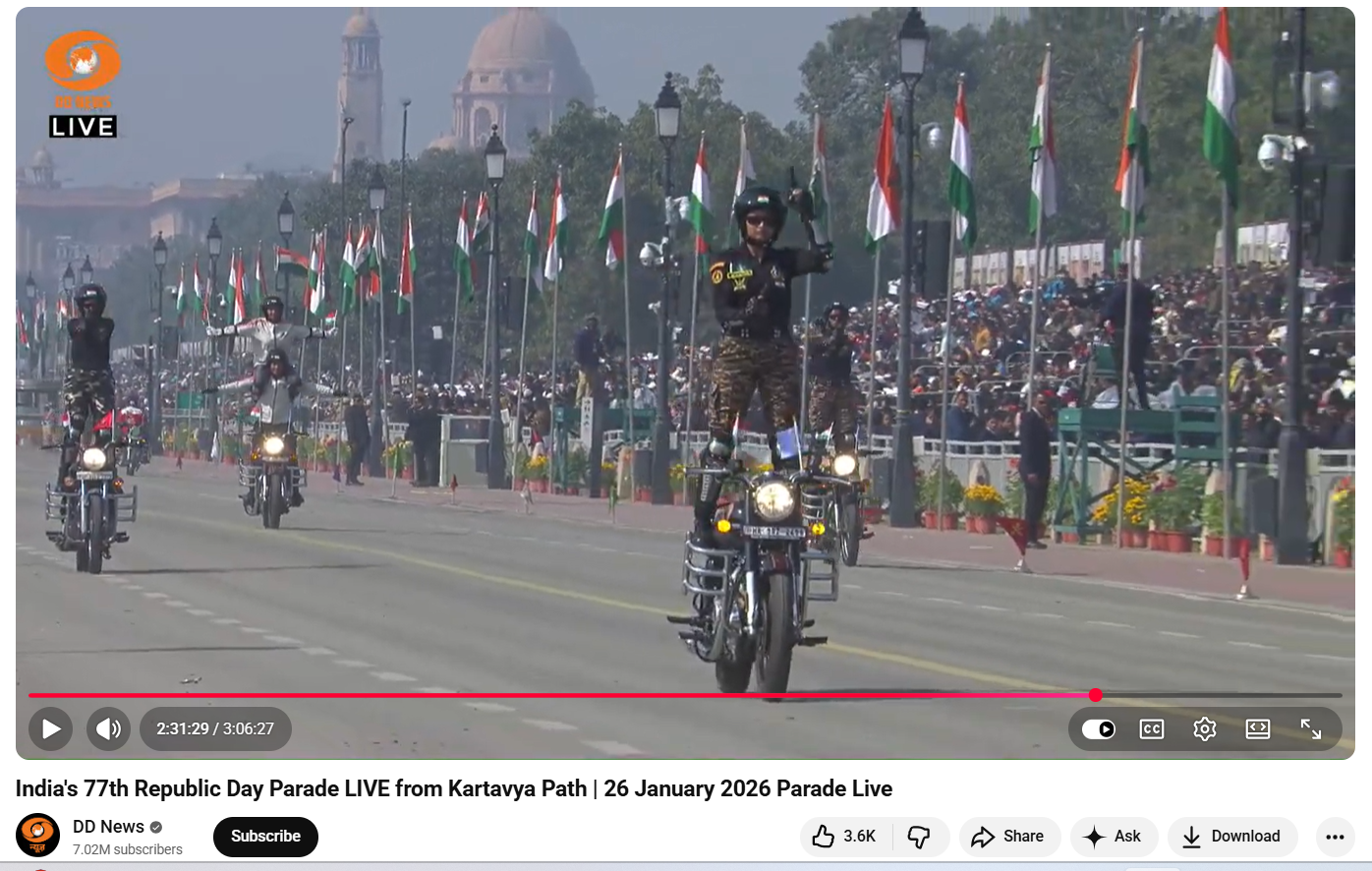

As part of the research, the Desk also conducted a customised keyword search and reviewed official coverage of the Republic Day parade. A full-length video broadcast by DD News on its official YouTube channel was examined. The footage showed joint CRPF and SSB motorcycle teams performing traditional daredevil stunts without any mishap. No incident resembling the viral claim was found in the official broadcast or in any credible media reports.

Here is the video link and a screenshot.

Conclusion

The CyberPeace research confirms that the viral video purportedly showing Indian security personnel failing to perform motorcycle stunts during the 77th Republic Day parade is AI-generated. The clip has been falsely circulated online as genuine content with the intent to mislead viewers and spread misinformation.