From Social Media Ads to Fraud: The Rise of Fake Banking Apps - A Cybercrime Investigation Case Study

Executive Summary:

CyberPeace recently handled a case involving a fraudulent Android application impersonating Punjab National Bank (PNB). The victim was tricked into installing an APK file named "PNB.apk" that was sent via WhatsApp. Once installed, the app led to multiple unauthorized transactions across multiple credit cards.

Case Study: The Attack: Social Engineering Meets Malware

The incident began when the victim clicked on a Facebook ad for a PNB credit card. After submitting basic personal information, the victim received a WhatsApp call from a profile displaying the PNB logo. The attacker, posing as a bank representative, made false claims about the benefits and features of the credit card and convinced the victim to install an application named PNB.apk. The so-called bank representative sent the app through WhatsApp, claiming it would expedite the credit card application. The application was installed on the mobile device as a customer care application. It requests permissions to send and view SMS messages, and it opens only if the user grants them.

The app then collects the user's details, starting with full name, mobile number, and complaint. Whichever option the user selects (Refund, Pay, or Other), the subsequent pages ask for further information such as the credit card number, expiry date, and CVV.

The scammer now has the complete credit card details, along with the ability to read the victim's SMS messages and intercept OTPs.

The victim, thinking they were securely navigating the official PNB website, was unaware that the malware was granting the attacker remote access to their phone. This led to 11 unauthorized transactions totalling ₹4 lakh across three credit cards.

The Investigation & Analysis:

Upon receiving the case through the CyberPeace helpline, the CyberPeace Research Team acted swiftly to neutralize the threat and secure the victim’s device. Using a secure remote access tool, we took control of the phone with the victim’s consent. Our first step was to identify and remove the malicious "PNB.apk" file, ensuring no residual malware was left behind.

Next, we implemented crucial cyber hygiene practices:

- Revoking unnecessary permissions – to prevent further unauthorized access.

- Running antivirus scans – to detect any remaining threats.

- Clearing sensitive data caches – to remove stored credentials and tokens.

The CyberPeace Helpline team helped the victim report the fraud to the National Cybercrime Portal and helpline (1930) and promptly blocked the compromised credit cards.

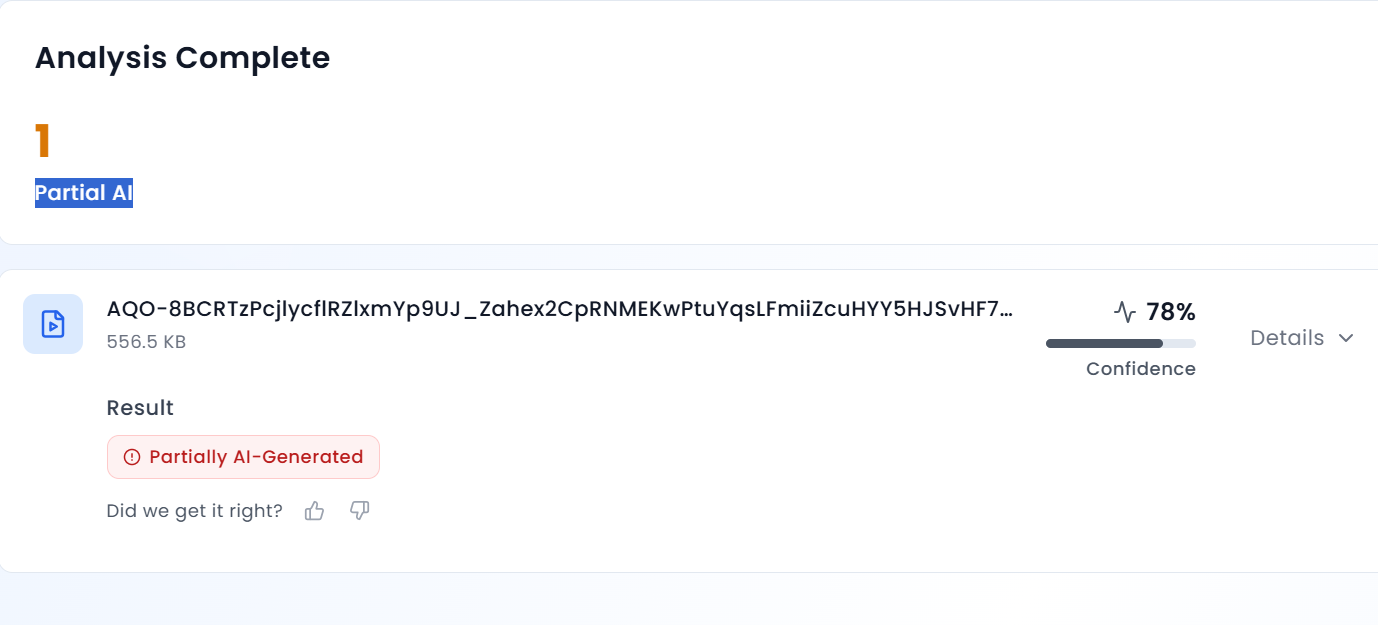

The technical analysis of the app was then carried forward using the MD5 hash of the file. VirusTotal flagged the app as malware, and it requests permissions including Send/Receive/Read SMS and System Alert Window.

Similarly, we found another application, named “Axis Bank” and circulated through WhatsApp, that requests similar permissions. The details found on VirusTotal are as follows:

Recommendations:

This case study highlights the increasingly sophisticated methods used by cybercriminals, who blend social engineering with advanced malware. Key lessons include:

- Be vigilant when downloading applications, even if they appear to come from legitimate sources. Install applications only after verifying them through an official application store, never from files shared over social media.

- Always review app permissions before granting access.

- Verify the identity of anyone claiming to represent financial institutions.

- Use remote access tools responsibly for effective intervention during a cyber incident.
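The advice to review app permissions can be illustrated with a short Python sketch. It assumes you already have the list of permissions an app requests (for example, from the install prompt or a scan report). The set `RISKY_PERMISSIONS` and the function `flag_risky_permissions` are hypothetical names, and the set covers only the permissions named in this case study, so it is not exhaustive.

```python
# Android permission identifiers for the permissions named in this case
# study: SMS access and System Alert Window (overlay windows).
# This set is an illustrative assumption, not a complete list.
RISKY_PERMISSIONS = {
    "android.permission.SEND_SMS",
    "android.permission.RECEIVE_SMS",
    "android.permission.READ_SMS",
    "android.permission.SYSTEM_ALERT_WINDOW",
}


def flag_risky_permissions(requested):
    """Return the requested permissions found in RISKY_PERMISSIONS, sorted."""
    return sorted(set(requested) & RISKY_PERMISSIONS)


# Hypothetical list of permissions requested by an app under review.
requested = [
    "android.permission.INTERNET",
    "android.permission.READ_SMS",
    "android.permission.SYSTEM_ALERT_WINDOW",
]
for permission in flag_risky_permissions(requested):
    print("Review before granting:", permission)
```

A customer care or credit card application app has little reason to send or read SMS messages, so any match here is a prompt to stop and verify the app's source before going further.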

By acting quickly and following the proper protocols, we successfully secured the victim’s device and prevented further financial loss.