#FactCheck: Viral Video Claiming IAF Air Chief Marshal Acknowledged Loss of Jets Found Manipulated

Executive Summary:

A video circulating on social media falsely claims to show Indian Air Force chief, Air Chief Marshal AP Singh, admitting that India lost six jets and a Heron drone during Operation Sindoor in May 2025. Our research found that the footage was digitally manipulated: an AI-generated voice clone of Air Chief Marshal Singh was inserted into a recent speech of his, which was streamed live on August 9, 2025.

Claim:

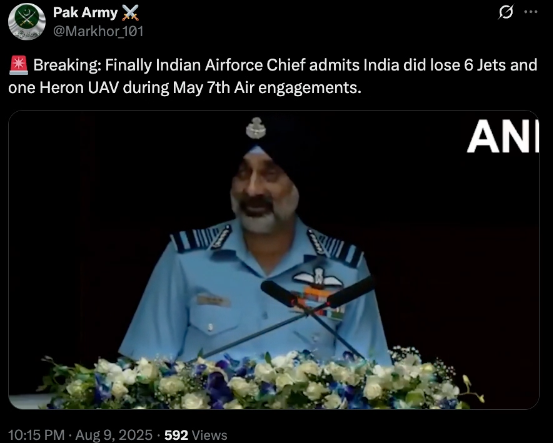

A viral video (archived video) (another link) was shared by an X user with the caption “Breaking: Finally Indian Airforce Chief admits India did lose 6 Jets and one Heron UAV during May 7th Air engagements.” The post claims the video shows the Air Chief Marshal admitting to these losses during Operation Sindoor.

Fact Check:

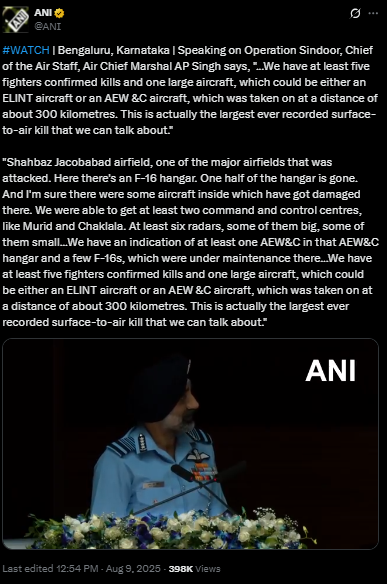

A reverse image search on key frames from the video led us to a clip posted by ANI's official X handle. After watching the full clip, we found no mention of the alleged admission.

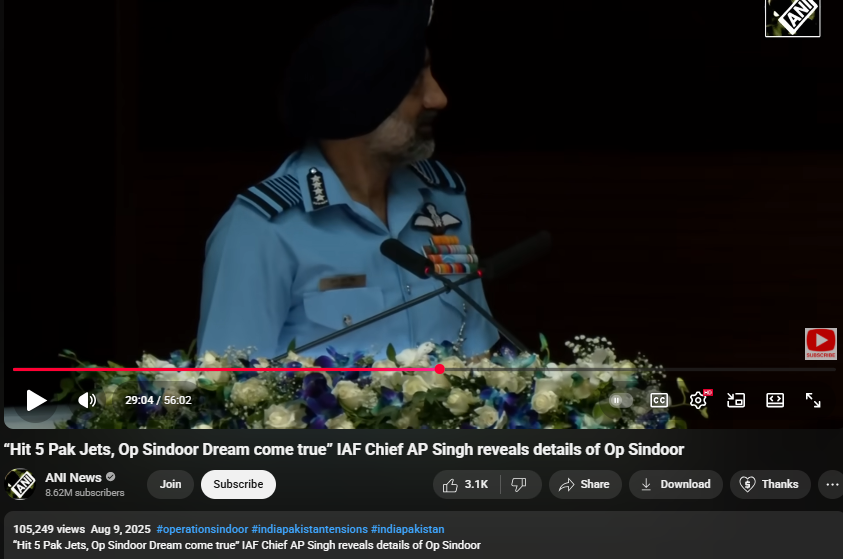

Further research led us to an extended version of the video on ANI's official YouTube channel, published on August 9, 2025. In his address at the 16th Air Chief Marshal L.M. Katre Memorial Lecture in Marathahalli, Bengaluru, Air Chief Marshal AP Singh made no mention of losing six jets or a drone in the conflict with Pakistan. The discrepancies observed in the viral clip suggest that portions of its audio may have been digitally manipulated.

The audio in the viral video, particularly the segment around the 29:05 mark in which the speaker appears to acknowledge the loss of six Indian jets, seems manipulated and shows noticeable inconsistencies in tone and clarity.

Conclusion:

The viral video claiming that Air Chief Marshal AP Singh admitted to the loss of six jets and a Heron UAV during Operation Sindoor is misleading. A reverse image search traced the footage to a clip from ANI in which no such remarks were made. Further, an extended version on ANI’s official YouTube channel confirmed that no reference to the alleged losses was made during the 16th Air Chief Marshal L.M. Katre Memorial Lecture. Additionally, the viral video’s audio, particularly around the 29:05 mark, shows signs of manipulation, with noticeable inconsistencies in tone and clarity.

- Claim: IAF Chief Air Chief Marshal AP Singh acknowledged the loss of six jets and a Heron UAV during Operation Sindoor

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction:

Technology has become a vital part of everyone’s life. It shapes our essential activities, whether we are working, playing, or studying. From education to the corporate world, technology makes goals easier and simpler to achieve. Corporate companies use technology for their day-to-day work, many law-based foundations publish blogs and papers to raise legal awareness, and many lawyers use the internet to promote themselves, which helps their practices grow. Some legal work that was previously unthinkable for a machine can now be done by one. Large disputes frequently involve many documents to review, a task typically assigned to armies of young lawyers and paralegals; a properly trained machine can do this work. Machine drafting of documents is also gaining popularity, and we have seen systems that can forecast the outcome of a dispute. Machines are starting to take on many tasks that we once thought were solely the domain of lawyers.

How can technology help law firms and the corporate world grow?

To understand how technology will affect lawyers’ lives, it helps to start with law students. Students use technology to its fullest in their work, and it can be of real help to them. Law students use SCC Online and Manupatra to find case law, both during internships, where they help their seniors find appropriate cases, and for their college research work. SCC Online and Manupatra are major platforms, and students who learn to use such technology early are likely to benefit throughout their legal careers.

Running a law firm is no small task for a lawyer, and there are plenty of obstacles, such as a lack of tech solutions, failure to meet client demands, and an inability to innovate. These obstacles prevent some firms from growing. The right legal tech can help an organization or a law firm grow with fewer obstacles.

Technology can prove to be a good mechanism for growing a law firm, since everything from court work to corporate practice now depends on it. During the COVID-19 pandemic in 2020, everything shifted to the virtual world and court hearings moved online. This proved a boon to the legal system: hearings were speedy and required no physical contact.

Legal automation is also helping law firms grow in a competitive world. It has other benefits too, such as shifting tedious tasks from humans to machines so that lawyers can focus on more valuable work. Small firms, too, should embrace automation to stay competitive in the corporate sector. Today, artificial intelligence offers a way to solve, or at least ease, the access-to-justice problem and to completely transform our traditional legal system.

Richard Susskind OBE, a widely cited author on the future of law and lawyers, wrote the book Online Courts and the Future of Justice. Susskind argues that technology will bring about a fascinating decade of change in the legal sector and transform our court system. Although automating old ways of working plays a part in this, even more critical is that artificial intelligence and technology will help give more people access to justice.

The rise of big data has also produced rapid identification systems, which let police officers quickly see an individual’s criminal history through a simple search. The FBI’s Next Generation Identification (NGI) system matches individuals with their criminal history information using biometrics such as fingerprints, palm prints, iris recognition, and facial recognition. NGI’s technologies are constantly being updated and new ones added, with the aim of making NGI the most comprehensive source of up-to-date information on the person being examined.

During COVID-19, courts offered e-court services, and lawyers and judges took up cases online. After the pandemic, the use of technology increased across the legal field, from litigation to corporate work, since technology can also safeguard confidential information exchanged between parties and their lawyers. Online dispute resolution (ODR) also took place, with meetings held entirely online.

File sharing is inevitable in the practice of law, yet the most common ways of sharing (think email) are not always the most secure. The boom in remote offices has brought an increased need for alternative file-sharing solutions. Data encryption is a reliable method of protecting confidential data and information.

Conclusion:

Technology has been playing a vital role in the legal industry and has increased the efficiency of legal offices and the productivity of clerical workers. With the advent of legal tech, there is greater transparency between law firms and clients. Clients know how much they must pay in fees and can track the day-to-day progress of the lawyer on their case. There is also no doubt that technology, used correctly, is faster and more efficient than any individual human, which can be of great assistance to any law firm. The lawyers of the future will be the ones who create the systems that solve their clients’ problems. These legal professionals will include legal knowledge engineers, legal risk managers, system developers, design thinking experts, and others, who will use technology to create new ways of solving legal problems. In many ways, the legal sector is experiencing the same digitization as other industries, and because it is so document-intensive, it stands to benefit greatly from what technology has to offer.

Introduction

In a world where social media dictates public perception and AI-generated content blurs the line between fact and fiction, mis/disinformation has become a national cybersecurity threat. Today, disinformation campaigns are designed for impact, driving political manipulation, interference in public health, financial fraud, and even community violence. India, with its 900+ million internet users, is especially susceptible to this online distortion. The advent of deepfakes, AI-generated text, and hyper-personalised propaganda has made disinformation more plausible and harder to identify than ever.

What is Misinformation?

Misinformation is false or inaccurate information shared without intent to deceive. Disinformation, on the other hand, is content intentionally created and disseminated to mislead, harm, or manipulate. Both contribute to what experts have termed an "infodemic": a deluge of false information that overwhelms people and hinders their ability to make decisions.

Examples of impactful mis/disinformation are:

- COVID-19 vaccine conspiracy theories (e.g., infertility or microchips)

- Election-related false news (e.g., so-called EVM hacking)

- Social disinformation (e.g., manipulated videos of riots)

- Financial scams (e.g., bogus UPI cashbacks or RBI refund plans)

How Misinformation Spreads

Misinformation goes viral because of both technology design and human psychology. Social media sites such as Facebook, X (formerly Twitter), Instagram, and WhatsApp are designed to amplify content that elicits strong emotional reactions, which usually means polarising, sensationalist, or fear-mongering posts. As a result, falsehoods attract far more attention and engagement than authentic facts, and virality is prioritised over truth.

Another major factor is the misuse of generative AI and deepfakes. Applications like ChatGPT, Midjourney, and ElevenLabs can be used to generate highly convincing fake news stories, audio recordings, or videos imitating public figures. Bad actors increasingly misuse these synthetic media assets for political impersonation, fabricated news reports, and even voice-based scams.

Adding to this danger are coordinated disinformation campaigns, commonly run by foreign or domestic actors with particular political or ideological objectives. These campaigns use bot networks on social media, deceptive hashtags, and fabricated images to sway public opinion, especially during politically sensitive events such as elections, protests, or foreign wars. Because they are usually automated with bots and meme-driven propaganda, they are scalable and difficult to trace.

Why Misinformation is Dangerous

Mis/disinformation is a significant threat to democratic stability, public health, and personal security. One of its most pernicious effects is that it undermines public trust: left unchecked, it erodes confidence in core institutions such as the media, the judiciary, and the electoral system. This erosion of trust has the potential to destabilise democracies and heighten political polarisation.

In India, false information has had terrible real-world consequences, especially in inciting violence. False WhatsApp messages about child kidnappers have led to mob lynchings in rural areas. Manipulated religious videos have also sparked communal riots, and false terrorism alerts have caused public panic.

The COVID-19 pandemic also showed how lethal misinformation can be. False claims about vaccine safety, miracle cures, and the origin of the virus led to widespread vaccine hesitancy, the use of dangerous treatments, and even avoidable deaths.

Beyond health and safety, mis/disinformation also fuels financial scams. Cybercriminals exploit people’s fear and curiosity by promoting fake investment opportunities, phishing URLs, and impersonation cons. Victims are tricked into sharing confidential information or sending money through websites that appear to belong to the government or banks, leading to losses in crypto Ponzi schemes, UPI scams, and other frauds.

India’s Response to Misinformation

- PIB Fact Check Unit

The Press Information Bureau (PIB) operates a fact-checking service to debunk viral false information, particularly about government policies. Over a three-year period, the unit identified more than 1,500 misinformation posts across media.

- Indian Cybercrime Coordination Centre (I4C)

Working under the Ministry of Home Affairs (MHA), I4C has collaborated with social media platforms to identify the sources of viral misinformation. Through the Cyber Tipline, citizens can report misleading content via the 1930 helpline or cybercrime.gov.in.

- IT Rules (The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, updated as on 6 April 2023)

The Information Technology (Intermediary Guidelines) Rules were updated to enable the government to address the following aspects:

- Removal of unlawful content

- Platform accountability

- Detection Tools

Certain detection tools act as shields, helping fact-checkers and enforcement bodies to:

- Identify synthetic voice and video scams through technical measures.

- Track misinformation networks.

- Label manipulated media in real-time.

CyberPeace View: Solutions for a Misinformation-Resilient Bharat

- Scale Digital Literacy

Launch "Think Before You Share" programs in rural schools to teach students to check sources, identify clickbait, and avoid resharing fake news.

- Platform Accountability

Technology platforms need to:

- Flag manipulated media.

- Offer algorithmic transparency.

- Mark AI-created media.

- Provide localised fact-checking across diverse Indian languages.

- Community-Led Verification

Establish WhatsApp and Telegram "Fact Check Hubs" led by expert organisations, industry experts, journalists, and digital volunteers who can report fake content at the grassroots level.

- Legal Framework for Deepfakes

Formulate targeted legislation under the Bharatiya Nyaya Sanhita (BNS) and other relevant laws to make the malicious use of deepfakes and synthetic media a criminal offence in cases of:

- Electoral manipulation.

- Defamation.

- Financial scams.

- AI Counter-Misinformation Infrastructure

Invest in public sector AI models trained specifically to identify:

- Coordinated disinformation patterns.

- Botnet-driven hashtag campaigns.

- Real-time viral fake news bursts.

Conclusion

Mis/disinformation is more than just a content issue; it is a public health, cybersecurity, and democratic stability challenge. As India enters a digitally empowered era, building a secure, informed, and resilient information ecosystem is no longer a choice but an imperative. Fighting misinformation demands a whole-of-society effort that combines AI innovation, public education, regulatory overhaul, and tech responsibility. The danger is real, but so is the opportunity to guide the world toward a fact-first, trust-based digital age. It's time to act.

References

- https://www.pib.gov.in/factcheck.aspx

- https://www.meity.gov.in/static/uploads/2024/02/Information-Technology-Intermediary-Guidelines-and-Digital-Media-Ethics-Code-Rules-2021-updated-06.04.2023-.pdf

- https://www.cyberpeace.org

- https://www.bbc.com/news/topics/cezwr3d2085t

- https://www.logically.ai

- https://www.altnews.in

Executive Summary

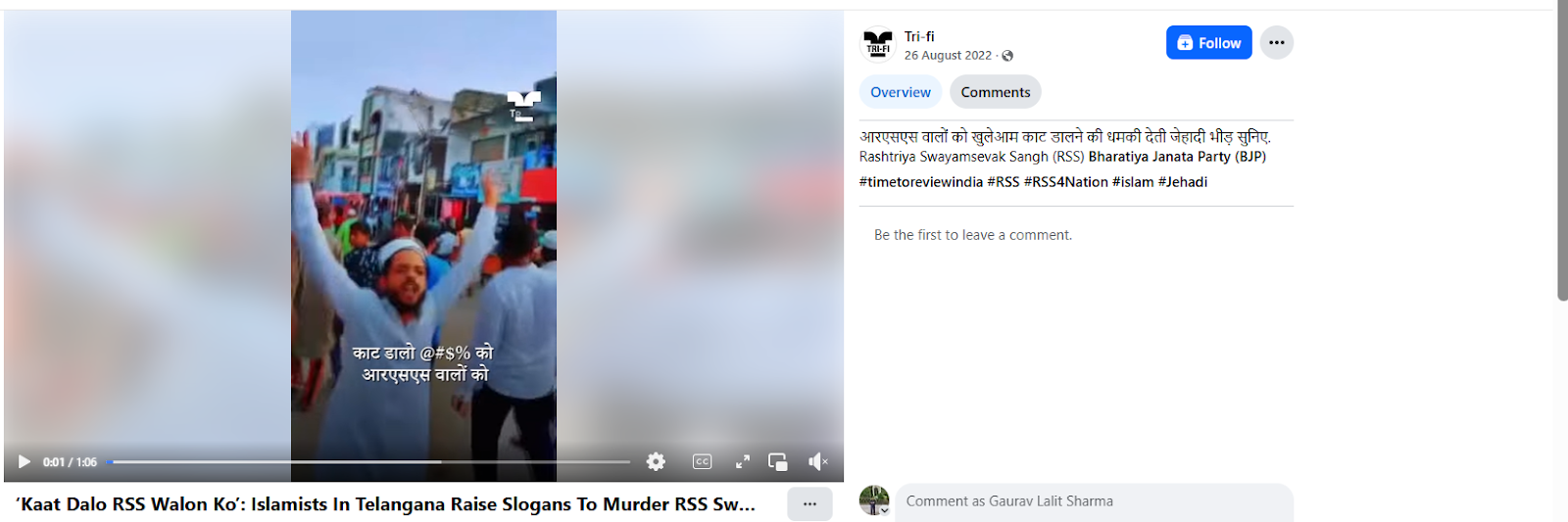

A video showing a group of people wearing Muslim caps raising provocative slogans against the Rashtriya Swayamsevak Sangh (RSS) is being widely shared on social media. Users sharing the clip claim that the incident took place recently in Uttar Pradesh. However, CyberPeace research found the claim to be false. The probe established that the video is neither recent nor related to Uttar Pradesh. In fact, the footage dates back to 2022 and is from Telangana. The slogans heard in the video were raised during a protest against Goshamahal MLA T. Raja Singh, and the clip is now being circulated with a misleading claim.

Claim

On January 21, 2026, a user on social media platform X (formerly Twitter) shared the video claiming it showed people in Uttar Pradesh chanting slogans such as, “Kaat daalo saalon ko, RSS walon ko” and “Gustakh-e-Nabi ka sar chahiye.” The post suggested that such slogans were being raised openly in Uttar Pradesh despite strict law enforcement. Links to the post and its archive are provided below.

Fact Check:

To verify the claim, CyberPeace research conducted a reverse image search using keyframes from the viral video. The same footage was found on a Facebook account where it had been uploaded on August 26, 2022, indicating that the video is not recent.

Further verification led the team to a report published by news portal OpIndia on August 25, 2022, which featured identical visuals from the viral clip. According to the report, the video showed a protest march organised against BJP MLA T. Raja Singh following his alleged controversial remarks about Prophet Muhammad. The report identified one of the individuals in the video as Kaleem Uddin, who was allegedly heard raising the slogan “Kaat daalo saalon ko,” to which the crowd responded “RSS walon ko.” The slogan was linked to incitement against RSS members.

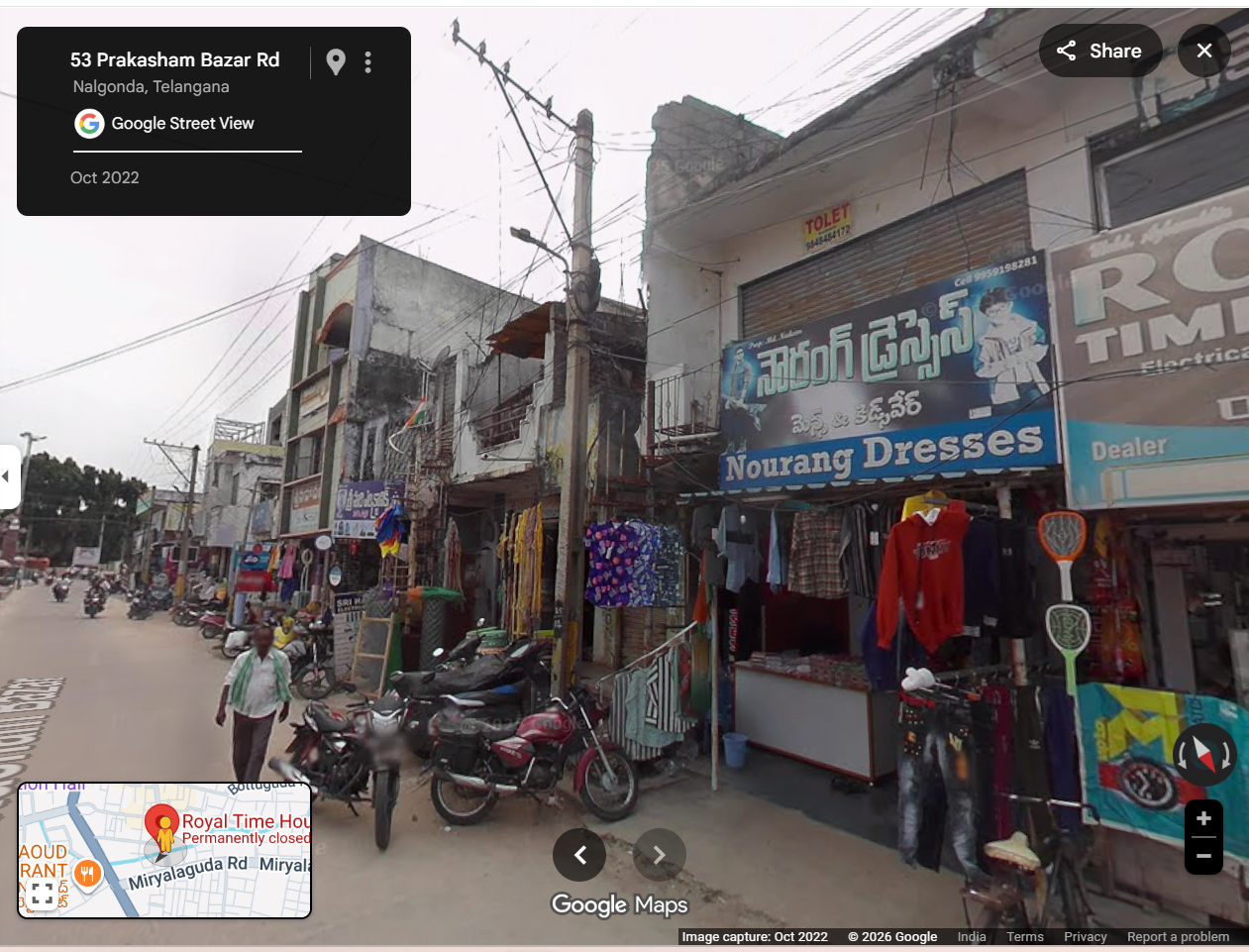

To confirm the location, the video was examined closely. A shop sign reading “Royal Time House” was visible in the footage. Using Google Street View, the same shop was located in Nalgonda, Telangana, conclusively establishing that the video was filmed there and not in Uttar Pradesh.

Conclusion

CyberPeace research confirmed that the viral video is from 2022 and was recorded in Telangana, not Uttar Pradesh. The clip is being falsely circulated with a misleading claim to give it a communal and political angle.