#FactCheck: Fake phishing link claims the Modi Government is giving ₹5,000 to all Indian citizens via UPI

Executive Summary:

A viral social media message claims that the Indian government is offering a ₹5,000 gift to citizens in celebration of Prime Minister Narendra Modi’s birthday. However, this claim is false. The message is part of a deceptive scam that tricks users into transferring money via UPI, rather than receiving any benefit. Fact-checkers have confirmed that this is a fraud using misleading graphics and fake links to lure people into authorizing payments to scammers.

Claim:

The post circulating widely on platforms such as WhatsApp and Facebook states that every Indian citizen is eligible to receive ₹5,000 as a gift from the current Union Government on the Prime Minister’s birthday. The post includes visuals of PM Modi, BJP party symbols, and UPI app interfaces such as PhonePe or Google Pay, and urges users to click on the BJP Election Symbol [Lotus] or on the provided link to receive the gift directly into their bank account.

Fact Check:

Our research indicates that there is no official announcement or credible article supporting the claim that the government is offering ₹5,000 under the Pradhan Mantri Jan Dhan Yojana (PMJDY). This claim does not appear on any official government websites or verified scheme listings.

While the message was crafted to appear legitimate, it was in fact misleading. The intent was to deceive users into initiating a UPI payment rather than receiving one, thereby putting them at financial risk.

A screen popped up showing a request to pay ₹686 to an unfamiliar UPI ID. When the ‘Pay ₹686’ button was tapped, the app asked for the UPI PIN—clearly indicating that this would have authorised a payment straight from the user’s bank account to the scammer’s.
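The scam hinges on the difference between a request to pay and an incoming credit. As a rough illustration, the sketch below assumes the page hands the UPI app a standard `upi://pay` deep link; the source does not say how the request was triggered. The sample link, the payee ID `unknown@examplebank`, and the helper `describe_upi_link` are hypothetical and only show how the direction of the money can be read from such a link.

```python
from urllib.parse import urlparse, parse_qs


def describe_upi_link(link: str) -> dict:
    """Summarise a UPI deep link so the direction of the money is explicit."""
    parsed = urlparse(link)
    if parsed.scheme != "upi":
        raise ValueError("not a UPI deep link")
    params = parse_qs(parsed.query)
    action = parsed.netloc  # "pay" means the user is the one sending money
    return {
        "action": action,
        "payee": params.get("pa", [""])[0],   # pa = payee UPI ID
        "amount": params.get("am", [""])[0],  # am = amount requested
        "user_sends_money": action == "pay",
    }


# Hypothetical link modelled on the ₹686 request described above.
sample = "upi://pay?pa=unknown@examplebank&pn=Gift&am=686&cu=INR"
info = describe_upi_link(sample)
print(info["action"], info["payee"], info["amount"], info["user_sends_money"])
```

A UPI PIN is only needed to send money, never to receive it, so any "gift" screen that asks for the PIN is about to debit the account.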

We advise the public to verify such claims through official sources before taking any action.

Our research indicated that the claim in the viral post is false and part of a fraudulent UPI money scam.

Clicking the link that accompanied the viral Facebook post took us to the website https://wh1449479[.]ispot[.]cc/, which has the somewhat odd domain name 'ispot.cc', certainly not a government-related or commonly known domain. On the website, we observed a number of unauthorized visuals, including images of Prime Minister Narendra Modi and of Union Minister and BJP President J.P. Nadda, the national symbol, the BJP symbol, and the Pradhan Mantri Jan Dhan Yojana logo. These visuals appeared to be used intentionally to convince users that the website was legitimate.
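The domain check described above can be sketched in a few lines. This is a minimal first filter, not a complete verification: it assumes that official Indian government sites are served from `.gov.in` or `.nic.in` hostnames, and the helper names `refang` and `looks_official` are made up for this example.

```python
from urllib.parse import urlparse

# Assumption: official Indian government sites use these hostname suffixes.
OFFICIAL_SUFFIXES = (".gov.in", ".nic.in")


def refang(url: str) -> str:
    """Turn a defanged URL (dots written as [.]) back into a normal URL."""
    return url.replace("[.]", ".")


def looks_official(url: str) -> bool:
    """Return True only if the hostname ends with a known government suffix."""
    host = urlparse(refang(url)).hostname or ""
    return host.endswith(OFFICIAL_SUFFIXES)


print(looks_official("https://wh1449479[.]ispot[.]cc/"))  # the scam page
print(looks_official("https://cybercrime.gov.in"))        # the reporting portal
```

A suffix match only rules out obvious impostors; a claim about a scheme should still be confirmed on the official scheme listing itself.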

Conclusion:

The assertion that the Indian government is handing out ₹5,000 to all citizens is totally false and should be reported as a scam. The message exploits the trust associated with government schemes, tricking users into sending money through UPI to criminals. We recommend that individuals do not click on links or respond to any such message about obtaining a government gift before verifying it. If you or someone you know has fallen victim to this fraud, report it immediately to your bank and through the National Cyber Crime Reporting Portal (https://cybercrime.gov.in), or contact the cyber helpline at 1930. We also recommend always checking messages like this against the official government website first.

- Claim: The Modi Government is distributing ₹5,000 to citizens through UPI apps

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Technology has revolutionized our lives, offering countless benefits and conveniences that make our daily lives more accessible and connected. However, with these benefits come potential challenges and risks that can impact our digital experiences. In this article, we have gathered expert advice on the impact of technology use in our daily lives, including the importance of warranties and tech protection in safeguarding our technology investments. Our experts offer invaluable insights and guidance on using technology safely and effectively while protecting us from unexpected costs or problems. Whether you’re a casual user or a technology enthusiast, our experts’ insights will help you navigate the world of technology with confidence and peace of mind.

How to protect kids from online abuse in the modern era of technology?

“As parents, it’s important to acknowledge that online child abuse is a widespread problem that we need to address. But does this mean we should ban technology from our children’s lives? Absolutely not! Technology is an integral part of our children’s lives, providing numerous benefits. Instead, we should think of practical ways to minimize the risks of online abuse. Many parents today depend on parental control apps like Mobicip to safeguard their children’s online activities and build healthy digital habits. These apps allow parents to supervise their child’s online behavior, restrict screen time, prevent access to certain websites and applications, filter inappropriate content, and get instant notifications about dangerous interactions or inappropriate content. Parents feel reassured and free of worry, knowing that their children’s online surroundings are secure and protected. In addition to using a parental control app, we must also focus on building a strong connection with our kids. By initiating conversations about their digital lives, we can understand their digital world, educate them about the potential risks and dangers of the internet, and teach them how to stay safe. By being proactive and engaged in our children’s digital lives, we can protect them from online abuse while still allowing them to benefit from technology.”

How to increase engagement in online classes via technology?

“Online classes have gained immense popularity in recent years due to their many advantages. However, online educators face several challenges that can impede the effectiveness of their classes, one of which is the lack of engagement from students. To address this challenge, it is crucial to adopt certain strategies that can increase student engagement and create a more meaningful learning environment.

One effective strategy is to limit the number of chokepoints that students may face while enrolling in a class. This can be achieved by using booking and scheduling technology that provides students with a hassle-free experience. The system sends regular reminders and notifications to learners about upcoming classes and assignments, helping them stay organized and committed to their learning.

Technology plays a vital role in improving communication between students and teachers and in increasing student engagement and participation during a session. Utilizing technology such as polling, chat boxes, and breakout rooms enables learners to actively participate in the class and share their perspectives, leading to more effective learning.

Personalization is also essential in creating a meaningful learning environment. A scheduling system can help create customized learning paths for each student, where they can progress at their own pace and focus on topics that interest them. This ensures that each student receives individual attention and is able to learn in a way that suits their learning style.

Moreover, gamification techniques can be used to make the learning process more fun and engaging. This includes using badges, points, and leaderboards to motivate learners to achieve their goals while competing with each other.”

Dr. Sukanya Kakoty, Omnify

How to choose the best due diligence software for your business?

“With a variety of solutions on the market, finding the best due diligence software for your business can be a tedious task. In addition to the software’s capabilities meeting your needs, there are other factors to consider when picking the right solution for your business. As due diligence involves the sharing of sensitive information, security is a key factor to consider. When browsing solutions, it is important to consider if their security features are up to industry standards. User-friendliness is another factor to consider when adopting a new due diligence software. Introducing a new tool should increase team efficiency, not disrupt your existing workflow. When searching for the perfect due diligence solution, pick one that meets the above criteria and more. DealRoom is a lifecycle deal management solution, providing pipeline, diligence, integration, and document management all under one platform. DealRoom’s user-friendly and intuitive features allow for customizable workflows to fit the specific needs of each user. DealRoom also offers industry-leading security features, including data encryption, granular permissions, and detailed audit logs, guaranteeing that your information is always protected. When choosing the best due diligence software for your business, consider a user-friendly, flexible, and secure solution.”

Why are backups important, and what is the safest way of doing them?

“Backups are important for several reasons. They help to protect against data loss, which can be caused by a variety of reasons, such as hardware failure, software corruption, natural disasters, or cyber-attacks. Backups also help to ensure that important data is available when needed, such as in the case of an emergency or system failure. Additionally, backups provide a way to recover deleted or corrupted files. This is also important because the loss of data can result in consequences such as financial loss, damage to reputation, and even legal issues. If not these, then loss of data may also lead to emotional effects in certain cases. A backup, however, can restore data quickly and avoid any significant disruption, emotional or otherwise. The safest way of doing backups is by following the 3-2-1 backup rule. This rule states that you should have at least three copies of your data, stored on at least two different storage media, with one copy stored offsite. This provides redundancy in case of a failure of one storage medium or location.

There are several methods of backing up data, including:

- External hard drives or USB drives: These are inexpensive and portable, making them a popular choice for personal backups. However, they can be lost or damaged, so it is important to keep them in a safe location and make regular backups.

- Cloud backups: These store data on remote servers, which can be accessed from anywhere with an internet connection. This provides an offsite backup solution, but it is important to choose a reputable provider and to ensure that the data is encrypted and secure.

- Network-attached storage (NAS): These are devices that connect to a network and provide centralized storage for multiple devices. They can be configured to automatically back up data from multiple devices on the network.

- Tape backups: These are less common but are still used by some businesses for the long-term storage of large amounts of data.

Regardless of the backup method chosen, it is important to test backups regularly to ensure that they can be successfully restored in case of an emergency.”
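The 3-2-1 rule quoted above is easy to express as a check. The sketch below is only an illustration: the `BackupCopy` record, its fields, and the sample inventory are hypothetical, and it verifies the three counts in the rule, not whether a backup can actually be restored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    label: str     # free-text name for the copy
    medium: str    # e.g. "internal-disk", "external-drive", "cloud", "tape"
    offsite: bool  # True if stored away from the primary location


def satisfies_3_2_1(copies: list[BackupCopy]) -> bool:
    """At least 3 copies, on at least 2 different media, with at least 1 offsite."""
    enough_copies = len(copies) >= 3
    enough_media = len({c.medium for c in copies}) >= 2
    has_offsite = any(c.offsite for c in copies)
    return enough_copies and enough_media and has_offsite


inventory = [
    BackupCopy("working copy", "internal-disk", offsite=False),
    BackupCopy("weekly backup", "external-drive", offsite=False),
    BackupCopy("nightly sync", "cloud", offsite=True),
]
print(satisfies_3_2_1(inventory))
```

Dropping the cloud copy from this inventory fails the check twice over: only two copies remain and none is offsite.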

What are the best trending electric toys for kids in 2023?

“Augmented Reality (AR) Toys: These toys blend the physical and digital world, providing an immersive experience. Popular examples include AR-enabled building sets and interactive storybooks that come to life through an app.

Educational Robotics: Robotics toys like the LEGO Mindstorms Robot Inventor and Sphero’s programmable robots have gained popularity for teaching coding, engineering, and problem-solving skills through hands-on play.”

Aside from security, what are some of the features of a VPN?

“Accessing geo-restricted content: One of the main benefits of using a VPN is its ability to bypass geographical restrictions in the world of flight prices, content, gaming, and more. Many websites and online services are only available in certain countries or regions. With a VPN, you can change your virtual location and access content that is otherwise blocked or unavailable in your country. Imagine you’re playing a game with friends; by using a VPN, you can improve your gaming experience by connecting to a server closer to the game’s host location and bypassing regional restrictions to access features unavailable in your region.

Increased privacy and anonymity: While security and privacy go hand in hand, VPNs offer more than just encryption. They also provide a level of anonymity by masking your IP address and making it difficult for websites and online services to track your online activity. This can be particularly useful for users who are concerned about their online privacy or who want to avoid targeted ads.

Faster internet speeds: Believe it or not, using a VPN can actually improve your internet speed in certain situations. If your internet service provider (ISP) is throttling your internet speed, a VPN can help you bypass this by encrypting your traffic and hiding it from your ISP. Additionally, some VPN providers offer dedicated servers that are optimized for faster speeds, reducing buffering and improving download and upload speeds.”

How can creating a website help a home business?

“As a digital marketing consultant, I once worked with a client who had a home-based bakery business. She struggled to reach new customers beyond her local community and wanted to expand her reach and grow her business. After assessing her needs, I recommended that she create a website for her business. With the website, she could showcase her unique baked goods, provide a platform for online ordering, and expand her reach to customers beyond her local community. The website was designed to be visually appealing and user-friendly while effectively showcasing the brand and products in an attractive way. Additionally, the website was optimized for search engines and integrated with social media platforms to increase visibility and drive traffic to the site. Within a few months of launching the website, she saw an increase in orders. Customers could easily place orders through the website and leave reviews, which provided social proof and helped build trust with potential customers. Data analytics were also used to track customer behavior and make data-driven decisions to improve the website and marketing strategy. The website became a valuable asset for her business, helping her to expand her reach, increase sales, and gather valuable customer data. Overall, creating a website helped her home-based business to grow and thrive in a competitive marketplace.”

Lito James, MassivePeak.com

How can technology be utilized to enhance productivity for remote teams working from home?

“Our team facilitates strategic planning sessions and we’re frequently working with teams that are working remotely. Here are a few of our best tips for productivity:

- Clear strategy: As teams have fewer touch points, it’s critical they are aligned and bought into the direction of the organization.

- Clear big rocks for the week: Make sure you’re not just busy but doing things that will move the needle on your strategic goals.

- Clear accountability: Use Basecamp or other project management tools to help make sure nothing falls through the cracks.

- Clear communication: Schedule the right meetings, so you are connecting at the right times to deliver important work and align on important topics.

- Clear agendas: When you do meet, make sure you’re coming together, staying focused, and getting the most important information across.

Creating the right strategy and the right structure to keep it moving forward will help your team drive your most important outcomes, regardless of whether you’re working in an office or remotely.”

Anthony Taylor, SME Strategy Consulting

How to monitor your kids’ activities online?

“As a father of two kids and the founder of TheSweetBits.com, a website dedicated to providing guides on software and apps, I have extensive experience in monitoring my children’s online activities. Over the years, I have tested and reviewed numerous parental control apps and software to ensure my children’s online safety. When it comes to monitoring your kids’ activity online, it’s crucial to find a balance between keeping them safe and respecting their privacy. One of the most effective ways to achieve this balance is by using parental control software that allows you to set limits on their device usage, restrict access to inappropriate content, and monitor their online activity. However, it’s important to note that parental control software is not a substitute for good communication with your kids. You should have regular conversations with them about online safety and the potential dangers of the internet. By establishing trust and open communication, you can work together to create a safe and responsible online environment for your family. As I always say, ‘Parental control software is just one tool in the toolbox of responsible parenting’.”

How to plan the best online date with the help of technology?

“To plan a date online with the help of technology, we recommend using a reservation app like OpenTable to make dinner reservations. Not only will this let her know you thought ahead with the smaller details, but it will also ensure a smooth date by eliminating a long waiting time for a table to open up.”

What are the benefits of teaching kids to code and how to make it fun?

“The ability to code has become increasingly important in the modern world due to the rapid advancement of technology and its integration into nearly every aspect of our lives. This is something that will only continue as our children grow up and enter the world at large as the demand for workers with coding skills continues to grow rapidly. Teaching kids to code offers numerous benefits beyond just developing technical skills and getting them future-ready. It promotes problem-solving abilities, critical thinking, and logical reasoning, all of which are essential in today’s digital age. Additionally, coding can increase creativity and encourage children to think outside the box. However, it’s important to make coding fun and engaging for kids rather than a dull and tedious task. One way to make it exciting is to introduce gamification elements, such as incorporating fun characters or adding game-like challenges, and there are loads of great apps to get them started with. We love CodeSpark Academy, Lego Boost, and Tynker. Another approach is to encourage group work or peer learning, where children can work together to solve problems and learn from each other. Ultimately, making coding fun and enjoyable can ignite children’s interest in technology and set them on a path to explore new and exciting opportunities in the future.”

Are dating apps useful in the modern dating era and how to find the correct one?

“Dating apps have become a popular tool for modern daters to find potential partners. However, they also come with their own set of problems. One of the biggest issues with current dating apps is that they focus too much on superficial factors such as sexual attraction, rather than deeper compatibility and true attraction. This can lead to frustration and disappointment for users who are seeking more meaningful connections. Fortunately, AI technology is starting to offer solutions to these problems. By using machine learning algorithms and data analysis, apps like Iris Dating are able to help users find potential matches based on more than just superficial qualities. Iris uses a process called “iris training” to learn each user’s unique preferences and suggest compatible matches accordingly. This approach allows users to connect with people to whom they are really attracted, leading to more meaningful and lasting relationships. When it comes to finding the right dating app, it’s important to do your research and choose one that aligns with your values and preferences. Look for apps that prioritize true attraction and use AI technology to help you find meaningful matches. Don’t be afraid to try out multiple apps until you find one that feels right for you. Overall, while dating apps have their flaws, AI-powered apps like Iris offer hope for a more personalized and meaningful dating experience. By leveraging the power of technology, we can make the search for love a little bit easier and a lot more enjoyable.”

What are some IT risk management tips for home businesses?

“As more and more people turn to home-based businesses, IT security for these operations is becoming an increasingly important concern. From data breaches to cyberattacks, the risks you face as a home business owner are the same as those faced by larger enterprises. So, what are some IT risk management tips for home businesses? First, consider using a virtual private network or VPN to enhance the security of your online communications. Additionally, enable two-factor authentication for all of your accounts, including any cloud services you use. Practice good password hygiene, regularly updating passwords and avoiding easily guessable or common passwords. Finally, consider investing in cybersecurity insurance to protect your business in the event of a cyberattack or data breach. By taking these steps to mitigate IT risks, you can help ensure your home business stays secure and successful. Below are some additional suggestions to help you stay vigilant and set up your home business for longevity.

- Keep Your Software Up to Date: One of the best ways to reduce your risk of being hacked is to keep your software up to date. This includes both your operating system and any applications you have installed.

- Use a Firewall: A firewall is a piece of software that helps to block incoming connections from untrusted sources. By blocking these connections, you can help to prevent hackers from gaining access to your system.

- Use Anti-Virus Software: In addition to using a firewall, you should also use anti-virus software. Anti-virus software helps to protect your system from viruses and other malware. These programs work by scanning your system for known threats and then quarantining or deleting any files that are found to be infected.

- Back Up Your Data Regularly: Finally, it is important to back up your data regularly. This way, if your system is ever compromised, you will not lose any important files or data.

- Encrypt Your Data: If you are storing sensitive data on your computer, it is important to encrypt it to protect it from being accessed by unauthorized individuals. Encryption is a process of transforming data into a format that cannot be read without a decryption key. There are many different encryption algorithms that you can use, so make sure to choose one that is appropriate for the type of data you are encrypting.”

What are the benefits of giving your child a smartphone?

“If you’re teetering back and forth on getting your child a smartphone, consider these benefits as you make your decision:

• Connection. Smartphones keep kids in contact with friends and family—for fun and function! From school to extracurricular activities, kids can easily let their parents and caregivers know about late pickups, ride requests, and other changes of plans.

• Safety. If your child or someone they’re with gets hurt, their smartphone could save a life! And with built-in GPS, their location is always accessible. Plus, parental controls and other smartphone safeguards offer even more protection.

• Convenience. Life is a little easier when your child has a smartphone, plain and simple! You can call each other, send texts, and always know where they are.

• Acceptance. These days, it’s uncommon for kids to not have smartphones. With a smartphone, your child feels belonging and inclusion among their peers.

• Development. Having something as valuable as a smartphone teaches children responsibility and accountability. And with a smartphone of their own, your child will learn how to use technology in an appropriate, safe, and disciplined way.

• Education. Smartphones are incredible teaching tools! Educational apps, videos, and games can keep kids sharp. Plus, smartphones can introduce them to new hobbies and interests!”

How is technology promoting health and wellness?

“Technology has revolutionized the healthcare industry and is playing an increasingly important role in promoting health and wellness. Here are some ways in which technology has helped:

Wearable technology: Wearable devices, such as fitness trackers and smartwatches, are becoming increasingly popular. These devices can track a person’s physical activity, sleep patterns, heart rate, and more. They provide valuable insights into a person’s health and wellness and can help motivate them to make positive lifestyle changes.

Telehealth: Telemedicine allows patients to receive medical care remotely through video conferencing, phone calls, or other digital means. It has also allowed fitness professionals to work with more people who aren’t in a local market via video conferencing, webinars, etc.

Mobile Apps: There are a plethora of mobile apps available that promote health and wellness. These apps can help users track their diet and exercise, manage chronic conditions, and access medical information.

Virtual Reality: Virtual reality (VR) is being used in healthcare to treat conditions such as anxiety, phobias, and PTSD. VR can also be used for physical therapy and rehabilitation.

Overall, technology is playing an increasingly important role in promoting health and wellness, and it is likely that we will see even more innovative uses of technology in the healthcare industry in the future.”

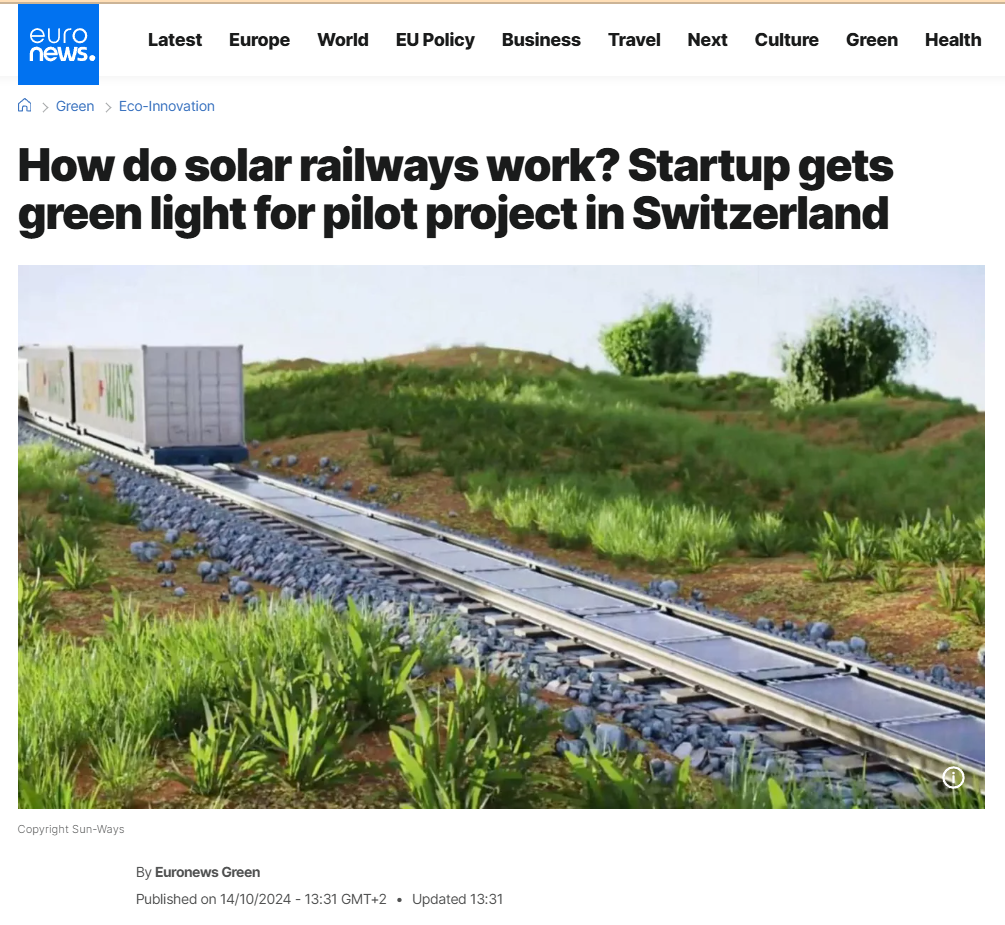

Executive Summary:

Social media has been overwhelmed by a viral post that claims Indian Railways is beginning to install solar panels directly on railway tracks all over the country for renewable energy purposes. The claim also purports that India will become the world's first country to undertake such a green effort in railway systems. Our research involved extensive reverse image searching, keyword analysis, government website searches, and global media verification. We found the claim to be completely false. The viral photos and information are all incorrectly credited to India. The images are actually from a pilot project by a Swiss start-up called Sun-Ways.

Claim:

According to a viral post on social media, Indian Railways has started an all-India initiative to install solar panels directly on railway tracks to generate renewable energy, limit power expenses, and make global history in environmentally sustainable rail operations.

Fact Check:

We did a reverse image search of the viral image and were soon directed to international media and technology blogs referencing a project named Sun-Ways, based in Switzerland. The images circulated on Indian social media were the exact ones from the Sun-Ways pilot project, whereby a removable system of solar panels is being installed between railway tracks in Switzerland to evaluate the possibility of generating energy from rail infrastructure.

We also thoroughly searched the official Indian Railways websites, Ministry of Railways news releases, and credible Indian media. At no point did we find any mention of Indian Railways installing, or planning to install, solar panels on the railway tracks themselves.

Indian Railways has been engaged in green energy initiatives, including solar panel installations on rooftops, on railway land alongside tracks, and on train coach roofs. However, Indian Railways has never installed solar panels on the railway tracks themselves in India. We also found a report on solar panels fitted to a train: the first solar-powered DEMU (diesel electric multiple unit) train, launched on 14th July 2025 from the Safdarjung railway station in Delhi. The train will run from Sarai Rohilla in Delhi to Farukh Nagar in Haryana. A total of 16 solar panels, each producing 300 Wp, are fitted in six coaches.

We also found multiple media reports that support our findings, from Euronews, the World Economic Forum, the Institution of Mechanical Engineers, and NDTV.

Conclusion:

After extensive research conducted in several phases, including a review of the factual and technical details, we can conclude that the claim that Indian Railways has installed solar panels on railway tracks is false. The concept and images originate from Sun-Ways, a Swiss company that was testing this concept in Switzerland, not India.

Indian Railways continues to use renewable energy in a number of forms but has not put any solar panels on railway tracks. We want to highlight how important it is to fact-check viral content and other unverified content.

- Claim: India’s solar track project will help Indian Railways run entirely on renewable energy.

- Claimed On: Social Media

- Fact Check: False and Misleading

A photograph showing a massive crowd on a road is being widely shared on social media. The image is being circulated with the claim that people in the United States are staging large-scale protests against President Donald Trump.

However, CyberPeace Foundation’s research has found this claim to be misleading. Our fact-check reveals that the viral photograph is nearly eight years old and has been falsely linked to recent political developments.

Claim:

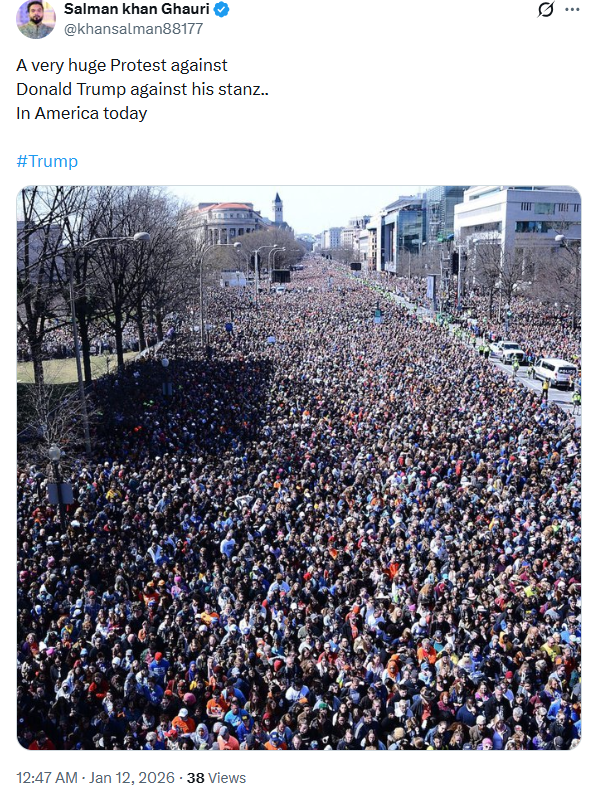

Social media users are sharing a photograph and claiming that it shows people protesting against US President Donald Trump. An X (formerly Twitter) user, Salman Khan Gauri (@khansalman88177), shared the image with the caption: “Today, a massive protest is taking place in America against Donald Trump.”

The post can be viewed here, and its archived version is available here.

Fact Check:

To verify the claim, we conducted a reverse image search of the viral photograph using Google. This led us to a report published by The Mercury News on April 6, 2018.

The report features the same image and states that the photograph was taken on March 24, 2018, during the ‘March for Our Lives’ rally in Washington, DC. The rally was organized to demand stricter gun control laws in the United States. The image shows a large crowd gathered on Pennsylvania Avenue in support of gun reform.

The report further notes that the Associated Press, on March 30, 2018, debunked false claims circulating online which alleged that liberal billionaire George Soros and his organizations had paid protesters $300 each to participate in the rally.

Further research led us to a report published by The Hindu on March 25, 2018, which also carries the same photograph. According to the report, thousands of Americans across the country participated in ‘March for Our Lives’ rallies following a mass shooting at a school in Florida. The protests were led by survivors and victims, demanding stronger gun laws.

The objective of these demonstrations was to break the legislative deadlock that has long hindered efforts to tighten firearm regulations in a country frequently rocked by mass shootings in schools and colleges.

Conclusion:

The viral photograph is nearly eight years old and is unrelated to any recent protests against President Donald Trump. The image actually depicts a gun control protest held in 2018 and is being falsely shared with a misleading political claim. By circulating this outdated image with an incorrect context, social media users are spreading misinformation.