#FactCheck: AI-Generated Audio Falsely Claims COAS Admitted to Loss of 6 Jets and 250 Soldiers

Executive Summary:

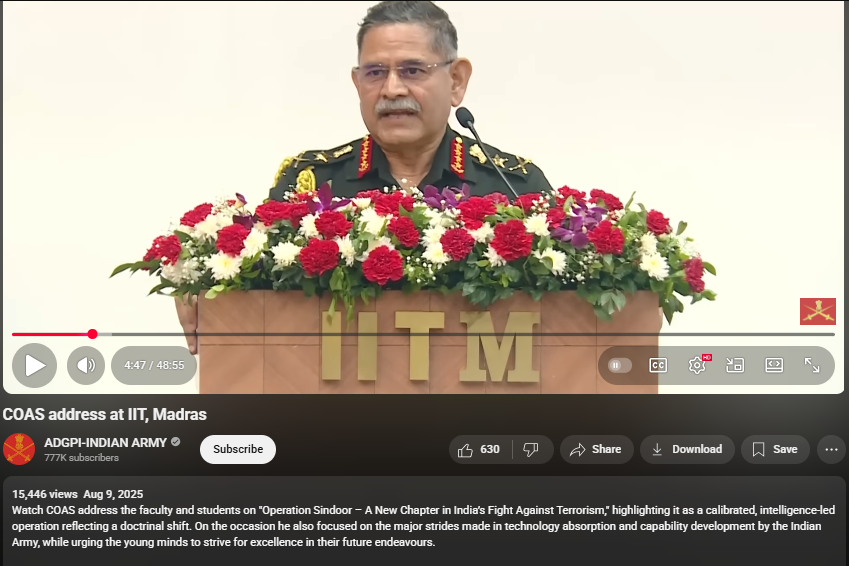

A viral video claims that General Upendra Dwivedi, Chief of Army Staff (COAS), admitted to losing six Air Force jets and 250 soldiers during clashes with Pakistan. Verification revealed that the footage comes from his speech at IIT Madras, in which he made no such statement. AI detection tools flagged parts of the audio as artificially generated.

Claim:

The claim in question is that General Upendra Dwivedi, Chief of Army Staff (COAS), admitted to losing six Indian Air Force jets and 250 soldiers during recent clashes with Pakistan.

Fact Check:

A reverse image search on key frames from the video showed that the original footage was recorded at IIT Madras, where the Chief of Army Staff (COAS) was delivering a speech. The video is available on the official YouTube channel of ADGPI – Indian Army, where it was published on 9 August 2025 with the following description:

“Watch COAS address the faculty and students on ‘Operation Sindoor – A New Chapter in India’s Fight Against Terrorism,’ highlighting it as a calibrated, intelligence-led operation reflecting a doctrinal shift. On the occasion, he also focused on the major strides made in technology absorption and capability development by the Indian Army, while urging young minds to strive for excellence in their future endeavours.”

A review of the full speech revealed no reference to the destruction of six jets or the loss of 250 Army personnel. This indicates that the circulating claim is not supported by the original source and may contribute to the spread of misinformation.

We also ran the audio through AI detection tools such as Hive Moderation. These tools flagged portions of the voice, interspersed between genuine lines, as AI-generated.

Conclusion:

The claim is baseless. The video is a manipulated creation that combines genuine footage of General Dwivedi’s IIT Madras address with AI-generated audio to fabricate a false narrative. No credible source corroborates the alleged military losses.

- Claim: AI-Generated Audio Falsely Claims COAS Admitted to Loss of 6 Jets and 250 Soldiers

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction

The simplest way to acquire cryptocurrency is to buy it on one of the many large digital marketplaces built for this purpose. The amount of cryptocurrency received depends on the amount of money paid, and these marketplaces charge an exchange fee. Once obtained, the digital currency is stored in a digital wallet. It can then be spent in the metaverse or, like real money, used to buy goods and services in the real world. Blockchain technology secures each exchange and keeps it decentralised.

In terms of value and use, cryptocurrency is comparable to gold: when many investors buy the asset, its value rises, and when they sell, it falls. This partly explains why cryptocurrencies have become so popular in recent years. The metaverse is an online space where users can interact with one another through virtual avatars, among other features. Wherever people interact, money and commerce follow.

Web3 is embracing the metaverse. In an environment where conventional currency does not work, Web3 technologies make it possible to use cryptocurrencies instead. Non-Fungible Tokens (NFTs) can be used to track ownership rights in the metaverse, while cryptocurrencies are used to pay for content and reward users. This write-up explains what metaverse crypto is and examines its advantages, disadvantages, and applications.

Convergence of Metaverse and Cryptocurrency

As the main form of money in the metaverse, cryptocurrencies can be used for business and trade in the digital realm. The term "metaverse" describes a simulated reality in which users interact in real time with one another and with a computer-generated environment. Cryptocurrency makes it possible to acquire and exchange virtual products, virtual possessions, and digital creations within the metaverse.

Many cryptocurrencies are built on blockchain technology, which offers an accessible and secure way to verify payments and manage digital assets in the metaverse. Cryptocurrency can also encourage engagement and participation in the metaverse by rewarding users with tokens or other digital currencies for their achievements or contributions.

Cryptocurrency can also enable interoperability in the metaverse, letting users move assets and their value between different virtual worlds and platforms.

The idea of decentralisation in the metaverse, where participants have more ownership and control over their virtual worlds, is consistent with the decentralised nature of cryptocurrencies.

Advantages of Metaverse Cryptocurrency

The metaverse offers countless opportunities for creativity and discovery. Blockchains are publicly accessible, immutable, and cryptographically secured, so metaverse-centric cryptocurrencies offer greater security and flexibility than cash. As the metaverse grows and more people show interest in using it, crypto will be crucial to its evolution. Here are a few of the factors driving the growth of this new virtual environment.

Safety

Your crypto wallet is closely linked to your personal information, progress, and metaverse possessions, which makes its security critical. Cybercriminals may try to steal your money or personal data if the wallet is compromised, especially when your account credentials are weak, exposed, or connected to your real-world identity.

Adaptability

Cryptocurrencies transcend national borders, so digital assets can be accessed and exchanged worldwide. Many metaverse platforms use a native cryptocurrency, which streamlines transactions and eliminates frequent conversions between different digital or fiat currencies. Metaverse cryptocurrencies also benefit from smart contracts: self-executing programs that remove the need for administrative intermediaries when users transact on the network.

Transparency

Blockchain improves accountability by recording interactions in a publicly accessible distributed ledger. Because blockchain transactions are public, dishonest actors find it harder to inflate the prices of digital goods and land. Metaverse cryptocurrencies are also frequently used to vote on project changes, and smart contracts make the outcomes of these governance votes public.
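A hash chain, the basic data structure that blockchains build on, can illustrate the tamper-evidence behind this kind of public record. The sketch below is a minimal, single-machine illustration under stated assumptions. The `Block`, `build_chain`, and `is_valid` names and the sample transactions are hypothetical. It also omits the distributed consensus, digital signatures, and peer-to-peer replication that real networks rely on. Each block stores the SHA-256 hash of the previous block, so altering any past transaction breaks the chain of hashes and is detected.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


@dataclass
class Block:
    index: int
    transactions: list  # hypothetical transaction records (plain dicts)
    prev_hash: str
    block_hash: str = field(init=False)

    def __post_init__(self) -> None:
        # Seal the block: its hash covers its contents and the previous block's hash.
        self.block_hash = self.compute_hash()

    def compute_hash(self) -> str:
        payload = json.dumps(
            {"index": self.index, "transactions": self.transactions, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(batches: list) -> list:
    """Link each batch of transactions to the block before it."""
    chain = []
    prev_hash = GENESIS_PREV_HASH
    for i, txs in enumerate(batches):
        block = Block(i, txs, prev_hash)
        chain.append(block)
        prev_hash = block.block_hash
    return chain


def is_valid(chain: list) -> bool:
    """Recompute every hash; any edit to past data breaks the links."""
    prev_hash = GENESIS_PREV_HASH
    for block in chain:
        if block.prev_hash != prev_hash or block.block_hash != block.compute_hash():
            return False
        prev_hash = block.block_hash
    return True


ledger = build_chain([
    [{"from": "alice", "to": "bob", "item": "parcel-42", "price": 10}],
    [{"from": "bob", "to": "carol", "item": "parcel-42", "price": 12}],
])
print(is_valid(ledger))  # True

ledger[0].transactions[0]["price"] = 1  # quietly rewrite history
print(is_valid(ledger))  # False
```

On its own, a hash chain only makes tampering detectable. Someone holding the only copy could recompute every later hash to hide an edit. On a real blockchain, the copies held by many other participants would no longer match, which is why the distributed part matters.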

NFTs, Virtual Worlds, and Digital Currencies

NFTs offer another way to use cryptocurrency in metaverse transactions. They are unique digital records that can carry significant value.

To display a digital artwork in the metaverse, a creator must turn it into a virtual object or virtual world. Artists produce one-of-a-kind, serialised pieces, each linked to an NFT that can be bought with cryptocurrency.

Applications of Metaverse Cryptocurrency

Web2 metaverse projects use fiat money or standalone virtual currencies such as Robux to pay for goods, real estate, and services. Fiat can pay for goods and fund project development, but it lacks the flexibility of cryptocurrencies with smart contract capabilities. In decentralised metaverses, users can stake the network's virtual currency to take part in governance, and that currency also serves all the functions of fiat money.

Banking operations

Lending digital cash to purchase metaverse land is possible. Banks that have already made inroads into the metaverse include HSBC and JPMorgan, both of which possess virtual real estate. "We are making our foray into the metaverse, allowing us to create innovative brand experiences for both new and existing customers," said Suresh Balaji, chief marketing officer for HSBC in Asia-Pacific.

Purchasing

Online commerce is an increasingly important part of the metaverse. Users can interact with real-world brands, tour simulated malls, and try on virtual apparel for their avatars. Adidas, for instance, debuted an NFT line in 2021 that included customisable wearables for The Sandbox. NFT buyers could also claim the physical goods associated with their NFTs, bridging the virtual and real worlds.

Governance

Cryptocurrency is frequently used to govern metaverse projects. Decentraland, a well-known Ethereum-based metaverse with virtual reality features, lets users submit and vote on proposals provided they hold specific tokens.
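Token-gated governance of the kind described for Decentraland can be sketched as a token-weighted tally. This is a simplified, hypothetical model, not Decentraland's actual voting system. The balances, the `min_tokens` proposal threshold, and the function names are assumptions for illustration. Each wallet's voting power equals its token balance, and wallets with no tokens are ignored. Votes are keyed by wallet, so each wallet casts at most one vote.

```python
from __future__ import annotations

from collections import defaultdict


def can_submit(balances: dict[str, int], proposer: str, min_tokens: int = 1000) -> bool:
    """Hypothetical rule: only wallets holding at least `min_tokens` may submit proposals."""
    return balances.get(proposer, 0) >= min_tokens


def tally(balances: dict[str, int], votes: dict[str, str]) -> dict[str, int]:
    """Sum token-weighted voting power per choice; one entry per wallet."""
    totals: defaultdict[str, int] = defaultdict(int)
    for wallet, choice in votes.items():
        power = balances.get(wallet, 0)  # voting power = token balance
        if power > 0:  # wallets without tokens cannot influence the result
            totals[choice] += power
    return dict(totals)


balances = {"alice": 1500, "bob": 300, "carol": 700}  # hypothetical holdings
print(can_submit(balances, "alice"))  # True
print(can_submit(balances, "bob"))    # False

votes = {"alice": "yes", "bob": "no", "carol": "no", "dave": "yes"}
print(tally(balances, votes))  # {'yes': 1500, 'no': 1000}
```

Weighting votes by balance gives large holders more influence. Real systems often add delegation or other adjustments, which this sketch leaves out.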

Conclusion

Combining virtual worlds with cryptocurrencies creates new opportunities for trade, innovation, and communication. Blockchain brings greater transparency, security, and flexibility. By enabling exclusive ownership of digital assets, NFTs make the metaverse even more immersive. In the metaverse, cryptocurrencies are used in banking, shopping, and governance, forming a user-driven, autonomous digital world. The convergence of cryptocurrencies and the metaverse will transform how we engage in online activities, creating a dynamic environment that presents both opportunities and challenges.

References

- https://www.telefonica.com/en/communication-room/blog/metaverse-and-cryptocurrencies-what-is-their-relationship/

- https://hedera.com/learning/metaverse/metaverse-crypto

- https://www.linkedin.com/pulse/unleashing-power-connection-between-cryptocurrency-ai-amit-chandra/

Executive Summary:

A viral video circulating on social media claims that the Indian men's 4x400m relay team recently broke the Asian record and qualified for the final of the World Athletics Championships. The fact check reveals that this is not a recent event. The race took place at the World Athletics Championships in Budapest, Hungary, in August 2023. The Indian team of Muhammed Anas Yahiya, Amoj Jacob, Muhammed Ajmal Variyathodi, and Rajesh Ramesh clocked 2 minutes 59.05 seconds, finishing second behind the USA in their heat and breaking the Asian record. Although they performed very well in the heats, they finished fifth in the final. The video is being re-uploaded with false claims that the record is recent.

Claims:

The claim is that the Indian men's 4x400m relay team recently set an Asian record and qualified for the final of the World Athletics Championships.

Fact Check:

A video of the Indian men's 4x400m relay team setting a new Asian record has recently gone viral across social media, and many viewers believe it shows a recent achievement. After receiving the posts, we ran keyword searches and found related posts on various social media platforms. We also found an article published by 'The Hindu' on August 27, 2023.

According to the article, the Indian team competed at the World Athletics Championships in Budapest, Hungary, and delivered a strong performance. The team of Muhammed Anas Yahiya, Amoj Jacob, Muhammed Ajmal Variyathodi, and Rajesh Ramesh completed the race in 2 minutes 59.05 seconds, finishing second behind the USA in their heat.

The previous Asian record, 3:00.25, had been set in 2021.
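Converting the reported times to seconds makes the margins explicit. This worked arithmetic uses only the figures given in this fact check: India's 2:59.05, the USA's 2:58.47, and the previous Asian record of 3:00.25.

```latex
\begin{aligned}
3{:}00.25 &= 180.25\ \text{s} && \text{(previous Asian record, 2021)}\\
2{:}59.05 &= 179.05\ \text{s} && \text{(India, Budapest 2023)}\\
2{:}58.47 &= 178.47\ \text{s} && \text{(USA, Budapest 2023)}\\[4pt]
180.25 - 179.05 &= 1.20\ \text{s} && \text{(improvement on the old record)}\\
179.05 - 178.47 &= 0.58\ \text{s} && \text{(India's margin behind the USA)}
\end{aligned}
```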

The new mark was an Asian record and a historic moment for India. Despite this success, the video is being reshared with captions implying that it shows a recent event, which has caused confusion. We also found various social media posts from August 26, 2023, including the same video on the official X account of Prime Minister Narendra Modi. The caption of the post reads, “Incredible teamwork at the World Athletics Championships!

Anas, Amoj, Rajesh Ramesh, and Muhammed Ajmal sprinted into the finals, setting a new Asian Record in the M 4X400m Relay.

This will be remembered as a triumphant comeback, truly historical for Indian athletics.”

This confirms that the video does not show a recent event. It is from the World Athletics Championships held in Budapest, Hungary, in August 2023.

Conclusion:

The claim that the viral video shows a recent Asian record by the Indian men's 4x400m relay team is false. The video is from the World Athletics Championships in Budapest in August 2023. There, the Indian team broke the Asian record with a time of 2 minutes 59.05 seconds, finishing second in its heat behind the US team, which clocked 2 minutes 58.47 seconds. Circulating the video as a recent event is misleading.

- Claim: The Indian men's 4x400m relay team recently broke the Asian record and qualified for the World Athletics Championships final.

- Claimed on: X, LinkedIn, Instagram

- Fact Check: Fake & Misleading

Introduction:

Former Egyptian MP Ahmed Eltantawy has been targeted with Cytrox’s Predator spyware in a campaign believed to be state-sponsored cyber espionage. The targeting took place between May and September 2023, after Eltantawy announced his intention to run for president in the 2024 elections. The attackers attempted to deliver the spyware through links sent via SMS and WhatsApp. They also used network injection when Eltantawy visited certain websites. The Citizen Lab analysed the attacks with the help of Google's Threat Analysis Group (TAG). Together they obtained an iPhone zero-day exploit chain designed to install the spyware on iOS versions up to 16.6.1.

Investigation: The Ahmed Eltantawy Incident

The Citizen Lab forensically examined Eltantawy's device and uncovered several attempts to target him with Cytrox's Predator spyware. During the investigation, The Citizen Lab and TAG discovered the iOS exploit chain used in the attacks. They initiated a responsible disclosure process with Apple, which led to the release of updates patching the vulnerabilities the chain exploited. Mobile zero-day exploit chains can be very expensive, fetching millions of dollars on the black market. The Citizen Lab also identified several domain names and IP addresses associated with Cytrox’s Predator spyware. According to the study, network injection was also used to try to get the malware onto Eltantawy's phone. When he visited certain websites that did not use HTTPS, he was silently redirected to a malicious website.

What is Cyber Espionage?

Cyber espionage, also referred to as cyber spying, is a type of cyberattack in which an unauthorised party attempts to obtain confidential or sensitive information or intellectual property (IP) for financial gain, business advantage, or political objectives.

Apple's Response: A Look at iOS Vulnerability Patching

Following Citizen Lab’s disclosure, Apple released emergency updates for iOS, iPadOS, and macOS Ventura to address the flaws. Users are advised to update their devices promptly and to enable Lockdown Mode on iPhones.

The updates address the following vulnerabilities:

- CVE-2023-41991

- CVE-2023-41992

- CVE-2023-41993

Apple customers are advised to install these emergency security updates immediately to protect themselves against targeted spyware attacks. Updating promptly closes these particular zero-day vulnerabilities, although no update can guarantee protection against future exploits. More generally, keep software up to date and enable the security features available on Apple devices.
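As a rough self-check, the sketch below flags whether an iOS version string falls within the range the exploit chain was reported to target (versions up to 16.6.1). It is a minimal illustration under stated assumptions. Versions are plain dotted numbers such as "16.6.1", and the function names are hypothetical. A result of False does not mean a device is patched. Apple's security advisories for the CVEs listed above are the authoritative source for which releases contain the fixes.

```python
from __future__ import annotations

# Upper bound of the iOS range the exploit chain was reported to target (per the Citizen Lab findings).
TARGETED_MAX = (16, 6, 1)


def parse_version(version: str) -> tuple[int, ...]:
    """Turn "16.6" or "16.6.1" into a comparable tuple, padding missing parts with 0."""
    parts = [int(p) for p in version.strip().split(".")]
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def in_targeted_range(version: str) -> bool:
    """True if the version is at or below 16.6.1 (tuples compare element by element)."""
    return parse_version(version) <= TARGETED_MAX


for v in ["15.7", "16.6", "16.6.1", "16.7", "17.0.1"]:
    print(v, in_targeted_range(v))
# 15.7 True, 16.6 True, 16.6.1 True, 16.7 False, 17.0.1 False
```

Comparing integer tuples rather than raw strings avoids a classic bug: as strings, "16.10" would sort before "16.6".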

Conclusion:

Ahmed Eltantawy, a former Egyptian MP and presidential hopeful, was targeted with Cytrox’s Predator spyware after announcing his bid for the presidency. The campaign is believed to be state-sponsored cyber espionage. The investigation's findings show that he was the victim of a sophisticated campaign built around this spyware. The incident raises serious privacy concerns and points to mala fide intent on the part of his political opponents. Apple urges all users to update their devices. The case also raises alarming concerns about the lack of controls on the export of spyware technologies and underscores the importance of security updates and Lockdown Mode on Apple devices.

References:

- https://uksnackattack.co.uk/predator-in-the-wires-ahmed-eltantawy-targeted-by-predator-spyware-upon-presidential-ambitions-announcement

- https://citizenlab.ca/2023/09/predator-in-the-wires-ahmed-eltantawy-targeted-with-predator-spyware-after-announcing-presidential-ambitions/

- https://thehackernews.com/2023/09/latest-apple-zero-days-used-to-hack.html

- https://www.hackread.com/zero-day-ios-exploit-chain-predator-spyware/