#FactCheck - AI-Generated Video Falsely Claims Salman Khan Is Joining AIMIM

A video of Bollywood actor Salman Khan is being widely circulated on social media, in which he can allegedly be heard saying that he will soon join Asaduddin Owaisi’s party, the All India Majlis-e-Ittehadul Muslimeen (AIMIM). Along with the video, a purported image of Salman Khan with Asaduddin Owaisi is also being shared. Social media users are claiming that Salman Khan is set to join the AIMIM party.

CyberPeace research found the viral claim to be false. Salman Khan has not made any such statement, and both the viral video and the accompanying image are AI-generated.

Claim

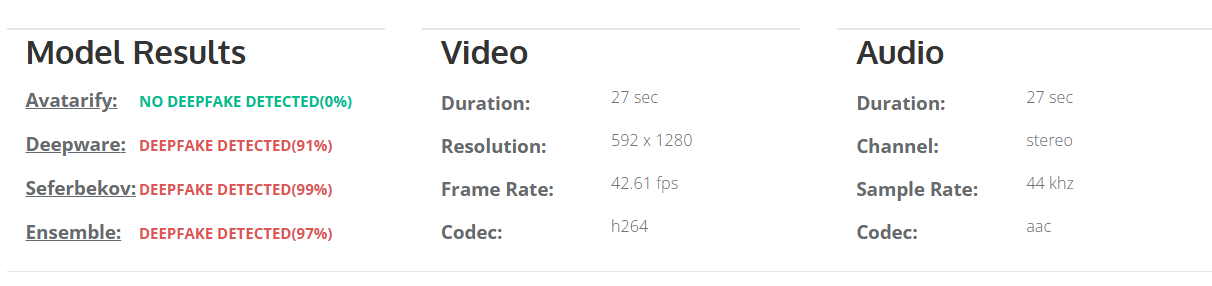

Social media users claim that Salman Khan has announced his decision to join AIMIM. On 19 January 2026, a Facebook user shared the viral video with the caption, “What did Salman say about Owaisi?” In the video, Salman Khan can allegedly be heard saying that he is going to join Owaisi’s party. (The link to the post, its archived version, and screenshots are available.)

Fact Check

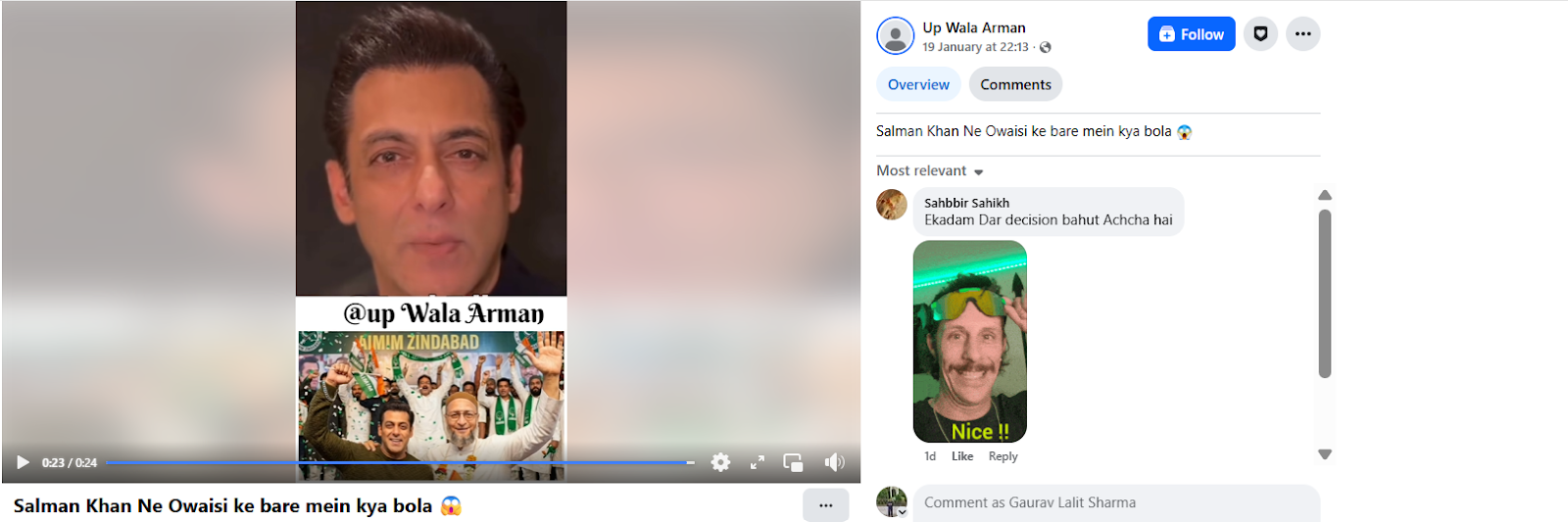

To verify the claim, we first searched Google using relevant keywords. We found no credible media reports supporting the claim that Salman Khan is joining AIMIM.

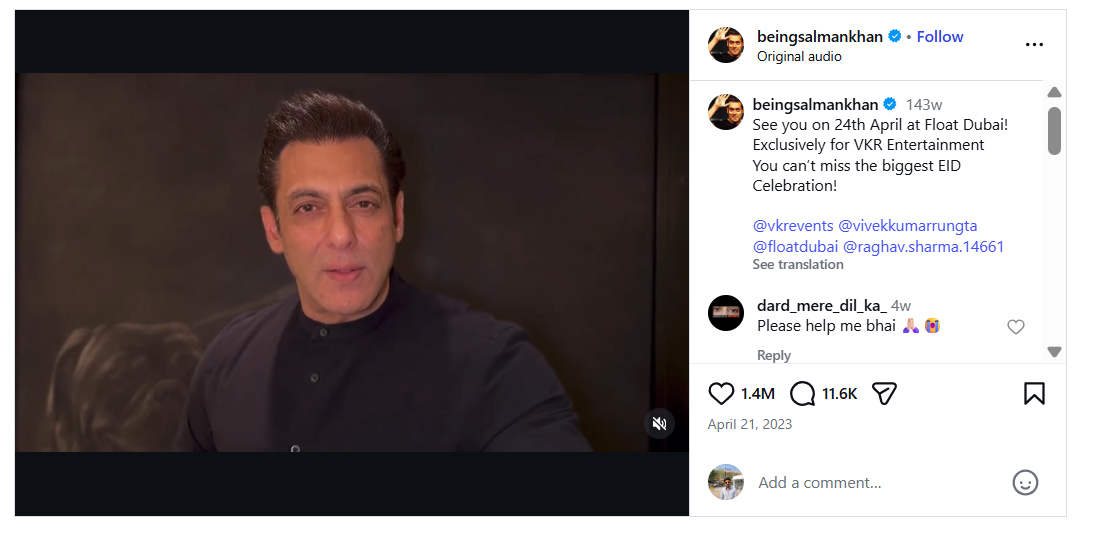

In the next step of verification, we extracted key frames from the viral video and conducted a reverse image search using Google Lens. This led us to a video posted on Salman Khan’s official Instagram account on 21 April 2023. In the original video, Salman Khan is seen talking about an event scheduled to take place in Dubai. A careful review of the full video confirmed that no statement related to AIMIM or Asaduddin Owaisi is made.
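For readers who want to reproduce the frame-extraction step, the following is a minimal sketch in Python, assuming the ffmpeg command-line tool is installed. It is not necessarily the method used in this fact check. The function names, the file names, and the rate of one frame per second are hypothetical choices made for illustration. Note that it samples frames at a fixed rate rather than selecting codec key frames.

```python
import shutil
import subprocess
from pathlib import Path


def build_frame_command(video_path, out_dir, frames_per_second=1):
    """Return an ffmpeg argument list that saves still frames from a video.

    Hypothetical helper: uses ffmpeg's `fps` video filter to sample frames
    at a fixed rate and writes them as numbered PNG files.
    """
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    pattern = str(Path(out_dir) / "frame_%04d.png")
    return [
        "ffmpeg",
        "-i", str(video_path),              # input video
        "-vf", f"fps={frames_per_second}",  # sample N frames per second
        pattern,                            # numbered output images
    ]


def extract_frames(video_path, out_dir, frames_per_second=1):
    """Run ffmpeg to write the sampled frames into out_dir."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    command = build_frame_command(video_path, out_dir, frames_per_second)
    subprocess.run(command, check=True)


# Example (assumed file name): extract_frames("viral_clip.mp4", "frames")
```

The saved images can then be uploaded manually to a reverse image search service such as Google Lens to look for the original source.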

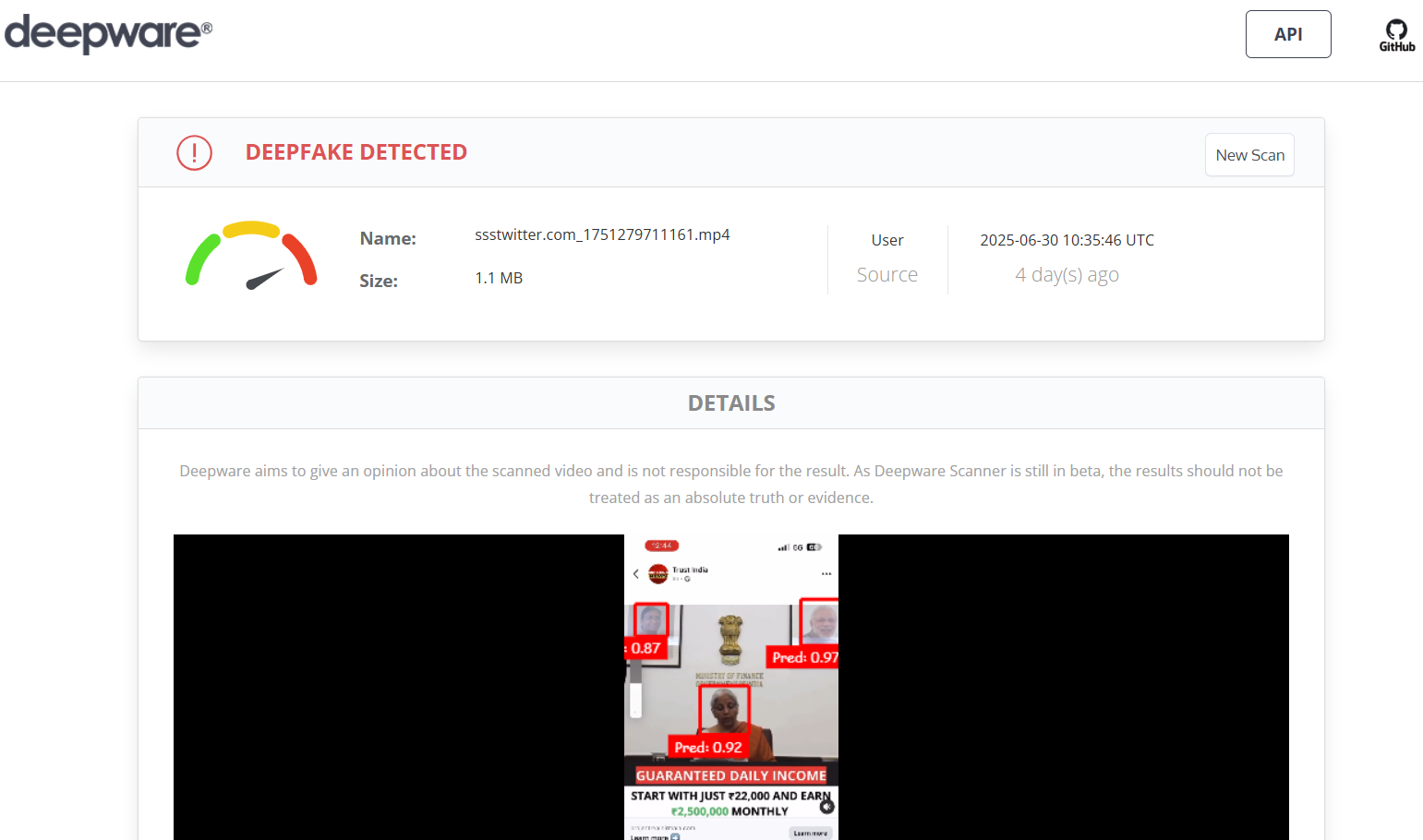

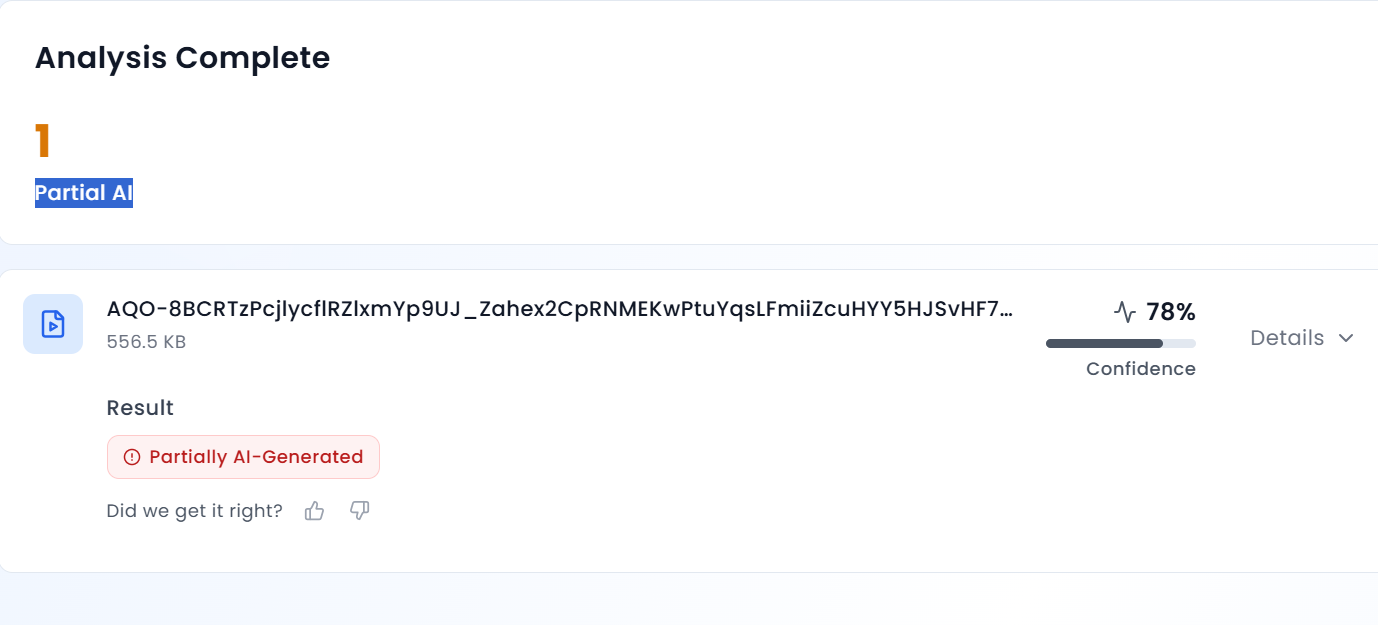

Further analysis of the viral clip revealed that Salman Khan’s voice sounds unnatural and robotic. To verify this, we scanned the video using AURGIN AI, an AI-generated content detection tool. According to the tool’s analysis, the viral video was generated using artificial intelligence.

Conclusion

Salman Khan has not announced that he is joining the AIMIM party. The viral video and the image circulating on social media are AI-generated and manipulated.