Rise of AI Sextortion

Introduction

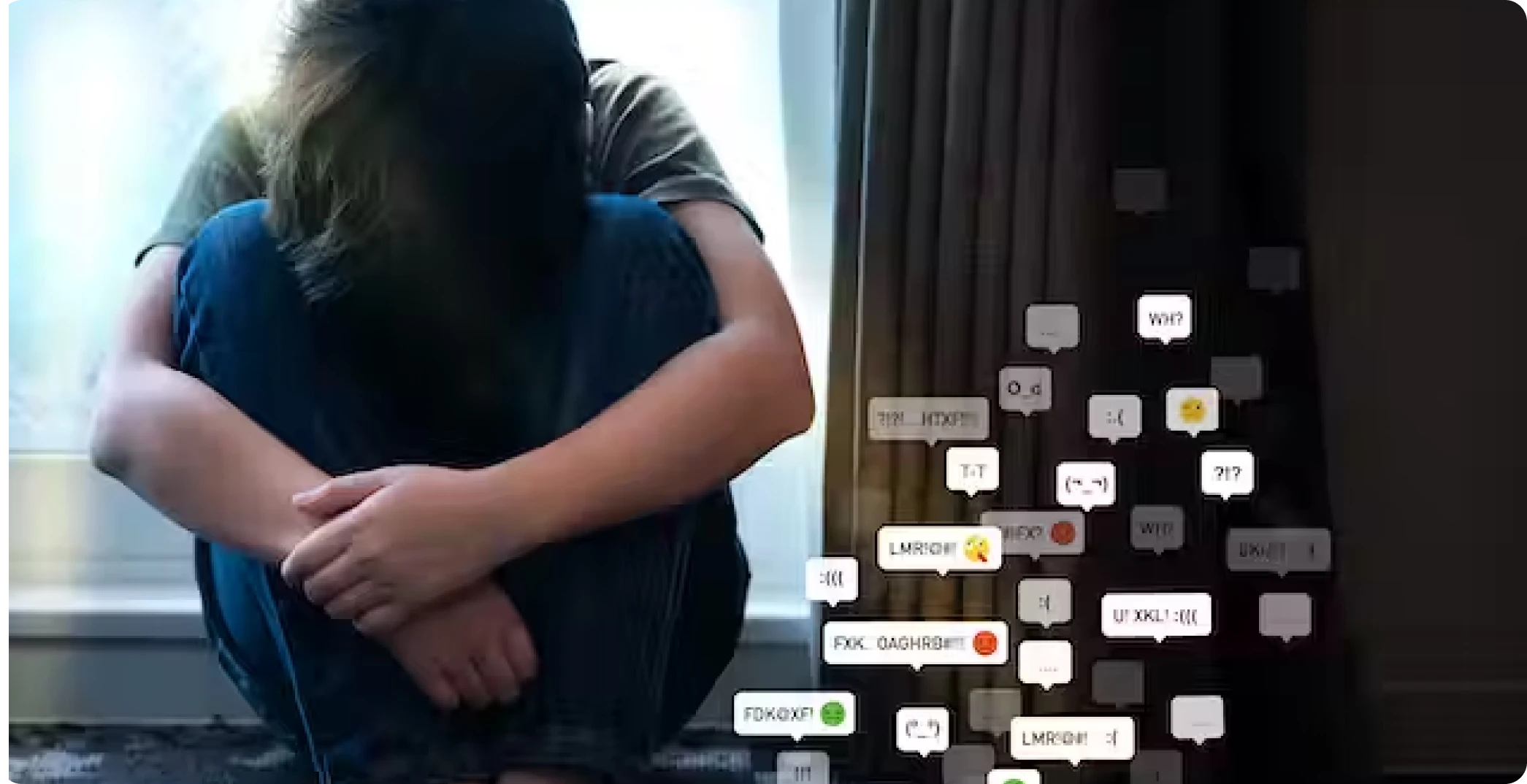

The advent of AI-driven deepfake technology has made it far easier to create counterfeit explicit images and videos, and there has been an alarming increase in the use of such material for sextortion.

What are AI Sextortion and Deepfake Technology?

AI sextortion refers to the use of artificial intelligence (AI) technology, particularly deepfake algorithms, to create counterfeit explicit videos or images for the purpose of harassing, extorting, or blackmailing individuals. Deepfake technology utilises AI algorithms to manipulate or replace faces and bodies in videos, making them appear realistic and often indistinguishable from genuine footage. This enables malicious actors to create explicit content that falsely portrays individuals engaging in sexual activities, even if they never participated in such actions.

Background on the Alarming Increase in AI Sextortion Cases

Recently, there has been a significant increase in AI sextortion cases. Advancements in AI and deepfake technology have made it easier for perpetrators to create highly convincing fake explicit videos or images. The algorithms behind these technologies have become more sophisticated, allowing for more seamless and realistic manipulations. The accessibility of AI tools and resources has also increased, with open-source software and cloud-based services readily available to anyone. This accessibility has lowered the barrier to entry, enabling individuals with malicious intent to exploit these technologies for sextortion purposes.

The proliferation of content sharing on social media

The proliferation of social media platforms and the widespread sharing of personal content online have provided perpetrators with a vast pool of potential victims’ images and videos. By utilising these readily available resources, perpetrators can create deepfake explicit content that closely resembles the victims, increasing the likelihood of success in their extortion schemes.

Furthermore, the anonymity and wide reach of the internet and social media platforms allow perpetrators to distribute manipulated content quickly and easily. They can target individuals specifically or upload the content to public forums and pornographic websites, amplifying the impact and humiliation experienced by victims.

What are law enforcement agencies doing?

The alarming increase in AI sextortion cases has prompted concern among law enforcement agencies, advocacy groups, and technology companies. It is high time to make strong efforts to raise awareness about the risks of AI sextortion, develop detection and prevention tools, and strengthen legal frameworks to address these emerging threats to individuals’ privacy, safety, and well-being.

There is a need for technological solutions: advanced AI-based detection tools should be developed and deployed to identify and flag AI-generated deepfake content on platforms and services, in collaboration with technology companies that can integrate such solutions.

Collaboration with social media platforms is also needed. Social media platforms and technology companies can revise and enforce community guidelines and policies against disseminating AI-generated explicit content. They can also cooperate in developing robust content moderation systems and reporting mechanisms.

There is a need to strengthen the legal frameworks to address AI sextortion, including laws that specifically criminalise the creation, distribution, and possession of AI-generated explicit content. These frameworks should ensure adequate penalties for offenders and include provisions for cross-border cooperation.

Proactive measures to combat AI-driven sextortion

Prevention and Awareness: Proactive measures raise awareness about AI sextortion, helping individuals recognise risks and take precautions.

Early Detection and Reporting: Proactive measures employ advanced detection tools to identify AI-generated deepfake content early, enabling prompt intervention and support for victims.

Legal Frameworks and Regulations: Proactive measures strengthen legal frameworks to criminalise AI sextortion, facilitate cross-border cooperation, and impose penalties on offenders.

Technological Solutions: Proactive measures focus on developing tools and algorithms to detect and remove AI-generated explicit content, making it harder for perpetrators to carry out their schemes.

International Cooperation: Proactive measures foster collaboration among law enforcement agencies, governments, and technology companies to combat AI sextortion globally.

Support for Victims: Proactive measures provide comprehensive support services, including counselling and legal assistance, to help victims recover from emotional and psychological trauma.

Implementing these proactive measures will help create a safer digital environment for all.

Misuse of Technology

Misusing technology, particularly AI-driven deepfake technology, in the context of sextortion raises serious concerns.

Exploitation of Personal Data: Perpetrators exploit personal data and images available online, such as social media posts or captured video chats, to create AI-manipulated content. This manipulation violates privacy rights and exploits the vulnerability of individuals who trust that their personal information will be used responsibly.

Facilitation of Extortion: AI sextortion often involves perpetrators demanding monetary payments, sexually themed images or videos, or other favours under the threat of releasing manipulated content to the public or to the victims’ friends and family. The realistic nature of deepfake technology increases the effectiveness of these extortion attempts, placing victims under significant emotional and financial pressure.

Amplification of Harm: Perpetrators use deepfake technology to create explicit videos or images that appear realistic, thereby increasing the potential for humiliation, harassment, and psychological trauma suffered by victims. The wide distribution of such content on social media platforms and pornographic websites can perpetuate victimisation and cause lasting damage to their reputation and well-being.

Targeting Teenagers: The targeting of teenagers with extortion demands is a particularly alarming aspect of AI sextortion. Teenagers are especially vulnerable because of their heavy use of social media platforms for sharing personal information and images. Perpetrators exploit this vulnerability to manipulate and coerce them.

Erosion of Trust: Misusing AI-driven deepfake technology erodes trust in digital media and online interactions. As deepfake content becomes more convincing, it becomes increasingly challenging to distinguish between real and manipulated videos or images.

Proliferation of Pornographic Content: The misuse of AI technology in sextortion contributes to the proliferation of non-consensual pornography (also known as “revenge porn”) and the availability of explicit content featuring unsuspecting individuals. This perpetuates a culture of objectification, exploitation, and non-consensual sharing of intimate material.

Conclusion

Addressing AI sextortion requires a multi-faceted approach: technological advancements in detection and prevention, legal frameworks to hold offenders accountable, and greater awareness of the risks. It also requires collaboration between technology companies, law enforcement agencies, and advocacy groups to combat this emerging threat and protect the well-being of individuals online.