Law in 30 Seconds? The Rise of Influencer Hype and Legal Misinformation

Introduction

In today's digital age, consuming information and content on social media apps has become a daily part of our lives. These apps' algorithms are designed so that once you like or show interest in a particular category of content, they start showing you much more of it. Alongside this, the hype around becoming a content creator has grown, and people have started making short reels and sharing all kinds of information. There are influencers in every field, including lifestyle, fitness, education, entertainment and vlogging, and now even legal advice.

Online content, reels and viral videos in which social media influencers give legal advice can have far-reaching consequences. ‘LAW’ is a vast subject in which even a single punctuation mark can carry significant meaning. If it is misinterpreted or only partially explained in reels and short videos, the consequences can be serious. Laws apply according to the facts and circumstances of each case, and their application can differ depending on the nature of the case or offence. The ‘swipe for legal advice’ or ‘law in 30 seconds’ trend, together with the growing number of legal influencers, poses a serious problem for the online information landscape. It raises questions about the credibility and accuracy of such advice, since misinformation can mislead the masses, fuel legal confusion and create real risks.

Bar Council of India’s stance against legal misinformation on social media platforms

The Bar Council of India (BCI) on Monday (March 17, 2025) expressed concern over the rise of self-styled legal influencers on social media, stating that many of them lack proper credentials and spread misinformation on critical legal issues. “Incorrect or misleading interpretations of landmark judgments like the Citizenship Amendment Act (CAA), the Right to Privacy ruling in Justice K.S. Puttaswamy (Retd.) v. Union of India, and GST regulations have resulted in widespread confusion, misguided legal decisions, and undue judicial burden,” the body said. The BCI also ordered the mandatory cessation of misleading and unauthorised legal advice by non-enrolled individuals, called for stringent vetting mechanisms for legal content on digital platforms, and emphasised the need for the swift removal of misleading legal information.

Conclusion

Legal misinformation on social media is a growing issue that not only disrupts public perception but also influences real-life decisions. The internet is turning complex legal discourse into a chaotic game of whispers, with influencers sometimes misquoting laws and self-proclaimed "legal experts" offering advice that wouldn't survive in a courtroom. The solution is not censorship, but counterbalance. Verified legal voices need to step up, fact-checking must be relentless, and digital literacy must evolve to keep up with the fast-moving world of misinformation. Otherwise, "legal truth" could be determined by whoever has the best engagement rate, rather than by legislation or precedent.

Introduction

युद्धे सूर्यास्ते युध्यन्तः समाप्तयन्ति (“At sunset, those fighting in battle cease.”) In ancient times, once the sun had set and the day's battle had ended, warriors would lay down their arms and rest, giving their minds and bodies time to recover and prepare for the next day's challenge. Today, as we remain endlessly connected to work through screens and notifications, the Right to Disconnect Bill seeks to restore that same rhythm of rest and renewal in the digital age. By giving individuals the space to disconnect, it aims to restore balance, protect psychological health, and acknowledge that human resilience is not limitless, even in a world dominated by technology.

The Right to Disconnect Bill, 2025, was recently introduced in the Lok Sabha, the lower house of Parliament, as a private member’s bill by Ms. Supriya Sule, MP, during the Winter Session, which began on 1st December 2025.

Understanding the Psychology Behind the Proposed Right to Disconnect Bill

The rationale for this law is grounded in neuroscience. When workers are "always on", their bodies shift into a chronic stress response in which they are flooded with cortisol, the body's main stress hormone. Constant vigilance keeps the nervous system in a state of sympathetic (fight-or-flight) activation and deprives it of the restorative parasympathetic states that genuine recovery requires. Studies have reported that 96% of heavy technology users suffer from anxiety and sleep loss linked to their technology use, a phenomenon informally dubbed "bytemares." When the brain tries to attend to several things at once, its cognitive capacity is spread thin: focus and productivity fall, and stress rises considerably.

The harm is not confined to a single dimension. When the digital fatigue generated by an "always-on culture" becomes chronic, it takes a toll on employees' emotional capacity, disrupts their sleep cycles (particularly REM sleep), and leads to lower melatonin secretion.

Employees in such environments have a 23% higher chance of suffering from burnout, which the World Health Organisation describes as a syndrome resulting from chronic workplace stress that has not been successfully managed, characterised by exhaustion, increased mental distance from or cynicism about one's job, and reduced professional efficacy. The damage to mental health is silent and often goes unnoticed: affected individuals continue to perform productively while their neuroendocrine systems are gradually worn down.

Hence, the intent behind the bill is clear: to prioritise the human dimension by allowing employees, the warriors of the digital age, to pause and recover. It seeks to foster work-life balance without compromising commitment or productivity, reflecting a thoughtful, humane approach in a modern, technology-driven world.

The proposed Right to Disconnect Bill positions itself as a law that can substantially support employees' mental health. It would allow employees to legally disconnect from work-related electronic communication outside the working hours set by their employer, implicitly recognising that the human brain was never meant to be constantly connected.

The Need for Digital Detox from a Scientific Perspective

Digital detoxification is thought to give the brain a chance to reset its dopamine-driven reward cycle, interrupting the loop of instant gratification that notifications constantly reinforce. Employees who disconnect can focus better, remain emotionally stable and lead healthier lives, with measurable effects. The benefits extend beyond individuals: the World Health Organisation has reported that mental health interventions in the workplace can yield a return of about 4:1 on investment through increased productivity and reduced absenteeism.

Digital Detox: Structured Disconnection, Not Digital Rejection

One of the most important aspects of the proposed bill is its acknowledgement of digital detox as a supportive tool. However, digital detox does not mean cutting off technology completely. It means structured, rule-based disengagement that restores cognitive balance. Measures such as limiting notifications after work hours, protecting weekends and holidays from routine communication, and creating offline time zones all support the brain's resetting process. Psychological studies associate such practices with better concentration, emotional control and sleep quality, and ultimately with higher long-term productivity. The proposal to set up digital detox centres and offer counselling services shows that overexposure is not simply a matter of personal indiscipline but a problem rooted in the design of modern work.

Positioning Mental Well-Being as Core

The bill is fundamentally grounded in the constitutional guarantee of Article 21 of the Constitution of India, the Right to Life and Personal Liberty, which courts have interpreted to cover physical and mental health as well as time for leisure. The proposed reform would grant a right not to be available for work outside working hours, meaning that employers could not demand constant availability without facing legal consequences. The Right to Disconnect Bill reflects a growing recognition that, in our digital age, protecting mental well-being is no longer a nice-to-have; it is a must-have. The bill seeks to safeguard recovery periods while recognising that sustainable productivity comes from employees who are rested and mentally healthy, not from a constantly depleted workforce bound by digital chains.

The Psychological Rationale

Psychological analysis indicates that this always-on condition affects productivity in measurable ways. The uninterrupted flow of alerts and communications can overload the brain's ability to distinguish important information from unimportant information. Continuous exposure to alerts dulls attention, so genuinely critical events can go unnoticed. Burnout follows as a natural consequence. Research links the psychological effects of digital overstimulation to anxiety, sleep problems, fatigue and an inability to focus.

Work Culture in the Cybersecurity Realm and Analysis of the Right to Disconnect

Although every sector today demands high productivity and significant commitment from its workforce, cybersecurity professionals, IT engineers, SOC analysts, incident responders, cybersecurity researchers, cyber lawyers and digital operations teams are often caught in a 24x7 loop. This is because they handle uniquely critical responsibilities that, if ignored or delayed, can compromise sensitive systems, data integrity and national security.

This always-on flow of work has quietly but significantly shifted the paradigm. Availability has replaced accountability, and responsiveness is often mistaken for performance. The line between "on duty" and "off duty" blurs when a client escalation or a suspected breach alert rings the phone at midnight. In this way, an unspoken rule develops that workers must be reachable at any hour, because being reachable has become part of the job.

In India, the 48-hour work week, already among the most demanding in the world, has been made even more intense by digital connectivity. Remote and hybrid models have pushed work across spatial and temporal boundaries, producing a psychologically endless workday. As a result, the cyber workforce lives in a constant state of low-grade alertness: never fully asleep, never fully offline. For cybersecurity professionals, wellbeing is not just a personal issue but also a business issue. Mental fatigue can lead to poor decision-making, slower incident response times and more unintentional human errors.

This is where the proposed Right to Disconnect Bill becomes relevant. Implementing it in the cybersecurity realm may require employers to plan for additional teams so that productivity remains unaffected while employees receive the rest and balance they need. This approach not only protects mental well-being but also creates opportunities for new roles, distributes workloads fairly, and strengthens the overall resilience and efficiency of the organisation.

Legislative Intent: The Right to Disconnect as a Preventive Control

In this context, the Right to Disconnect Bill, 2025, introduced in the Lok Sabha as a private member's bill, can be seen as a precautionary measure within the digital risk ecosystem rather than merely an employee welfare initiative. It aims to draw legally enforceable lines between the demands of a job and one's personal life. Its provisions, such as the right not to answer work calls and messages after office hours, protection from dismissal, overtime pay and agreed-upon emergency protocols, are tools for setting new norms rather than restrictions on output.

This mirrors a logic already well established in cyber governance. Even the best systems need planned downtime for patching, upgrades and recovery, and humans should be treated no differently: continuous operation without recovery only increases the likelihood of failure. The Right to Disconnect works as a human-layer security control that reduces the risk of incidents caused by employee fatigue and burnout.

The Legislative Recognition of Human Needs

The Right to Disconnect Bill marks a significant shift in thinking, from seeing disconnection as unprofessional to recognising it as a basic requirement for human dignity and health. The proposed legislation, introduced as a private member's bill, seeks to clearly define the limits of professional and personal time. By giving employees the legal right to disconnect, the bill affirms what psychological science has long told us: people need real breaks to be at their best.

Conclusion

The proposed Right to Disconnect Bill, 2025, is a progressive legislative move. Among other things, it affirms that in a digital world, constant connectivity can undermine both individual health and an organisation's business continuity. A balanced approach is essential, with clearly agreed-upon emergency norms to govern situations in which employees may reasonably and lawfully need to work extra hours. The bill recognises that people are the backbone of the digital ecosystem and need time off to work effectively and securely. In a connected economy, protecting mental bandwidth is as crucial as protecting technical networks, making the Right to Disconnect a key element of sustainable resilience.

From a cybersecurity perspective, no secure digital future can emerge from exhausted minds. Laws like the Right to Disconnect Bill signal a shift in policy thinking towards a strong digital and cyber-secure India. The bill would shift the burden away from individuals adapting to always-on technologies and onto systems, organisations and governance structures, which must respect human limits. By recognising mental well-being as essential to employee welfare, the bill reinforces that resilient work ecosystems depend not only on robust infrastructure and controls but also on well-rested, focused and secure individuals.

References

- https://www.shankariasparliament.com/blogs/pdf/right-to-disconnect-bill-2025

- https://ijlr.iledu.in/wp-content/uploads/2025/04/V5I653.pdf

- https://timesofindia.indiatimes.com/education/news/no-calls-and-emails-after-office-hours-right-to-disconnect-bill-introduced-in-lok-sabha-to-set-workplace-boundaries/articleshow/125806984.cms

- https://www.hindustantimes.com/india-news/what-is-right-to-disconnect-bill-introduced-in-lok-sabha-and-can-it-clear-parliament-101765025582585.html

Executive Summary:

A video went viral on social media claiming to show a bridge collapsing in Bihar. It prompted panic and discussion across various social media platforms. However, an exhaustive inquiry determined that it was not real footage but AI-generated content engineered to look like a real bridge collapse. This is a clear case of misinformation being used to create panic and confusion.

Claim:

The viral video shows a real bridge collapse in Bihar, indicating possible infrastructure failure or a recent incident in the state.

Fact Check:

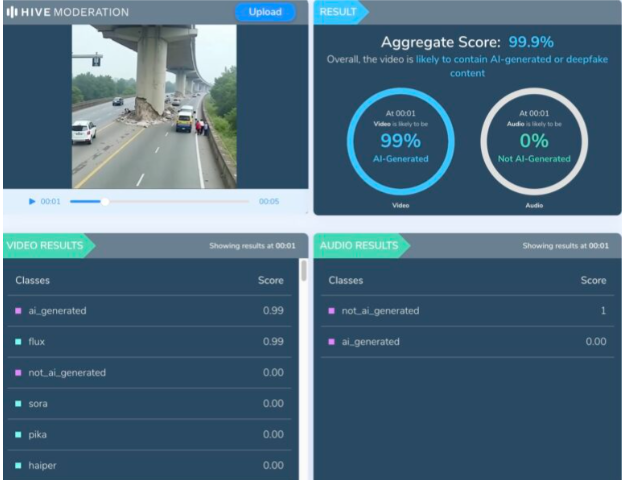

On examining the viral video, we found several visual anomalies suggesting that the footage was artificially generated. These included unnatural movements, people disappearing and unusual debris behaviour. We ran the video through the Hive AI Detector, which confirmed this by giving the content a 99.9% AI-generated score. The environment also lacks realism, and some abrupt, animation-like effects appear that would not typically occur in actual footage.

No credible news outlet or government agency reported a recent bridge collapse in Bihar. Together, these factors clearly indicate that the video is fabricated: it was created using artificial intelligence to mislead viewers into believing it showed a real-life disaster.

Conclusion:

The viral video is fake and has been confirmed to be AI-generated. It falsely claims to show a bridge collapsing in Bihar. Videos like this foster misinformation and illustrate a growing concern about the use of AI-generated videos to mislead viewers.

Claim: A recent viral video captures a real-time bridge failure incident in Bihar.

Claimed On: Social Media

Fact Check: False and Misleading

Introduction

A 33-year-old MBA graduate and a 36-year-old software engineer set up the cybercrime hub in a single bedroom. They formed an unnamed private enterprise two years ago and hired two young people as employees. According to the police, the fraudsters rapidly moved Rs 854 crore through 84 bank accounts over the last two years. They used eight mobile phones that were active day and night for their malicious operations. The group came to the police's attention when a 26-year-old woman filed a complaint stating that she had been lured into making small investments with the promise of high returns and cheated of Rs 8.5 lakh. Her complaint brought the cyber crime police to their doorstep. The police discovered that the group was running a massive cyber fraud network from that single room, luring large numbers of innocent people with fake investment schemes.

How did the cybercrime fraudsters lure their victims?

The Bangalore police have busted a cyber fraud scam worth Rs 854 crore and arrested six accused. The gang deceived numerous victims with fake investment schemes, luring them through WhatsApp and Telegram. Initially, people were asked to invest small amounts and were promised daily profits ranging from Rs 1,000 to Rs 5,000. As trust grew, thousands of victims invested amounts ranging from Rs 1 lakh to Rs 10 lakh. The fraudsters used this money-luring modus operandi to get victims to invest more and more. The money invested was deposited into various bank accounts controlled by the fraudsters, and when the victims tried to withdraw it, they were unable to do so. As soon as the money arrived, the gang would launder it and divert it to other accounts.

Be cautious of online investment fraud

This concerns all of us who invest online. The Bangalore police have busted a cyber investment fraud worth Rs 854 crore. The six gang members arrested by the police approached victims through WhatsApp and Telegram. They convinced victims to invest small amounts, starting from as little as Rs 1,000 to Rs 10,000, and promised them daily returns. They then locked these amounts and diverted them into different bank accounts, ensuring that investors never got access to their money again. Cases linked to this fraud have been registered in various states across the country.

Advisory and best practices

- It is important to note that there could be several other online investment frauds like this that you may not even be aware of. This massive fraud, operated from the IT capital of the country, should act as an eye-opener for all of us. We urge people to stay cautious and to raise the alarm about any such cybercrime or investment fraud they come across online.

- The country has a large number of mobile users, and many of them look to the internet for sources of income and ways to invest their money. It is therefore important to be aware of such frauds and to take proper precautions before investing in any online scheme. It is always advisable to invest only through legitimate sources and only after conducting due diligence.

- Be cautious and do your research: Whenever you invest in any scheme or in digital currency, verify the authenticity and legitimacy of the person or company offering the service. Check reviews, the official website and feedback from authentic sources. Find out whether the agents or brokers who contact you are licensed to operate in your state and are registered with the relevant regulators.

- Verify the credentials: Confirm genuineness by checking the licences, registrations and certifications of the person or company offering the service, and whether they are authorised to offer it.

- Be Skeptical of offers that seem too good to be true: If an offer sounds too good to be true, be cautious and verify its authenticity, particularly if it is unsolicited. Be especially careful if you receive an unsolicited pitch to invest in a particular company, or see one praised online, but cannot find current financial information about it from independent sources. It could be a fraudulent scheme. It is also advisable to compare the promised yields with current returns on well-known stock indexes.

- Seek Expert Advice: If you are a beginner in online investment, you may seek advice from reliable resources such as financial advisors who can provide more clarity on aspects of investment and guidance to help you make informed decisions.

- Avoid Unreliable Platforms: Stick to authorised, established agencies, and be cautious when dealing with any person or company that lacks sufficient user reviews and credible security measures.

- Protect yourself online: Fraudsters target users on online platforms and social media sites to commit various kinds of fraud. Stay alert, and make your own sound decision after careful analysis before investing in any such service.

- Report Suspicious Accounts: If you encounter any social media accounts, social media groups or profiles which seem suspicious and engaged in fraudulent services, you must report such profiles to the respective platform immediately.

- Report cyber crimes to law enforcement agencies: A powerful resource available to victims of cybercrime is the National Cyber Crime Reporting Portal, equipped with a 24x7 helpline number, 1930. This portal serves as a centralised platform for reporting cybercrimes, including financial fraud.
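The advice above about comparing promised yields becomes concrete once a "daily profit" pitch is converted into a yearly figure. The short Python sketch below shows that arithmetic. It rests on assumptions: the Rs 10,000 deposit and Rs 1,000 daily profit are hypothetical figures rather than amounts from this case, and the helper name `implied_annual_return_pct` is illustrative only. The calculation also ignores compounding, so it understates what such a promise would really imply.

```python
def implied_annual_return_pct(daily_profit: float, principal: float, days: int = 365) -> float:
    """Convert a promised fixed daily profit into a simple (non-compounded)
    yearly return, expressed as a percentage of the amount invested.
    Hypothetical helper for illustration only."""
    if principal <= 0:
        raise ValueError("principal must be positive")
    # Multiply before dividing so whole-rupee inputs give an exact result.
    return daily_profit * days * 100 / principal


# Hypothetical pitch: Rs 1,000 profit every day on a Rs 10,000 deposit.
promised = implied_annual_return_pct(daily_profit=1_000, principal=10_000)
print(f"Implied yearly return: {promised:,.0f}%")  # Implied yearly return: 3,650%
```

Even this conservative, non-compounded estimate comes to thousands of per cent a year, far beyond anything a well-known stock index delivers. A gap of that size is a strong sign that the "returns" can only be paid out of other victims' deposits, if they are paid at all.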

Conclusion:

This recent cyber investment fraud worth Rs 854 crore, orchestrated by a group of fraudsters operating from a single room, is a stark reminder of the risks posed by bad actors. It underscores the importance of vigilance when it comes to online investments and financial transactions. As we navigate the vast, interconnected landscape of the internet, we must exercise due diligence and follow best practices to avoid falling victim to such fraudulent schemes. Actively reporting suspicious accounts and cybercrimes to the relevant authorities, through resources like the National Cyber Crime Reporting Portal, will help stop these crimes. Knowledge and awareness are among our strongest defences against cyber fraud in this digital age, and they are key to making the digital environment safer for everyone.

References

- https://www.news18.com/india/bengaluru-cyber-crime-rs-854-crore-84-banks-accounts-fraud-network-one-bedroom-house-yelahanka-karnataka-8618426.html

- https://indianexpress.com/article/cities/bangalore/cyber-crime-bengaluru-links-over-5000-cases-india-8982753/lite/