#FactCheck: Old clip of 2017 Greenland tsunami falsely shared as tsunami in Japan

Executive Summary:

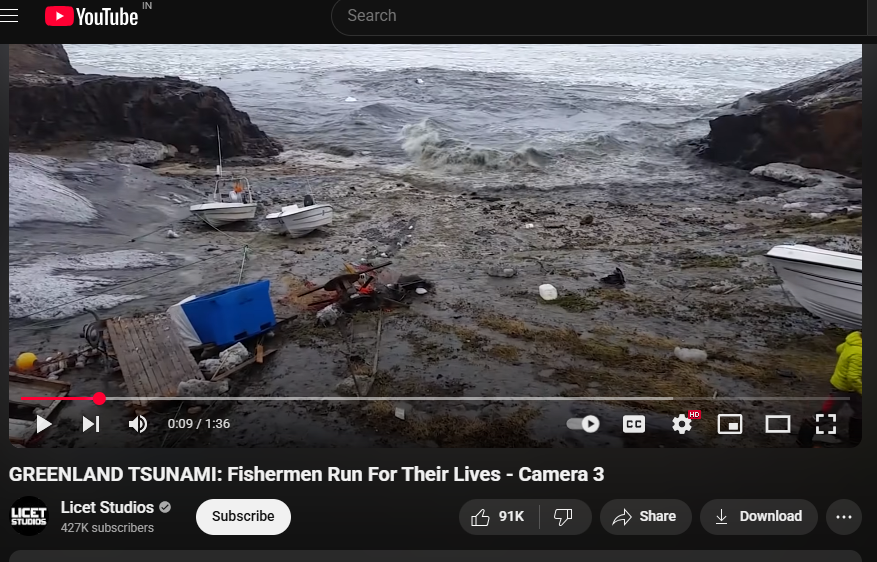

A viral video showing a powerful tsunami wave destroying coastal infrastructure is being falsely linked to the recent tsunami warning in Japan following an earthquake in Russia. A reverse image search reveals that the footage is from a 2017 tsunami in Greenland, triggered by a massive landslide in the Karrat Fjord.

Claim:

A viral video circulating on social media shows a massive tsunami wave crashing into the coastline, destroying boats and surrounding infrastructure. The footage is being falsely linked to the recent tsunami warning issued in Japan following an earthquake in Russia. However, initial verification suggests that the video is unrelated to the current event and may be from a previous incident.

Fact Check:

The video, which shows water forcefully inundating a coastal area, is neither recent nor related to the current tsunami warning in Japan. A reverse image search using keyframes extracted from the viral footage confirms that it is being misrepresented: the video actually shows a tsunami that struck Greenland in 2017. The original footage is available on YouTube and has no connection to the recent earthquake-induced tsunami warning in Japan.

The American Geophysical Union (AGU) confirmed in a blog post on June 19, 2017, that the deadly Greenland tsunami of June 17, 2017, was caused by a massive landslide. The landslide dumped millions of cubic meters of rock into the Karrat Fjord, creating a wave more than 90 meters high; the resulting tsunami destroyed the village of Nuugaatsiaq. The Guardian published a similar report on the event.

Conclusion:

The video purporting to show the effects of a recent tsunami in Japan is misleading: it is footage repurposed from an unrelated 2017 incident in Greenland. Social media users are urged to verify the authenticity of such content before sharing it, particularly during natural disasters, when false information can heighten public anxiety and confusion.

- Claim: Video shows a tsunami hitting Japan after the recent earthquake in Russia

- Claimed On: Social Media

- Fact Check: False and Misleading

Related Blogs

Introduction

In today's digital age, protecting your personal information is of utmost importance. Bad actors are constantly looking for ways to misuse sensitive personal data. The Aadhaar card is a crucial document that all of us use for many purposes: it is an official government-verified ID used for verification, KYC, and even financial transactions. It is used when travel agents book flight tickets, when checking in at hotels, for verification at educational institutions, and more. By gaining unauthorized access to Aadhaar data, bad actors can target victims and commit cyber frauds such as identity theft, unauthorized account access, and financial fraud. It is therefore essential to protect your Aadhaar card details and prevent the misuse of your personal information.

What is fingerprint cloning?

Cybercrooks have been exploiting the Aadhaar Enabled Payment System (AePS). These scams involve cloning people's Aadhaar-linked biometrics using silicone fingerprints and unauthorized biometric devices, then siphoning money from their bank accounts. Fingerprint cloning, also known as fingerprint spoofing, is a technique in which someone replicates another person's fingerprint for unauthorized use, for example to gain unauthorized access to data or to commit identity theft. The process involves two stages: collecting the victim's fingerprint and creating a replica from it.

The recent case of Aadhaar Card fingerprint cloning in Nawada

The Nawada Cyber Police unit has arrested two people involved in a fingerprint-cloning fraud. They are accused of draining money from consumers' bank accounts by cloning their fingerprints. One of the accused runs a Common Service Centre (CSC), while the other is a sweeper at a DBGB bank branch. According to the police, an organized gang of cyber criminals had been defrauding consumers for the past two years with the help of the CSC operator, embezzling money from their accounts by cloning their fingerprints and misusing their Aadhaar numbers. The operator collected consumers' Aadhaar numbers and had them put their thumb impressions on a register. One of the two was accused of withdrawing more money from consumers' accounts than was paid out to them, and sometimes of paying nothing at all after making a withdrawal. The other stole consumers' data from the DBGB branch and prepared clones of their fingerprints. While investigating a fraud case, the Special Investigation Team (SIT) of the Cyber Police raided the Govindpur and Roh police station areas on the basis of technical surveillance and available evidence, and arrested the two.

Safety measures for the security of your Aadhaar Card data

- Locking your biometrics: One way to protect your Aadhaar card from unauthorized use is to lock your biometrics, which you can do through the official UIDAI website. Go to the UIDAI website and select “Lock/Unlock Biometrics” under the Aadhaar Services section. Enter your 12-digit Aadhaar number and the security code, then click the OTP option. An OTP will be sent to the mobile number registered with your Aadhaar. Enter the OTP and click the login button to proceed to the biometric lock. Enter the 4-digit security code shown on the screen and click the “Enable” button. Your biometrics will now be locked, and you will have to unlock them before they can be used for authentication again. The official UIDAI website is https://uidai.gov.in/, and the dedicated Aadhaar helpline number is 1947.

- Use masked Aadhaar Card: A masked Aadhaar card is a version of the Aadhaar card designed to enhance the privacy and security of your Aadhaar number. On a masked Aadhaar card, the first eight of the twelve digits are replaced with “XXXX-XXXX” and only the last four digits are visible, adding an extra layer of protection. To download one, visit the official UIDAI website and click the “Download Aadhaar” option on the homepage. Enter your 12-digit Aadhaar number along with the security code displayed on the screen, then click “Send OTP”. You will receive an OTP on your registered mobile number; enter it in the field provided and click the “Submit” button. When asked to choose the format of your Aadhaar card, select the masked Aadhaar option. Then click the “Download Aadhaar” button, and your masked Aadhaar card will be downloaded. If any organisation requires your Aadhaar for verification, you can share the masked card, which shows only the last four digits of your Aadhaar number. Just as you keep your bank details safe, you should keep your Aadhaar number secure; otherwise, people can misuse your identity to commit fraud.

- Monitoring your bank account transactions: Regularly check your bank account statements for suspicious activity, and enable transaction alerts with your bank so that you are notified of every transaction.
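The masking rule described above (hide the first eight digits, keep the last four) is simple enough to express in a few lines. The sketch below is purely illustrative and is not UIDAI's implementation: `mask_aadhaar` is a hypothetical helper, the sample number is made up, and the function only checks that the input contains 12 digits; it does not verify that the number is a genuine Aadhaar number.

```python
import re


def mask_aadhaar(number: str) -> str:
    """Return a 12-digit Aadhaar number in masked form, e.g. 'XXXX-XXXX-9012'.

    Hypothetical helper for illustration only; it is not UIDAI's code.
    It keeps the last four digits and hides the first eight.
    """
    # Accept common groupings such as '1234 5678 9012' or '1234-5678-9012'.
    digits = re.sub(r"[\s-]", "", number)
    # Only a length/charset check; this does not verify a real Aadhaar number.
    if not re.fullmatch(r"[0-9]{12}", digits):
        raise ValueError("expected a 12-digit Aadhaar number")
    return f"XXXX-XXXX-{digits[-4:]}"


if __name__ == "__main__":
    # Made-up sample number, not a real Aadhaar.
    print(mask_aadhaar("1234 5678 9012"))  # prints XXXX-XXXX-9012
```

Because only the last four digits survive, the masked form is enough to match a card against a record the holder has already shared, but not to reconstruct the full number.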

Conclusion:

It is important to secure your Aadhaar card data effectively. Locking your biometrics is a valuable measure that adds an extra layer of security: it protects your biometric data and other personal information from unauthorized access and prevents scammers from misusing your Aadhaar details. In today's evolving digital landscape, protecting your personal information is of utmost importance. We must all adopt good cyber hygiene practices and these safety and security measures to help establish cyber peace and a harmonious cyberspace.

References:

- https://www.livehindustan.com/bihar/story-cyber-crime-csc-operator-and-bank-sweeper-arrested-in-nawada-cheating-by-cloning-finger-prints-8913667.html

- https://www.indiatoday.in/news-analysis/story/cloning-fingerprints-fake-shell-entities-is-your-aadhaar-as-safe-as-you-may-think-2398596-2023-06-27

Executive Summary:

A viral video claiming to show Israelis pleading with Iran to "stop the war" is not authentic. Our research shows that the footage is AI-generated, likely created with a tool such as Google's Veo, and is not evidence of a real protest. The video features unnatural visuals and errors typical of AI fabrication. It is part of a broader wave of misinformation surrounding the Israel-Iran conflict, in which AI-generated content is widely used to manipulate public opinion. This incident underscores the growing challenge of distinguishing real events from digital fabrications in global conflicts and highlights the importance of media literacy and fact-checking.

Claim:

A verified X user named "Iran, stop the war, we are sorry" posted a video showing people holding placards and the Israeli flag. The caption suggests that Israeli citizens are calling for peace and expressing remorse: "Stop the war with Iran! We apologize! The people of Israel want peace." The user further claims that Israel, having allegedly started the conflict by attacking Iran, is now seeking reconciliation.

Fact Check:

The bottom-right corner of the video displays a "VEO" watermark, suggesting it was generated with Google's AI video tool Veo 3. The video also shows several noticeable inconsistencies, such as robotic, unnatural speech, a lack of natural human gestures, and illegible text on the placards. In one frame, a person wearing a blue T-shirt is holding nothing, while in the next frame an Israeli flag suddenly appears in their hand, indicating a likely AI-generation glitch.

We further analyzed the video with the AI detection tool HIVE Moderation, which indicated a 99% probability that it was generated using artificial intelligence. To validate this finding, we separately examined a keyframe from the video, which likewise returned a 99% probability of being AI-generated. These results strongly indicate that the video is not authentic and was most likely created with advanced AI tools.

Conclusion:

The video is highly likely to be AI-generated, as indicated by the VEO watermark, visual inconsistencies, and a 99% probability from HIVE Moderation. This highlights the importance of verifying content before sharing, as misleading AI-generated media can easily spread false narratives.

- Claim: Video shows Israelis telling Iran, "Stop the war, we are sorry".

- Claimed On: Social Media

- Fact Check: AI-Generated and Misleading

Introduction

Quantum technology involves the study of matter and energy at the sub-atomic level. It uses principles such as superposition and entanglement to provide new capabilities in computing, cryptography, and communication, and can solve certain problems at speeds not possible with classical computers. Unlike classical bits, which are either 0 or 1, qubits can exist in a superposition of states, representing 0, 1, or a combination of both simultaneously. The Union Cabinet approved the National Quantum Mission on 19 April 2023, with a budget allocation of Rs 6,000 crore. The mission will seed, nourish, and scale up scientific and industrial R&D in quantum technology so that India emerges as one of the leaders in developing quantum technologies and their applications.
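For readers who want the notation behind the description of superposition, the standard textbook form is shown below. It is added here for illustration only and is not taken from the mission documents. Measuring the single qubit yields 0 with probability |α|² and 1 with probability |β|²; a register of n qubits is described by 2^n complex amplitudes c_x.

```latex
% Single qubit: a normalized superposition of the basis states |0> and |1>
\[
  |\psi\rangle = \alpha\,|0\rangle + \beta\,|1\rangle,
  \qquad \alpha, \beta \in \mathbb{C},
  \qquad |\alpha|^2 + |\beta|^2 = 1
\]
% n qubits: one complex amplitude for each of the 2^n bit strings x
\[
  |\psi_n\rangle = \sum_{x \in \{0,1\}^n} c_x\,|x\rangle,
  \qquad \sum_{x} |c_x|^2 = 1
\]
```

This exponential growth is one reason even a few dozen qubits are hard to simulate on classical computers: 50 qubits already correspond to 2^50 (about 1.1 × 10^15) amplitudes.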

The Union Minister for Science and Technology and Minister of Earth Sciences, Dr. Jitendra Singh announced the selection of 8 start-ups for support under India’s National Quantum Mission and the National Mission on Interdisciplinary Cyber-Physical Systems (NM-ICPS). The selected start-ups represent diverse quantum tech domains and were chosen via a rigorous evaluation process. These startups are poised to be critical enablers in translating quantum research into practical applications. This start-up selection aligns with India’s broader vision for technological self-reliance and innovation by 2047.

Policy Landscape and Vision

The National Quantum Mission’s main goal is to develop intermediate-scale quantum computers with 50-1000 physical qubits in 8 years, across diverse platforms such as superconducting and photonic technology. The mission deliverables include the development of satellite-based secure quantum communications between ground stations over a range of 2000 km within India, long-distance secure quantum communications with other countries, inter-city quantum key distribution over 2000 km, and multi-node quantum networks with quantum memories.

The National Mission on Interdisciplinary Cyber-Physical Systems aims to promote translational research in cyber-physical systems and associated technologies, and to build prototypes and demonstrate applications that address national priorities. It also seeks to strengthen the research base and develop human resources and skills in these emerging areas. These missions align with broader national initiatives such as Digital India and Make in India, which aim to strengthen India's technological ecosystem.

Selected Startups and Their Innovations

The selected startups align with the goals of India's National Quantum Mission: fostering cutting-edge research and innovation with industrial applications, and positioning India as a global leader in quantum technology. The selections are:

- QNu Labs (Bengaluru): is advancing quantum communication by developing end-to-end quantum-safe heterogeneous networks.

- QPiAI India Pvt. Ltd. (Bengaluru): is building a superconducting quantum computer.

- Dimira Technologies Pvt. Ltd. (IIT Bombay): is creating indigenous cryogenic cables, essential for quantum computing.

- Prenishq Pvt. Ltd. (IIT Delhi): is developing precision diode-laser systems.

- QuPrayog Pvt. Ltd. (Pune): is working on creating optical atomic clocks and related technologies.

- Quanastra Pvt. Ltd. (Delhi): is developing advanced cryogenics and superconducting detectors.

- Pristine Diamonds Pvt. Ltd. (Ahmedabad): is creating diamond materials for quantum sensing.

- Quan2D Technologies Pvt. Ltd. (Bengaluru): is making advancements in superconducting nanowire single-photon detectors.