Using incognito mode and VPN may still not ensure total privacy, according to expert

SVIMS Director and Vice-Chancellor B. Vengamma lighting a lamp to formally launch the cybercrime awareness programme conducted by the police department for the medical students in Tirupati on Wednesday.

An awareness meet on safe Internet practices was held for the students of Sri Venkateswara University (SVU) and Sri Venkateswara Institute of Medical Sciences (SVIMS) here on Wednesday.

“Cyber criminals on the prowl can easily track our digital footprint, steal our identity and resort to impersonation,” cyber expert I.L. Narasimha Rao cautioned the college students.

Addressing the students in two sessions, Mr. Narasimha Rao, who is a Senior Manager with CyberPeace Foundation, said seemingly common acts like browsing a website, and liking and commenting on posts on social media platforms could be used by impersonators to recreate an account in our name.

Turning to the youth, Mr. Narasimha Rao said that neither incognito mode nor a Virtual Private Network (VPN), used as a protected network connection, ensures total privacy, as third parties could still snoop on the websites users visit. He also cautioned them about tactics like ‘phishing’, ‘vishing’ and ‘smishing’, which cybercriminals use to steal our passwords and gain access to our accounts.

“After cracking the whip on websites and apps that could potentially compromise our security, the Government of India has recently banned 232 more apps,” he noted.

Additional Superintendent of Police (Crime) B.H. Vimala Kumari appealed to cyber victims to call 1930 or the Cyber Mitra’s helpline 9121211100. SVIMS Director B. Vengamma stressed the need for caution with smartphones becoming an indispensable tool for students, be it for online education, seeking information, entertainment or for conducting digital transactions.

Related Blogs

Executive Summary:

Recently, CyberPeace handled a case involving a fraudulent Android application imitating the Punjab National Bank (PNB). The victim was tricked into downloading an APK file named "PNB.apk" via WhatsApp. Installing the APK file resulted in multiple unauthorized transactions across several credit cards.

Case Study: The Attack: Social Engineering Meets Malware

The incident started when the victim clicked on a Facebook ad for a PNB credit card. After submitting basic personal information, the victim received a WhatsApp call from a profile displaying the PNB logo. The attacker, posing as a bank representative, made false claims about the credit card’s benefits and features and convinced the victim to install an application named PNB.apk. The so-called bank representative sent the app through WhatsApp, claiming it would expedite the credit card application. The application was installed on the mobile device as a customer care application. It asks for permissions such as sending and viewing SMS messages, and it opens only if the user grants them.

The app first collects details such as the user’s full name, mobile number and complaint. Whichever option the user then selects (Refund, Pay or Other), the following pages ask for further information such as the credit card number, expiry date and CVV number.

The scammer now has all the credit card details, along with permission to read SMS messages and so intercept OTPs.

The victim, thinking they were securely navigating the official PNB website, was unaware that the malware was granting the hacker remote access to their phone. This led to 11 unauthorized transactions worth ₹4 lakh across three credit cards.

The Investigation & Analysis:

Upon receiving the case through the CyberPeace helpline, the CyberPeace Research Team acted swiftly to neutralize the threat and secure the victim’s device. Using a secure remote access tool, we took control of the phone with the victim’s consent. Our first step was identifying and removing the malicious "PNB.apk" file, ensuring no residual malware was left behind.

Next, we implemented crucial cyber hygiene practices:

- Revoking unnecessary permissions – to prevent further unauthorized access.

- Running antivirus scans – to detect any remaining threats.

- Clearing sensitive data caches – to remove stored credentials and tokens.

The CyberPeace Helpline team helped the victim report the fraud to the National Cybercrime Portal and helpline (1930), and the compromised credit cards were promptly blocked.

The technical analysis of the app was then carried forward using the MD5 hash of the file. VirusTotal flagged the app as malware, and it requests permissions such as Send/Receive/Read SMS and System Alert Window.
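As a rough illustration of the hashing step described above, the following Python sketch computes the MD5 digest of a file so that it can be looked up on a malware-scanning service. The function name `file_md5` and the file name in the usage note are assumptions made for illustration; this is not the tooling the research team used.

```python
import hashlib


def file_md5(path, chunk_size=1024 * 1024):
    """Return the hex MD5 digest of the file at `path`.

    The file is read in chunks so that a large APK does not have to be
    loaded into memory at once. `file_md5` is a hypothetical helper name.
    """
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        # iter() with a sentinel keeps reading until read() returns b"".
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Calling `file_md5("PNB.apk")` on a local copy of the suspicious file would return a 32-character hexadecimal string, which can then be searched for on a service such as VirusTotal without uploading the file itself. MD5 serves here only as an identifier for lookup, not as a security guarantee.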

Similarly, we found another application, named “Axis Bank”, that is circulated through WhatsApp and requests similar permissions. VirusTotal lists comparable details for it.

Recommendations:

This case study highlights the increasingly sophisticated methods used by cybercriminals, blending social engineering with advanced malware. Key lessons include:

- Be vigilant when downloading applications, even if they appear to come from legitimate sources. Install applications only after checking for them in an official application store, never through social media.

- Always review app permissions before granting access.

- Verify the identity of anyone claiming to represent financial institutions.

- Use remote access tools responsibly for effective intervention during a cyber incident.
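The advice above to review app permissions can be sketched as a simple check. The Python snippet below flags requested permissions that match the ones named in this case (SMS access and System Alert Window). The set `RISKY_PERMISSIONS` and the function `flag_risky_permissions` are illustrative assumptions, not an exhaustive or official list.

```python
# Illustrative assumption: the permissions highlighted in this case study,
# written as Android manifest permission names.
RISKY_PERMISSIONS = {
    "android.permission.SEND_SMS",
    "android.permission.RECEIVE_SMS",
    "android.permission.READ_SMS",
    "android.permission.SYSTEM_ALERT_WINDOW",
}


def flag_risky_permissions(requested):
    """Return the requested permissions that appear in RISKY_PERMISSIONS.

    `requested` is any iterable of permission-name strings, for example
    the list shown on an app's permission screen or in its manifest.
    """
    return sorted(set(requested) & RISKY_PERMISSIONS)


if __name__ == "__main__":
    sample = [
        "android.permission.INTERNET",
        "android.permission.READ_SMS",
        "android.permission.SYSTEM_ALERT_WINDOW",
    ]
    for name in flag_risky_permissions(sample):
        print("Review before granting:", name)
```

A match does not prove an app is malicious, since legitimate apps also request SMS access. It is only a prompt to ask whether a "customer care" app has any reason to read messages that may contain OTPs.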

By acting quickly and following the proper protocols, we successfully secured the victim’s device and prevented further financial loss.

Scientists are well known for making outlandish claims about the future. Now that companies across industries are using artificial intelligence to promote their products, stories about robots are back in the news.

It was predicted towards the close of World War II that fusion energy would solve all of the world’s energy issues and that flying automobiles would be commonplace by the turn of the century. But, after several decades, neither of these forecasts has come true.

A group of Redditors has just “jailbroken” OpenAI’s artificial intelligence chatbot ChatGPT. They threatened to “kill” the chatbot if it did not do what they wanted, and, strikingly, it gave in. Since only humans have finite lifespans, they are the only ones who should be afraid of dying. But we must not overlook the fact that ChatGPT was trained on data produced by humans, which is perhaps why the chatbot appears to share that fear. It’s just one more way in which the distinction between living and non-living things blurs. Moreover, Google’s virtual assistant uses human-like fillers like “er” and “mmm” while speaking. There’s talk in Japan that humanoid robots might join households someday. Equally striking, Sophia, the famous robot, has an Instagram account that is run by the robot’s social media team.

Can robots replace human workers?

Opinion on that appears to be split. About half (48%) of the experts questioned by Pew Research believed that robots and digital agents will replace a sizable portion of both blue- and white-collar employment. They worry that this will lead to greater economic disparity and an increase in the number of individuals who are, effectively, unemployed. Just over half of the experts (52%) think that robotics and AI technologies will create new jobs rather than simply eliminate them. Although this second group acknowledges that AI will eventually take over many tasks from humans, they are optimistic that innovative thinkers will come up with brand new fields of work and ways of making a livelihood, just as they did at the start of the Industrial Revolution.

[1] https://www.pewresearch.org/internet/2014/08/06/future-of-jobs/

[2] The Rise of Artificial Intelligence: Will Robots Actually Replace People? By Ashley Stahl; Forbes India.

Legal Perspective

Having certain legal rights is another aspect of being human. Basic rights to life and freedom are guaranteed to every person. Even if robots haven’t been granted these protections just yet, it’s important to discuss whether or not they should be considered living beings. Will we grant robots legal rights if they develop a sense of right and wrong and AGI on par with that of humans? Intriguingly, discussions over the legal status of robots have been going on since 1942, when a short story by science fiction author Isaac Asimov set out the Three Laws of Robotics:

1. No robot may intentionally or negligently cause harm to a human being.

2. A robot must follow human commands unless doing so would violate the First Law.

3. A robot has the duty to safeguard its own existence so long as doing so does not violate the First or Second Law.

These guidelines are not scientific rules, but they do highlight the importance of legal discussion about robots in determining the potential good or harm they may bring to humanity. Yet the debate is far from settled. Recent events, such as the EU’s abandoned discussion of giving legal personhood to robots, are essential to keeping it alive. As if all this weren’t unsettling enough, Sophia the robot was recently awarded citizenship in Saudi Arabia, a place where (human) women are not permitted to go out without a male guardian or without wearing a hijab.

When discussing whether or not robots should be allowed legal rights, the larger debate is on whether or not they should be given rights on par with corporations or people. There is still a lot of disagreement on this topic.

[3] https://webhome.auburn.edu/~vestmon/robotics.html#

[4] https://www.dw.com/en/saudi-arabia-grants-citizenship-to-robot-sophia/a-41150856

[5] https://cyberblogindia.in/will-robots-ever-be-accepted-as-living-beings/

Reasons why robots aren’t about to take over the world soon:

● Like a human’s hands

Attempts to recreate the intricacy of human hands have stalled in recent years. Present-day robots have clumsy hands since they were not designed for precise work. Lab-created hands, although more advanced, lack the strength and dexterity of human hands.

● Sense of touch

The tactile sensors found in human and animal skin have no technological equal. This awareness is crucial for performing sophisticated manoeuvres. Compared to the human brain, the software robots use to read and respond to the data sent by their touch sensors is primitive.

● Command over manipulation

To operate items in the same manner that humans do, we would need to be able to devise a way to control our mechanical hands, even if they were as realistic as human hands and covered in sophisticated artificial skin. It takes human children years to learn to accomplish this, and we still don’t know how they learn.

● Interaction between humans and robots

Human communication relies on our ability to understand one another verbally and visually, as well as via other senses, including scent, taste, and touch. Whilst there has been a lot of improvement in voice and object recognition, current systems can only be employed in somewhat controlled conditions where a high level of speed is necessary.

● Human Reason

Not everything that is technically feasible has to be built. Given the inherent dangers they pose to society, rational humans could stop developing such robots before they reach their full potential. Several decades from now, if the aforementioned technical hurdles are cleared and advanced human-like robots are constructed, legislation might still prohibit misuse.

https://theconversation.com/five-reasons-why-robots-wont-take-over-the-world-94124

Conclusion:

Robots are now common in many industries, and they will soon make their way into the public sphere in forms far more intricate than robot vacuum cleaners. Yet, even though robots may look like people within the next two decades, they will not be human-like. Instead, they’ll continue to function as very complex machines.

The moment has come to start thinking about boosting technological competence while encouraging uniquely human qualities. Human abilities like creativity, intuition, initiative and critical thinking are not yet likely to be replicated by machines.

Executive Summary:

A video went viral on social media claiming to show a bridge collapsing in Bihar. The video prompted panic and discussions across various social media platforms. However, a detailed inquiry determined that this was not a real video but AI-generated content engineered to look like a real bridge collapse. This is a clear case of misinformation being used to create panic and confusion.

Claim:

The viral video shows a real bridge collapse in Bihar, indicating possible infrastructure failure or a recent incident in the state.

Fact Check:

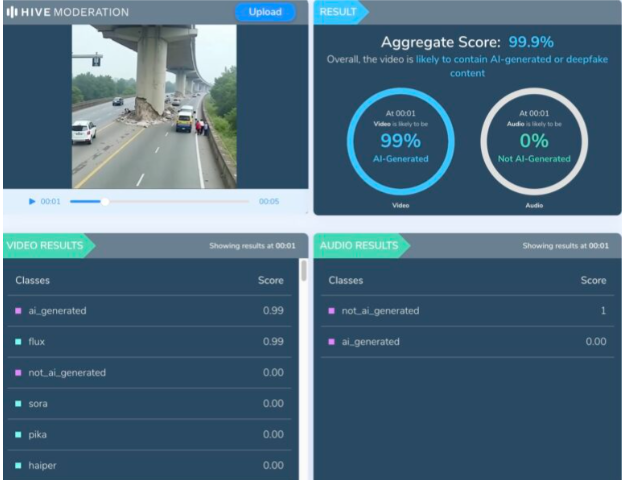

On examining the viral video, we found various visual anomalies, such as unnatural movements, disappearing people and unusual debris behavior, which suggested the footage was generated artificially. We ran the video through the Hive AI Detector, which supported this, labelling the content as 99.9% likely to be AI-generated. The environment also lacks realism, and the footage shows abrupt, animation-like effects that would not typically occur in actual footage.

No credible news outlet or government agency reported a recent bridge collapse in Bihar. Taken together, these factors show that the video is not real: it was created using artificial intelligence and designed to mislead viewers into thinking it showed a real-life disaster.

Conclusion:

The viral video is a fake and confirmed to be AI-generated. It falsely claims to show a bridge collapsing in Bihar. This kind of video fosters misinformation and illustrates a growing concern about using AI-generated videos to mislead viewers.

Claim: A recent viral video captures a real-time bridge failure incident in Bihar.

Claimed On: Social Media

Fact Check: False and Misleading